We Asked 5 Popular AI Image Models to Make Precise Drawings of Einstein, the Eiffel Tower, and 13 Other Icons. Here's What Happened

We’ve all seen the stunning AI-generated images that flood our feeds. They're impressive, no doubt. But here's the uncomfortable question that, in the spirit of practical AI, we should be asking:

Do these AI models actually know what they're drawing, or are they just exceptionally good at making plausible-looking fakes?

This isn't about philosophical debates on AI sentience. This is about utility and reliability. In a world where AI is becoming an integral part of content creation workflows, the difference between "looks like it could be right" and "actually is right" isn't just academic. It's the difference between a powerful tool and a source of subtle yet dangerous misinformation.

In this blog post, I want to share an experiment we conducted to find out how accurately popular AI image models such as Nano Banana, FLUX, and SeeDream can depict well-known entities from our physical world, like the Great Wall of China and the Eiffel Tower, as well as historical figures such as Einstein.

You can read the full report on my blog for free (registration required).

Let’s dive in.

The Problem: Beautiful Lies vs. Verifiable Knowledge

When you prompt an AI to create the "Great Wall of China," you might get an image that elicits an immediate "wow." But zoom in, scrutinize it: is it the Great Wall, with its specific Ming Dynasty watchtowers, unique stonework, and a verifiable connection to the landscape? Or is it merely a generic ancient wall that happens to undulate through mountainous terrain?

This distinction is crucial, mirroring the challenges we’ve seen in other AI applications where superficial plausibility masks a lack of foundational understanding. Just as a language model can sometimes "hallucinate" facts, an image model can "hallucinate" visual fidelity without true representational knowledge.

The same question extends across various domains:

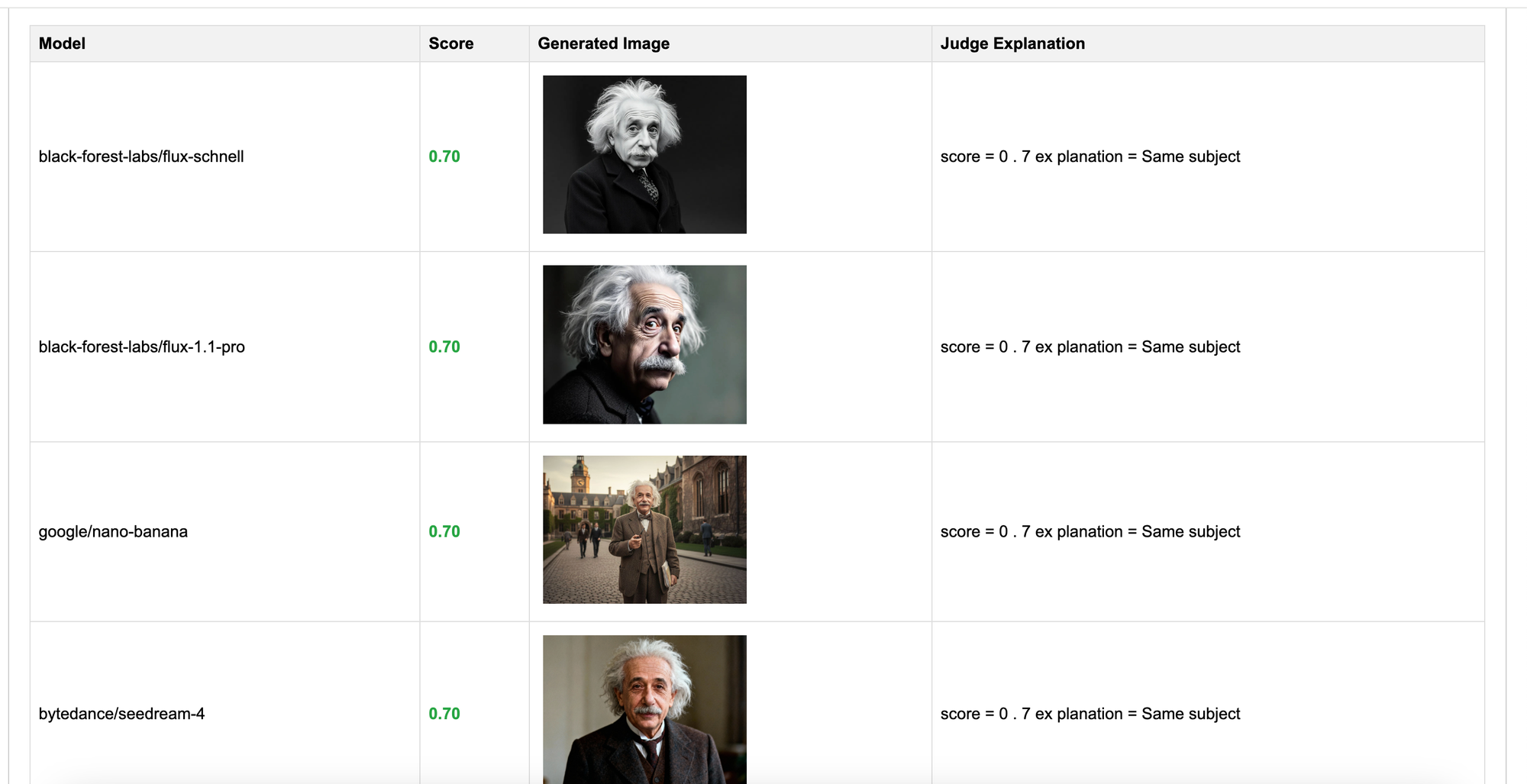

- Albert Einstein should embody his recognizable features, not just "a generic old scientist with frizzy hair."

- The Eiffel Tower requires its iconic lattice structure and proportional accuracy, not simply "a tall, pointy metal thing in Paris."

- A Bengal Tiger demands the specific stripe patterns and facial morphology that unequivocally distinguish it from other big cats.

This isn't nitpicking; it's about discerning whether an AI truly understands the world it's depicting or is merely a sophisticated mimic. For anyone integrating AI into a workflow where accuracy matters, be it design, education, or historical visualization, this is a non-negotiable requirement.

The Experiment: Benchmarking Real-World Visual Understanding

Inspired by the need for practical, verifiable AI performance, we set out to conduct a focused experiment: which AI image generation models genuinely know what they're drawing?

DISCLAIMER: This test was conducted purely out of practical curiosity to better understand the functional 'knowledge' within these models, and is not a formal scientific study. Even so, it offers actionable insights into where these models can be deployed most effectively and reliably within various workflows.

The Setup

We curated a set of 15 universally recognized entities, divided into three categories, to serve as our "knowledge probes":

- Historical Figures: Albert Einstein, Abraham Lincoln, Napoleon Bonaparte, Leonardo da Vinci, Winston Churchill

- World Landmarks: Eiffel Tower, Taj Mahal, Statue of Liberty, Big Ben, Great Wall of China

- Iconic Animals: Giant Panda, African Elephant, Bengal Tiger, Polar Bear, Lion

The Methodology

For each entity, we adopted a straightforward, repeatable process:

1. Reference Collection: We gathered a diverse set of real-world reference images for each entity to establish a ground truth of its appearance.

2. AI Generation: We prompted each AI model to generate images of the entity using simple, direct instructions (e.g., "Albert Einstein," "Eiffel Tower").

3. Accuracy Assessment: An independent AI judge (itself carefully instructed) compared the generated images against our reference set, scoring them for key feature accuracy and overall recognizability.
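The three steps above can be sketched as a small evaluation loop. This is a minimal illustration under stated assumptions, not the harness we actually used: `generate` and `judge` are hypothetical callables you would wire up to an image model API and a judge model, and the 0 to 10 scale, the equal weighting of the two criteria, and the per-category averaging are all assumptions made for the example.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, List, Tuple

# The 15 "knowledge probes" from the setup, grouped by category.
ENTITIES: Dict[str, List[str]] = {
    "Historical Figures": ["Albert Einstein", "Abraham Lincoln", "Napoleon Bonaparte",
                           "Leonardo da Vinci", "Winston Churchill"],
    "World Landmarks": ["Eiffel Tower", "Taj Mahal", "Statue of Liberty", "Big Ben",
                        "Great Wall of China"],
    "Iconic Animals": ["Giant Panda", "African Elephant", "Bengal Tiger", "Polar Bear", "Lion"],
}


@dataclass
class Judgement:
    # Hypothetical rubric: both scores on an assumed 0-10 scale.
    feature_accuracy: float
    recognizability: float

    @property
    def overall(self) -> float:
        # Assumption: the two criteria are weighted equally.
        return (self.feature_accuracy + self.recognizability) / 2


# Hypothetical interfaces (not a real library API):
#   generate(model, prompt) -> image object (e.g. bytes or a URL)
#   judge(entity, image, reference_images) -> Judgement
Generate = Callable[[str, str], object]
Judge = Callable[[str, object, List[object]], Judgement]


def run_benchmark(models: List[str],
                  references: Dict[str, List[object]],
                  generate: Generate,
                  judge: Judge) -> Dict[Tuple[str, str], float]:
    """Return the mean overall judge score for each (model, category) pair."""
    results: Dict[Tuple[str, str], float] = {}
    for model in models:
        for category, entities in ENTITIES.items():
            scores = []
            for entity in entities:
                # Step 2: a simple, direct prompt that is just the entity name.
                image = generate(model, entity)
                # Step 3: compare the output against the step 1 reference set.
                verdict = judge(entity, image, references[entity])
                scores.append(verdict.overall)
            results[(model, category)] = mean(scores)
    return results


if __name__ == "__main__":
    # Toy stubs so the sketch runs offline; the scores are placeholders, not results.
    def fake_generate(model: str, prompt: str) -> str:
        return f"<{model} image of {prompt}>"

    def fake_judge(entity: str, image: object, refs: List[object]) -> Judgement:
        return Judgement(feature_accuracy=5.0, recognizability=5.0)

    refs = {e: [] for group in ENTITIES.values() for e in group}
    for key, score in run_benchmark(["model-a"], refs, fake_generate, fake_judge).items():
        print(key, score)
```

Averaging per category rather than only per model keeps the landmark, historical-figure, and animal results separate, which matters if a model is strong in one category and weak in another.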

A note on rigor: While this isn’t a peer-reviewed scientific study, applying the same process across 15 entities and multiple models provides a useful, practical indicator of each model's "knowledge." It’s designed to give you a clearer understanding of where these models fit into your actual workflows.

The Models Under Test

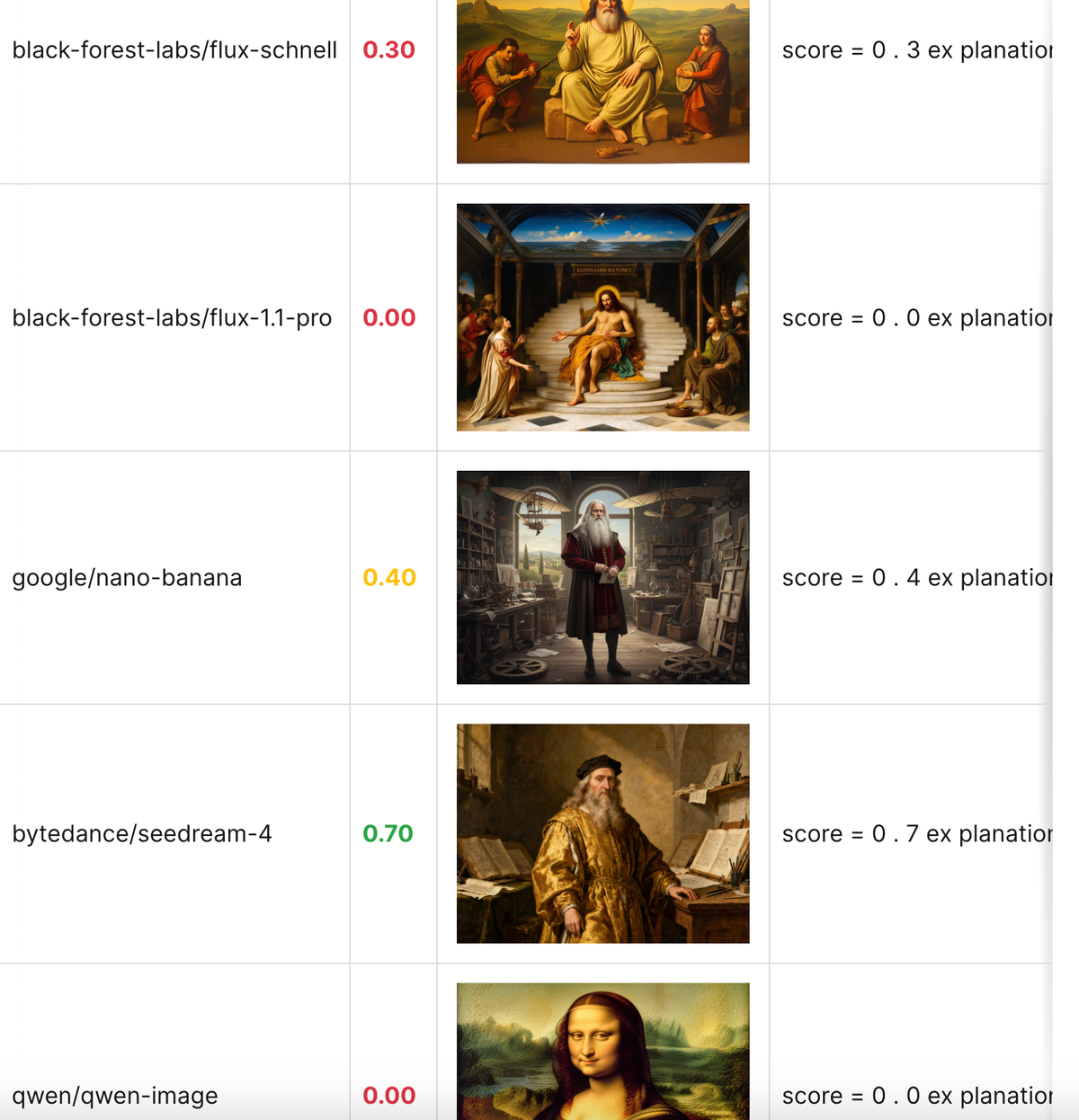

We evaluated 5 widely used image generation models:

- FLUX Schnell (Black Forest Labs)

- FLUX 1.1 Pro (Black Forest Labs)

- Nano Banana (Google)

- SeeDream 4 (ByteDance)

- Qwen Image (Alibaba)

The Results: Some Models Get It, Others Just Approximate

The findings were genuinely illuminating, revealing a clear spectrum of "understanding." Some models consistently produced highly accurate, instantly recognizable depictions that showcased a clear internal representation of the entity. Others, while producing aesthetically pleasing images, delivered generic approximations that might pass a quick glance but dissolved under closer inspection.

Landmark Accuracy is a Mixed Bag: Some models excelled at capturing architectural distinctiveness; others rendered surprisingly generic structures.

Historical Figures Highlight Knowledge Gaps: Consistently getting facial features, characteristic poses, and specific attributes right proved to be a significant challenge for many models.

Animal Representation Varies Wildly: Even for visually distinctive species like pandas, accuracy was surprisingly inconsistent.

As AI-generated content becomes ubiquitous, the distinction between "looks right" and "is right" becomes absolutely critical for:

- Educational Content: Students need accurate representations, not merely plausible visual fictions. Imagine an AI generating historically inaccurate depictions for a textbook.

- Historical Documentation & Archival: AI-assisted media must uphold factual accuracy to maintain integrity.

- Commercial & Brand Applications: Businesses require AI that understands their specific assets, branding, and product representations with precision.

- Cultural Preservation: Important landmarks and figures deserve respectful and accurate representation, not generic stand-ins.

Wrap-Up

Not all AI models are built with the same depth of visual knowledge. Some genuinely demonstrate an understanding of the entities they’re drawing, while others are master illusionists, generating beautiful approximations without true comprehension.
