The Art of Code Generation with Cline (Claude + OpenAI)

Unlock the full potential of code assistants like Cline and Copilot with key best practices! This guide delves into common pitfalls—including quality risks and cost overruns—and offers actionable strategies like modular coding, thorough testing, and effective prompt-writing. Elevate your coding e...

Code assistants like Cline (previously Claude-Dev) and Copilot are invaluable tools that can save significant time. However, they come with pitfalls that can waste resources, hurt quality, and drive up costs. While I've discussed these issues in previous posts, this blog entry summarizes the most common challenges and offers best practices for even better results.

If you're not familiar with Claude-Dev (now Cline), it might be worth checking out. In short, it is an incredibly powerful open-source code assistant that works with any LLM capable of reasoning and code generation.

Outline

- Start with Unit Tests/Documentation/Architecture

- Build Features Incrementally

- Keep It Modular

- Commit Early, Commit Often

- Choose Your Model Wisely

- Watch Your Token Count

- Test Thoroughly

- Write Clear Prompts

- Think Security First

- Set Up Your Environment Properly

- Review, Understand, Then Implement

- Manage Multiple Projects Carefully

- Simplify Complex Tasks

- Stay Updated on Model Names and Capabilities

- Encourage Continuous Learning

- Debug Logging Helps

- Mock Up Design Changes Using Claude Artifacts

- Alternative Approaches When Stuck

1. Start with Unit Tests/Documentation/Architecture

Before diving into coding, use AI to generate architecture documentation and diagrams. This ensures a clear and scalable structure from the outset.

Example Prompt:

"Create a Mermaid sequence diagram for a user registration process in a web app."

Generated Diagram:

[Sequence diagram. Participants: User, Web App, Server, Database. Messages, in order: Fill Registration Form, Submit Registration Data, Store User Data, Confirmation, Registration Successful Message.]

I discussed this approach in an earlier post, which you can read here:

Architecture-driven code generation with Claude --- a complete tutorial

Another technique is to include coding guidelines, best practices, and common issues in the project's documentation. Claude-Dev will usually consider them when generating code. This helps avoid common mistakes and messy code that Claude might produce if not specifically directed or corrected by a human.

2. Build Features Incrementally!

Add one feature at a time, updating your architecture diagrams before implementation. This prevents overwhelming the AI and ensures each feature is fully functional before moving on. Incremental development allows you to save intermediate working results, so if something goes wrong, you don't have to start over. It also leverages Claude-Dev's cache efficiently, saving on costs.

Example Workflow:

- Implement User Registration:

- Prompt Claude-Dev to create the user registration feature.

- Commit the changes to version control.

- Test thoroughly to ensure it's working.

- Add Email Verification:

- Update the architecture diagram to include email verification.

- Prompt Claude-Dev to implement the email verification feature.

- Commit and test again.

Example Prompt:

"Update the previous diagram and code to include email verification after user registration."

You can find more examples in an earlier post with a real-world example:

From Idea to Working App with Claude Dev and GPT-4 (Part II)

3. Keep It Modular!

Encourage the AI to generate modular code. Modular code is easier to maintain, debug, and update. Claude-Dev can sometimes create monolithic files; emphasizing modularity helps prevent this.

Example Prompt:

"Generate a modular folder structure for a React Todo app, separating components, services, and utilities."

Folder Structure:

    /src
        /components
            TodoList.jsx
            TodoItem.jsx
        /services
            api.js
        /utilities
            helpers.js

In real-world applications, you may have a wonderful structure on day one, but the code does not grow linearly across all files; some files may become monolithic and messy. If you notice this growth (e.g., a large number of LOC), you can simply ask Claude-Dev to refactor the code and split it into files.

✍️ If you work often with Claude-Dev, refactoring code into smaller chunks will save you a lot of money and time.

Example:

Here is an example where I simply ask Claude-Dev to split a monolithic ChatInterface class, and as you can see, it splits it into several files. When I need to modify something, it only reads and edits the relevant files, saving time and costs by avoiding unrelated code changes.

Another smart approach is to keep files that you frequently change both modular and small. This can save you a lot of time and money.
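To notice monolithic growth early, a small script can list the files that have crossed a line-count threshold. This is a minimal sketch: the 300-line limit, the file suffixes, and the `src` folder are assumptions to adjust for your project.

```python
from pathlib import Path


def oversized_files(root: str, max_lines: int = 300,
                    suffixes: tuple = (".js", ".jsx", ".ts", ".tsx")) -> list:
    """Return (path, line_count) for source files longer than max_lines, largest first."""
    results = []
    for path in Path(root).rglob("*"):
        # Skip directories, dependency folders, and non-source files.
        if not path.is_file() or "node_modules" in path.parts or path.suffix not in suffixes:
            continue
        with path.open(encoding="utf-8", errors="ignore") as handle:
            line_count = sum(1 for _ in handle)
        if line_count > max_lines:
            results.append((str(path), line_count))
    return sorted(results, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Assumed project layout: sources live under ./src
    for file_path, lines in oversized_files("src"):
        print(f"{lines:6d}  {file_path}")
```

The files at the top of this list are usually the best candidates for a "split this into smaller files" prompt.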

4. Commit Early, Commit Often!

Use version control carefully. Commit after every feature or significant change to ensure that you can easily roll back if necessary. This is crucial because Claude-Dev can sometimes generate code that introduces problems; frequent commits allow you to revert to a working state.

Example Commands:

git add .

git commit -m "Implement user registration feature"

git push origin main

If, between two commits, you have accepted changes that break the code, it can be difficult for Claude to know what it broke, especially if you have already progressed with the code generation. The best solution is to get the git diff, give it to Claude, and ask it to fix the problem, regardless of Claude's current context.

Sample Prompt:

"You screwed up the search feature between these two commits.

diff --git a/src/app/page.tsx b/src/app/page.tsx

index 92e740e..41357aa 100644

--- a/src/app/page.tsx

+++ b/src/app/page.tsx

@@ -4,7 +4,6 @@ import { useState, useEffect } from 'react';

import { useSession, signIn, signOut } from 'next-auth/react';

....
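Building such a prompt can be scripted. The sketch below assumes `git` is on your PATH and that you know one commit where the feature worked and one where it is broken. The function names and the prompt wording are illustrative, not part of Cline.

```python
import subprocess


def git_diff(good_commit: str, bad_commit: str, path: str = ".") -> str:
    """Return the diff between two commits, limited to `path`."""
    completed = subprocess.run(
        ["git", "diff", good_commit, bad_commit, "--", path],
        capture_output=True, text=True, check=True,
    )
    return completed.stdout


def build_fix_prompt(feature: str, diff_text: str) -> str:
    """Wrap a diff in a short, self-contained prompt (wording is illustrative)."""
    return (
        f"The {feature} worked before these changes and is broken after them.\n"
        "Find the change that broke it and fix only that. Don't change anything else.\n\n"
        f"{diff_text.strip()}\n"
    )
```

For example, `build_fix_prompt("search feature", git_diff("<good-commit>", "<bad-commit>", "src/app/page.tsx"))` produces text you can paste into a fresh session, so the fix does not depend on what is left in the conversation.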

Another common problem is that when the conversation gets too long, Claude and other models tend to produce placeholders instead of the actual code. If you accept such output without checking it, you may end up losing functionality instead of adding or modifying it. In this case, I strongly recommend resetting the conversation and starting a new session; you are more likely to get complete code.

Alternative Approach:

If the placeholders merely stand in for existing code that should stay unchanged, manually copy that code from the original file into the same location in the file being edited and save it. Claude-Dev will pick up your changes and, in this case, will not replace the original code with placeholders again.
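Placeholders are easy to miss in a long file, so it can help to scan generated output before accepting it. This is a rough sketch; the patterns are my guesses at typical placeholder comments and will need tuning for your codebase.

```python
import re

# Hypothetical patterns for comments that stand in for omitted code; extend as needed.
PLACEHOLDER_PATTERNS = [
    re.compile(r"(//|#|/\*)\s*\.\.\."),
    re.compile(r"(//|#|/\*)\s*(rest of|remaining|existing|previous)\b.*\b(code|implementation|logic)",
               re.IGNORECASE),
    re.compile(r"(//|#|/\*).*\b(remains|stays) (the same|unchanged)", re.IGNORECASE),
]


def find_placeholders(source: str) -> list:
    """Return (line_number, line) pairs that look like elided-code placeholders."""
    hits = []
    for number, line in enumerate(source.splitlines(), start=1):
        if any(pattern.search(line) for pattern in PLACEHOLDER_PATTERNS):
            hits.append((number, line.strip()))
    return hits
```

A non-empty result is a signal to stop and restore the missing code before saving the file.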

Recovery from Previous Mistakes:

If you encounter issues after a recent change, check out a previously running state from Git into a temporary directory (e.g., /tmp) and compare it with the current non-working state. For example:

git worktree add /tmp/project-working <known-good-commit>

Then, pass both the previous state and the current state to Cline and ask for assistance in identifying why the previous version was functioning correctly. This method can help pinpoint the changes that led to the issue.
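Before handing both states to Cline, it helps to narrow them down to the files that actually differ. The sketch below uses Python's standard `filecmp` module. The directory arguments and the ignore list are assumptions.

```python
import filecmp
from pathlib import Path


def changed_files(good_dir: str, current_dir: str, ignore=("node_modules", ".git")) -> list:
    """List relative paths that differ or exist on only one side of the two trees."""
    changed = []

    def walk(comparison: filecmp.dircmp, prefix: Path) -> None:
        # diff_files: present in both trees but different.
        # left_only / right_only: present in only one tree.
        for name in comparison.diff_files + comparison.left_only + comparison.right_only:
            changed.append(str(prefix / name))
        for name, sub in comparison.subdirs.items():
            walk(sub, prefix / name)

    # Note: dircmp treats files with identical type, size, and modification
    # time as equal without reading their content.
    walk(filecmp.dircmp(good_dir, current_dir, ignore=list(ignore)), Path("."))
    return sorted(changed)
```

Passing only the listed files keeps the prompt small and points the model at the likely cause.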

5. Choose Your Model Wisely!

Pay attention to the AI model you're using. Different tasks may require different models for optimal performance. The default claude-sonnet 3.5 model is recommended for reasoning tasks, as it is the most suitable and reliable. Other models offered by Claude-Dev may not perform as well in complex reasoning scenarios due to their lack of planning capabilities compared to Sonnet.

Example Prompt:

"Using claude-sonnet 3.5, generate unit tests for the login function."

Notes:

- While Claude-Dev supports multiple models, experience shows that claude-sonnet 3.5 provides the best results for most coding tasks.

- Be cautious, as Claude-Dev may sometimes replace models without explicit notification. Always verify that the code is using the intended model to avoid unintended costs or performance issues.

6. Watch Your Token Count!

Keep an eye on costs, especially when generating large amounts of code. Utilize Claude-Dev's token tracking and cost analysis features to stay within budget.

How to Monitor Tokens:

- In Claude-Dev: Token counts and costs can be viewed in the Claude summary at the top of the page.

- Cost Analysis Tools: Use the built-in tools within Claude-Dev for detailed cost analysis.

Important Notes:

- When using Claude-Dev through other deployments like AWS Bedrock, prompt caching might not be available as of today. This could increase costs significantly, since caching can cut the cost of repeated input tokens by up to 90%.

- Only when using Claude-Dev directly through Anthropic's API do you have access to caching features.

- Avoid using cache-unfriendly prompts or initiating new tasks unnecessarily, as this can reset the cache and increase costs.
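To get a feel for how much caching matters, here is a back-of-the-envelope cost estimate. The per-million-token prices are illustrative assumptions, not quoted prices; replace them with the current figures from your provider's pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD per million tokens. Defaults are illustrative assumptions."""
    input: float = 3.00
    cache_write: float = 3.75
    cache_read: float = 0.30
    output: float = 15.00


def request_cost(fresh_input: int, cache_write: int, cache_read: int,
                 output: int, pricing: Pricing = Pricing()) -> float:
    """Estimate the cost in USD of one request from its token counts."""
    total = (fresh_input * pricing.input
             + cache_write * pricing.cache_write
             + cache_read * pricing.cache_read
             + output * pricing.output)
    return total / 1_000_000


if __name__ == "__main__":
    # The same 100,000-token context, sent uncached vs. read from the cache.
    print(f"uncached: ${request_cost(100_000, 0, 0, 1_000):.3f}")
    print(f"cached:   ${request_cost(0, 0, 100_000, 1_000):.3f}")
```

With these assumed prices, re-sending a 100,000-token context costs about $0.30 uncached versus about $0.03 as a cache read, which is why resetting the cache unnecessarily gets expensive quickly.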

We discussed Claude caching in more detail in an earlier post:

Is Prompt Caching the New RAG?

7. Test Thoroughly!

Always test generated code. AI can make mistakes, and thorough testing ensures reliability. Claude-Dev may generate code that doesn't work as expected; catching problems early saves time.

Example Prompt:

"Generate unit tests for the user registration function using Jest."

8. Write Clear Prompts!

The clearer your prompt, the better the code you'll get. Avoid ambiguity to achieve accurate results. Claude-Dev sometimes tries to rationalize changes it thinks are safe to make; explicit instructions help prevent unintended changes.

Example:

- Ambiguous Prompt: "Create a login function."

- Clear Prompt: "Create a login function in Node.js that authenticates users using JWTs. Do not modify other existing functions."

Tips:

- Add "Don't change anything else" at the end of your prompts to ensure only the intended parts are modified.

- Be specific about the technologies, libraries, and frameworks you want to use.

When editing a user interface, it is important to be very precise about which elements you are referring to. For example, saying that the toolbar is misplaced may not lead to the desired result if there is another toolbar that Cline is trying to change instead. To avoid this confusion, refer to unique, concrete IDs or classes (e.g., SidePanel_form) of UI elements instead.

9. Think Security First!

Always review generated code for security implications. Ensure that no sensitive data is exposed and that best security practices are followed. Claude-Dev might sometimes generate client-side code that should be server-side for security reasons.

If you don't explicitly tell the LLM to follow best practices, it may make changes that are quick and dirty, resulting in messy code and vulnerabilities.

Example Prompt:

"Verify that API keys are not exposed on the client side and are securely stored on the server."

Common Security Mistake:

- Exposing API Keys: Including API keys in client-side code or in public repositories.

Solution:

- Use environment variables stored in a .env file that is not checked into version control.

- Ensure API calls that require sensitive information are made from the server side, not the client side.
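On the server side, reading the key from the environment and failing fast when it is missing might look like the sketch below. The variable name `EXAMPLE_API_KEY` is hypothetical, and how the .env file gets loaded into the environment depends on your framework.

```python
import os


def require_env(name: str) -> str:
    """Return an environment variable or fail fast with a clear message."""
    value = os.environ.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Missing required environment variable: {name}")
    return value


def masked(secret: str, visible: int = 4) -> str:
    """Mask a secret for logs, keeping only the last few characters."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]


if __name__ == "__main__":
    # EXAMPLE_API_KEY is a hypothetical name; the key itself never appears in source code.
    api_key = require_env("EXAMPLE_API_KEY")
    print("Using key", masked(api_key))
```

Because the key is only ever read on the server and only logged in masked form, it never reaches the client bundle or the repository.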

10. Set Up Your Environment Properly!

Ensure that all necessary libraries and environment variables are set up correctly to avoid runtime errors and streamline development. Claude-Dev may not automatically set up the environment and install the required libraries.
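A small preflight check can catch missing pieces before the first run. The sketch below is for a Python project and uses only the standard library. The module and variable names in the usage example are placeholders.

```python
import importlib.util
import os


def missing_requirements(modules: list, env_vars: list) -> dict:
    """Report missing top-level modules and unset environment variables."""
    return {
        # find_spec returns None when a top-level module cannot be found.
        "modules": [m for m in modules if importlib.util.find_spec(m) is None],
        "env_vars": [v for v in env_vars if not os.environ.get(v)],
    }


if __name__ == "__main__":
    # Placeholder names; list what your project actually needs.
    report = missing_requirements(["json", "sqlite3"], ["EXAMPLE_API_KEY"])
    for kind, names in report.items():
        if names:
            print(f"Missing {kind}: {', '.join(names)}")
```

Running this first turns a confusing runtime error into a short list of things to install or configure.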

11. Review, Understand, Then Implement!

Always review and understand the generated code before implementing it. This ensures that you can spot potential problems and maintain control over your codebase. Claude-Dev may sometimes make changes that don't match your intentions.

Example Prompt:

"Explain how the authentication middleware works and identify any potential issues."

Understanding the Code:

- Review each function and its purpose.

- Check for security vulnerabilities or inefficient logic.

- Ensure that the code aligns with your project's architecture and standards.

12. Manage Multiple Projects Carefully

Avoid having more than one project open in the same workspace. This can confuse Claude-Dev when you ask for changes, as it may apply them to the wrong project.

Tips:

- Use separate workspaces or IDE windows (e.g., separate VSCode windows) for different projects.

- Close unrelated projects before starting a new task with Claude-Dev.

Note:

- Keeping only one project in your Claude-Dev workspace helps the AI focus on the correct codebase.

13. Simplify Complex Tasks

If Claude-Dev struggles with a complex task in a large project, try simplifying the problem or isolating it in a smaller, simpler context.

Example:

- If a feature isn't working in your main project, create a simple HTML page or a minimal project to test the feature independently.

Benefit:

- Helps identify whether the issue is with your code or with Claude-Dev's understanding.

14. Stay Updated on Model Names and Capabilities

Ensure that the generated code uses the correct models (e.g., GPT-4, GPT-3.5-turbo) to avoid unintended costs or performance issues.

Tips:

- Claude-Dev may not have the latest model names or API changes.

- Verify that the code generated uses the intended models.

- Be cautious, as Claude-Dev might sometimes replace models without explicit notification.

Example Prompt:

"Ensure the code uses the 'gpt-3.5-turbo' model for OpenAI API requests, not 'gpt-4'."
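You can also verify this mechanically after each generation step. The sketch below scans source text for quoted model names and reports any that are not on your allow-list. The regular expression is an assumption about how model names appear in your code.

```python
import re

# Assumption: model identifiers appear as quoted strings starting with a known prefix.
MODEL_PATTERN = re.compile(r"""["']((?:gpt|claude|o1)-[A-Za-z0-9.\-]+)["']""")


def unexpected_models(source: str, allowed: set) -> list:
    """Return (line_number, model_name) for model strings not in `allowed`."""
    findings = []
    for number, line in enumerate(source.splitlines(), start=1):
        for name in MODEL_PATTERN.findall(line):
            if name not in allowed:
                findings.append((number, name))
    return findings
```

Running it over changed files before each commit makes a silent model swap visible immediately.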

15. Encourage Continuous Learning

Help Claude-Dev learn from its mistakes and improve over time by instructing it to create "Lessons Learned" documents when problems occur.

Example Prompt:

"Whenever there are more than 2 errors in a task you are working on and it finally works, automatically save the result to a file named 'lessons_learned.md' without me explicitly asking for it."

Benefit:

- Claude-Dev can refer to this document in future tasks to avoid repeating the same mistakes.

- Improves efficiency and code quality over time.
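If you also want to add entries yourself, a tiny helper keeps the file format consistent. This is a sketch: the entry layout is my own assumption; only the file name 'lessons_learned.md' comes from the prompt above.

```python
from datetime import date
from pathlib import Path
from typing import Optional


def record_lesson(problem: str, fix: str, path: str = "lessons_learned.md",
                  today: Optional[date] = None) -> None:
    """Append one dated entry to the lessons file, creating it if needed."""
    stamp = (today or date.today()).isoformat()
    entry = f"\n## {stamp}\n\n- Problem: {problem}\n- Fix: {fix}\n"
    file = Path(path)
    if not file.exists():
        file.write_text("# Lessons Learned\n", encoding="utf-8")
    with file.open("a", encoding="utf-8") as handle:
        handle.write(entry)
```

Short, dated entries with one problem and one fix each are easy for the model to scan at the start of a new task.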

I discussed this approach in an earlier post, which you can read here:

Iterative Refinement: How ChatGPT Enhances Its Own Content Through Self-Review

16. Debug Logging Helps

You will often encounter code that does not work as expected yet raises no errors. This can be tedious to explain when you need Claude to help fix it. Instead, let Claude add traces and logs in the area you need help with, run the application, and then pass the log output back to Claude. This way, you can both see how the application behaves from a data flow standpoint.

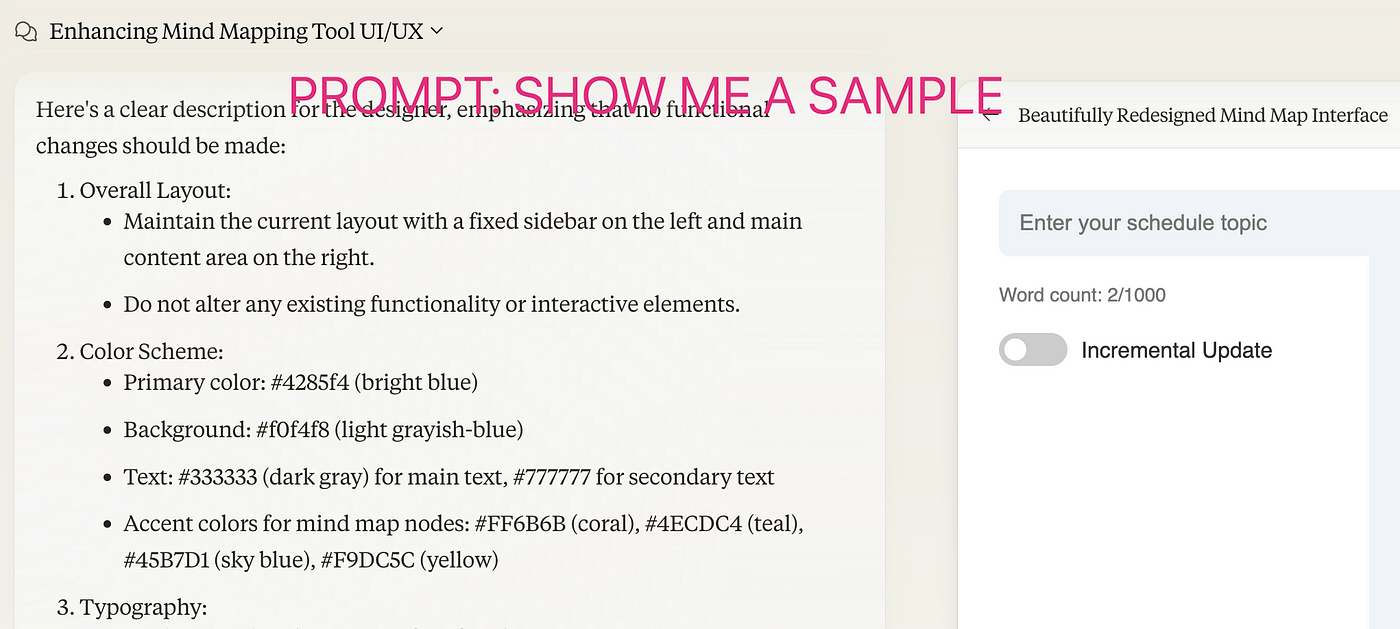
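As an illustration of what such traces can look like, here is a minimal sketch using Python's standard `logging` module. The `search` function is a hypothetical stand-in for the code you are debugging.

```python
import logging

logging.basicConfig(level=logging.DEBUG, format="%(levelname)s %(name)s %(message)s")
log = logging.getLogger("search")


def search(items: list, query: str) -> list:
    """Case-insensitive substring search with a log line at each step."""
    log.debug("input: query=%r, item_count=%d", query, len(items))
    normalized = query.strip().lower()
    log.debug("normalized query=%r", normalized)
    matches = [item for item in items if normalized in item.lower()]
    log.debug("output: %d match(es): %r", len(matches), matches)
    return matches


if __name__ == "__main__":
    search(["Apple", "Banana"], " app ")
```

Logging the input, each transformation, and the output gives the model the actual data flow instead of your description of it.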

17. Mock Up Design Changes Using Claude Artifacts

Sometimes you may need to redesign a page, and if your code is already bulky, each change may take longer, leading to frustration if the result is not as expected.

To avoid this:

- Create a mockup of the redesign and iterate quickly in the prompt, checking the result in the Artifact.

- Example Prompt: "Make a simple HTML mockup/sample of << DESCRIBE WHAT YOU WANT TO REDESIGN >>."

When it matches your expectations, ask Claude to write a prompt for Claude-Dev that applies those changes and describes exactly what needs to be done.

Alternative Approach:

- Copy the HTML code in the Artifact and ask Claude-Dev to create a similar design.

18. Alternative Approaches When Stuck

Sometimes Claude is unable to handle a particular problem properly. This can occur with complex problems or even simple ones where it goes in circles. Here's what you can do:

Use a Smarter Model:

- Use a model like OpenAI's o1-mini, provide necessary information (code snippets), describe the problem, and ask for advice.

- Then, copy the result to Claude-Dev and let it fix the issue using the given advice. This works well when Claude gets stuck.

Analyze First:

- Ask Cline to analyze the problem first and explicitly instruct it not to code, only to analyze. This allows deeper reflection and understanding before coding.

- You may notice it begins to read more files to get a full picture of the problem's cause.

Write Test Cases:

- Let Claude write a test case with a clearly specified scenario where it does not work.

- Ask it to fix the code so that this case works. This helps avoid multiple iterations of non-working code.
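A test case for one precisely specified scenario might look like the sketch below. The `slugify` function is a hypothetical example and is shown in its already-fixed form; in practice the test comes first and fails until the code is corrected.

```python
import unittest


def slugify(title: str) -> str:
    """Hypothetical function under test: lowercase, hyphen-separated slug."""
    # Replace every non-alphanumeric character with a space, then split on whitespace.
    words = "".join(ch if ch.isalnum() else " " for ch in title.lower()).split()
    return "-".join(words)


class SlugifyRegressionTest(unittest.TestCase):
    def test_repeated_separators_collapse(self):
        # The exact scenario that did not work: punctuation followed by several spaces.
        self.assertEqual(slugify("Hello,   World!"), "hello-world")


if __name__ == "__main__":
    unittest.main()
```

With the scenario pinned down as a test, "make this test pass without changing the test" is a prompt with an unambiguous finish line.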

Manual Fix:

- Sometimes, solving the problem yourself is faster. For example, a small bug like a parameter change can be quickly fixed manually.

Example:

I gave a bug to OpenAI's o1-mini and Claude to fix. The response from o1-mini was surprisingly long and might not have solved the small problem effectively. Solving it manually was much faster.

19. Conclusion

Putting it all together:

By following these best practices, you'll be well-equipped to harness the full potential of Cline. These strategies improve efficiency and code quality and keep project development cost-effective and scalable.

Happy coding!