The Economy of AI Multi-Agent Systems and How to Save Money on Token Usage

Wondering how to manage costs while using multiple AI agents? This blog explores critical strategies for tracking token consumption in AutoGen interactions. From understanding token accumulation in two-agent and group chats to implementing monitoring middleware, learn key practices for optimizing...

Have you ever wondered how multiple AI agents can interact seamlessly while keeping costs under control? When working with multiple AI agents in AutoGen, understanding and managing token consumption is critical to both cost optimisation and system performance. Let's look at how tokens are consumed in different conversation patterns and explore some strategies for efficient token usage.

Token flow in different conversation patterns

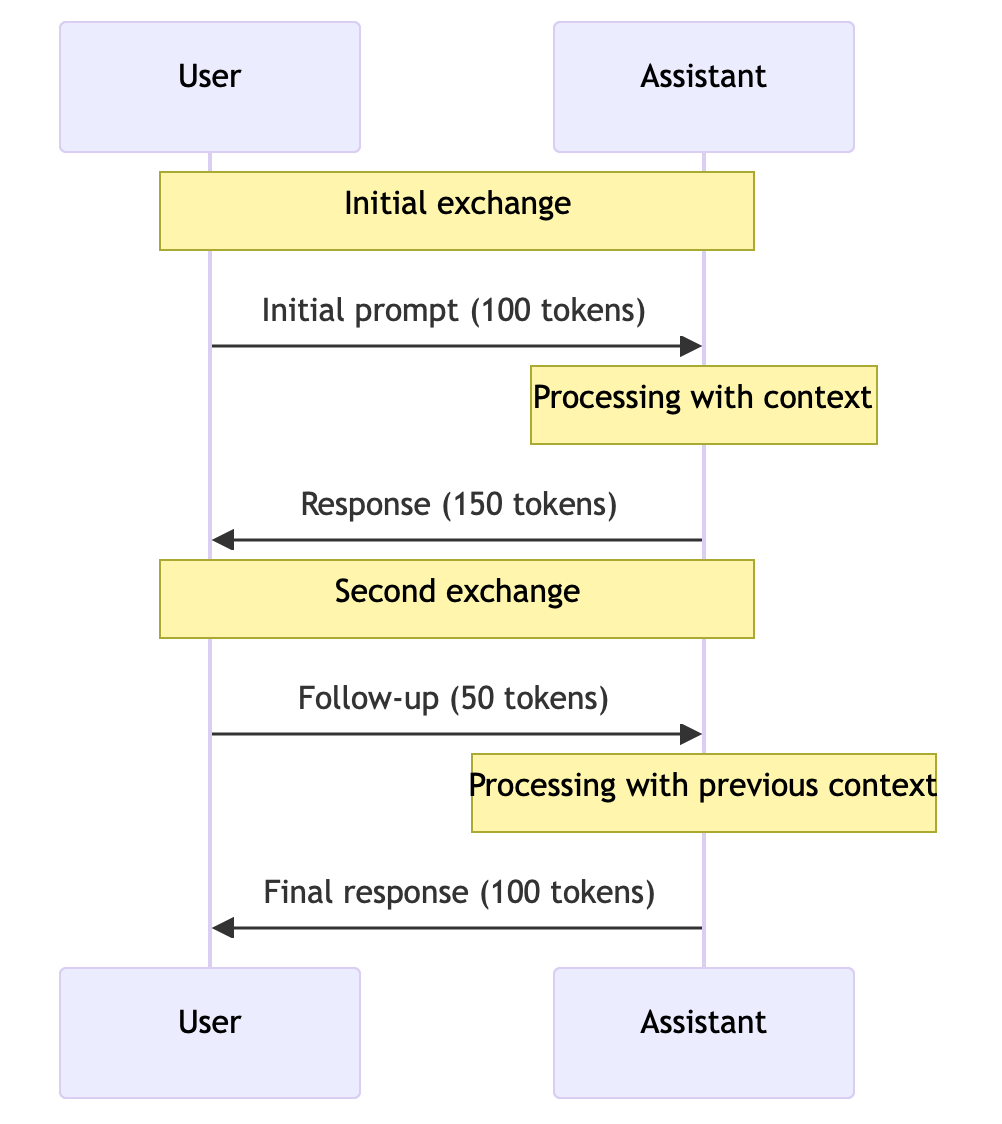

Two-agent conversation pattern

How do tokens add up in a simple interaction between you and an assistant?

Token accumulation:

- First exchange: 250 tokens (100 input + 150 output)

- Second exchange: 150 tokens (50 input + 100 output)

- Total: 400 tokens

Isn't it fascinating how each interaction builds on the previous one?

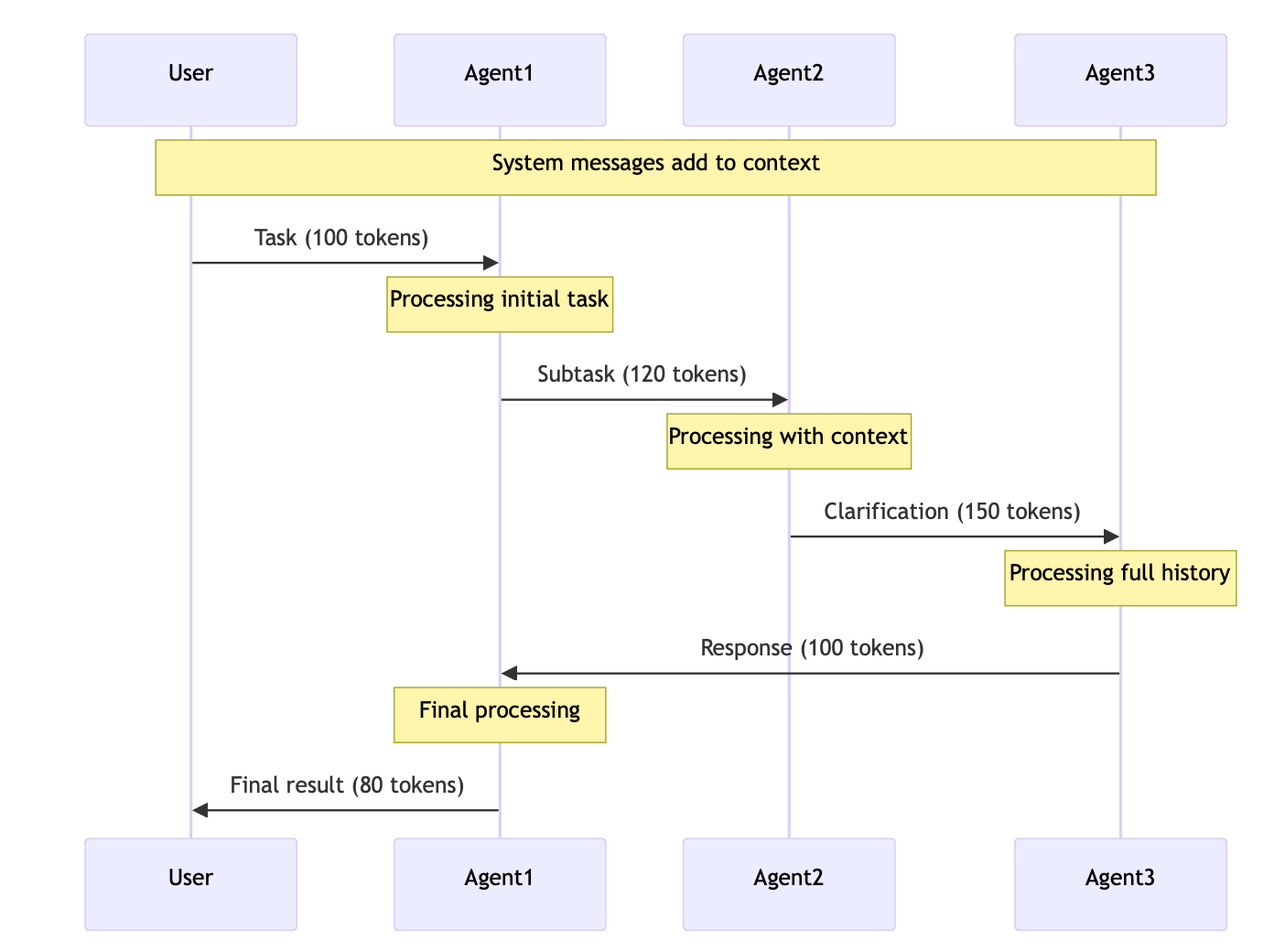

Group chat pattern

What happens when multiple agents join the conversation? Let's have a look:

Token accumulation:

- Initial task: 100 tokens

- Agent1 processing: 220 tokens (100 input + 120 output)

- Agent2 processing: 370 tokens (220 input + 150 output)

- Agent3 processing: 470 tokens (370 input + 100 output)

- Final result: 550 tokens (470 input + 80 output)

Can you see how quickly tokens can accumulate in a group chat scenario?
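
The figures in this walk-through are running context sizes: each one is the prompt an agent receives plus the reply it adds. What you are billed for is the sum of every call, which is larger. The following is a minimal sketch of that arithmetic, assuming each agent call resends the full shared history and ignoring system messages. `GroupChatTokenMath` and `Replay` are hypothetical names used for illustration, not AutoGen APIs.

```csharp
using System;
using System.Collections.Generic;

// Task of 100 tokens, followed by the four replies from the walk-through.
var (context, billed) = GroupChatTokenMath.Replay(100, new[] { 120, 150, 100, 80 });

Console.WriteLine(string.Join(", ", context)); // 220, 370, 470, 550
Console.WriteLine(billed);                      // 1610

// Hypothetical helper, not part of AutoGen.
public static class GroupChatTokenMath
{
    // Replays a chat in which every call resends the whole shared history.
    // Returns the context size after each turn and the total tokens billed.
    public static (List<int> Context, int Billed) Replay(int taskTokens, IEnumerable<int> replyTokens)
    {
        var context = new List<int>();
        int history = taskTokens; // tokens that every later call must resend
        int billed = 0;

        foreach (int output in replyTokens)
        {
            billed += history + output; // prompt (the history so far) + completion
            history += output;          // the reply joins the shared history
            context.Add(history);
        }

        return (context, billed);
    }
}
```

The final context is 550 tokens, but the four calls together consume 1,610 tokens (1,160 prompt + 450 completion), because the earlier messages are paid for again on every call.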

Token tracking implementation

But how do you keep track of all these tokens?

AutoGen provides a middleware-based approach to tracking token usage. Let's explore a token counter middleware implementation:

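    using System.Collections.Generic;
    using System.Linq;
    using System.Threading;
    using System.Threading.Tasks;
    using AutoGen.Core;

    // Records every model response that passes through an agent so that token usage can be summed later.
    public class TokenCounterMiddleware : IMiddleware
    {
        // ChatCompletionResponse is the response type as named in this post; substitute the type
        // your model client returns. It must expose a Usage property.
        private readonly List<ChatCompletionResponse> responses = new List<ChatCompletionResponse>();

        public string? Name => nameof(TokenCounterMiddleware);

        public async Task<IMessage> InvokeAsync(
            MiddlewareContext context,
            IAgent agent,
            CancellationToken cancellationToken = default)
        {
            // Forward the call to the wrapped agent unchanged.
            var reply = await agent.GenerateReplyAsync(context.Messages, context.Options, cancellationToken);

            // Only replies that still carry the raw response include usage data.
            if (reply is IMessage<ChatCompletionResponse> message)
            {
                responses.Add(message.Content);
            }

            return reply;
        }

        public int GetCompletionTokenCount() => responses.Sum(r => r.Usage.CompletionTokens);

        public int GetPromptTokenCount() => responses.Sum(r => r.Usage.PromptTokens);

        public int GetTotalTokenCount() => responses.Sum(r => r.Usage.TotalTokens);
    }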

Token usage example

Let's see how this works in a real multi-agent scenario:

    // Create an agent and a token counter.
    var assistant = new AssistantAgent("assistant", modelClient);
    var tokenCounter = new TokenCounterMiddleware();

    // RegisterMiddleware returns a new wrapped agent, so keep the result and send messages through it.
    var trackedAssistant = assistant.RegisterMiddleware(tokenCounter);

    // Track token usage throughout the conversation.
    await trackedAssistant.SendAsync("Explain quantum computing");

    Console.WriteLine($"Total tokens used: {tokenCounter.GetTotalTokenCount()}");

    // 0.002 per 1,000 tokens is an example rate; use your model's actual pricing.
    Console.WriteLine($"Estimated cost: ${tokenCounter.GetTotalTokenCount() * 0.002 / 1000:F4}");
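
The estimate above applies one flat rate to the total. Many providers price prompt and completion tokens differently, so it can help to cost the two counts separately. Here is a minimal sketch under that assumption. `TokenCost`, `Estimate`, and both rates are hypothetical placeholders, not real prices or AutoGen APIs.

```csharp
using System;

// Hypothetical per-1,000-token prices; substitute your provider's current rates.
const decimal PromptRatePer1K = 0.0015m;
const decimal CompletionRatePer1K = 0.002m;

// Example counts: 1,200 prompt tokens and 300 completion tokens.
decimal cost = TokenCost.Estimate(1200, 300, PromptRatePer1K, CompletionRatePer1K);
Console.WriteLine($"Estimated cost: ${cost:F4}");

// Hypothetical helper, not part of AutoGen.
public static class TokenCost
{
    // Costs prompt and completion tokens at their own per-1,000-token rates.
    public static decimal Estimate(
        int promptTokens,
        int completionTokens,
        decimal promptRatePer1K,
        decimal completionRatePer1K)
        => promptTokens / 1000m * promptRatePer1K
         + completionTokens / 1000m * completionRatePer1K;
}
```

With the middleware from this post, the two counts would come from `tokenCounter.GetPromptTokenCount()` and `tokenCounter.GetCompletionTokenCount()`. The sketch uses `decimal` rather than `double` to avoid binary rounding in money calculations.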

Token usage patterns

Two-agent conversations

How are tokens counted in a two-agent conversation?

- Input tokens:
  - Assistant system message
  - User message
  - Previous conversation history

- Output tokens:
  - Assistant's reply

- Accumulation:
  - Each subsequent call includes all previous messages in its prompt tokens.
  - The prompt for each call grows roughly linearly with conversation length, so cumulative usage grows faster than linearly.
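
The accumulation rule can be written out as a short worked formula. This is a simplified model, assuming a fixed system message of $s$ tokens, user messages of $u$ tokens each, and replies of $r$ tokens each. The symbols are introduced here for illustration only. The prompt for the $k$-th call, $P_k$, and the prompt tokens summed over $n$ calls are then:

```latex
\begin{aligned}
P_k &= s + k\,u + (k-1)\,r \\
\sum_{k=1}^{n} P_k &= n\,s + u\,\frac{n(n+1)}{2} + r\,\frac{n(n-1)}{2}
\end{aligned}
```

For example, with $s = 50$, $u = 100$, $r = 150$ and $n = 3$, the three prompts are 150, 400 and 650 tokens, for 1,200 prompt tokens in total, plus $3 \times 150 = 450$ completion tokens. Each individual prompt grows linearly in $k$, but the $n^2$ terms in the sum are why long conversations get expensive.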

Group chat scenarios

Group chats introduce more complexity. How do tokens accumulate?

- System context:
  - Every agent has its own system message
  - Agent descriptions are included in the context

- Message routing:
  - A message between agents counts as output tokens for the sender and as input tokens for every agent that later reads it
  - Each agent sees the full conversation history

- Token multiplication:
  - Total tokens grow faster as more agents join
  - Each agent's response adds to every other agent's context

Can you see why managing tokens in group chats requires a more strategic approach?

Best practices for token management

Wondering how to optimise your token usage? Here are some best practices:

- Use middleware for monitoring
  - Add token counting middleware to each agent
  - Track both prompt and completion tokens
  - Monitor costs in real time

- Implement conversation history management
  - Clear conversation history when appropriate
  - Use summarisation for long conversations
  - Implement message pruning when the token count exceeds a threshold

- Optimise agent system messages
  - Keep system prompts concise
  - Remove unnecessary context
  - Use role-specific instructions only when necessary

- Monitor and log token usage
  - Track token usage per agent
  - Monitor the cost of each call
  - Set up alerts for high token usage
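
Message pruning against a token threshold can be sketched as follows. This is one possible approach, not an AutoGen feature. It assumes each message already carries a token count (for example, from your tokenizer), and it keeps system messages plus the most recent turns that fit the budget. `ChatTurn` and `HistoryPruner` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

var history = new List<ChatTurn>
{
    new ChatTurn("system", "You are a helpful assistant.", 20),
    new ChatTurn("user", "First question", 100),
    new ChatTurn("assistant", "First answer", 150),
    new ChatTurn("user", "Follow-up", 50),
    new ChatTurn("assistant", "Second answer", 100),
};

var pruned = HistoryPruner.Prune(history, maxTokens: 200);
Console.WriteLine(string.Join(", ", pruned.Select(t => $"{t.Role}:{t.Tokens}")));
// system:20, user:50, assistant:100

// Hypothetical message shape; Tokens is assumed to be precomputed.
public record ChatTurn(string Role, string Text, int Tokens);

// Hypothetical helper, not part of AutoGen.
public static class HistoryPruner
{
    // Keeps all system messages plus the newest turns that fit within maxTokens.
    public static List<ChatTurn> Prune(IReadOnlyList<ChatTurn> history, int maxTokens)
    {
        var system = history.Where(t => t.Role == "system").ToList();
        int budget = maxTokens - system.Sum(t => t.Tokens);

        var kept = new List<ChatTurn>();

        // Walk backwards so the most recent turns are considered first.
        for (int i = history.Count - 1; i >= 0; i--)
        {
            var turn = history[i];
            if (turn.Role == "system") continue;
            if (turn.Tokens > budget) break; // stop at the first turn that no longer fits
            budget -= turn.Tokens;
            kept.Add(turn);
        }

        kept.Reverse(); // restore chronological order
        return system.Concat(kept).ToList();
    }
}
```

Stopping at the first turn that does not fit keeps the retained history contiguous, which avoids replies being left without the questions that prompted them. Summarising the dropped turns instead of discarding them is a natural extension.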

Conclusion

Understanding and managing token usage is essential when working with multiple agents. Token counting patterns vary significantly between two-agent conversations and group chats, each with its own considerations for optimisation. By implementing proper monitoring through middleware and following best practices, you can optimise costs and maintain efficient conversations between agents.

Remember that token usage has a direct impact on both performance and cost:

- In two-agent conversations, the prompt for each call grows linearly with the number of turns.

- Group chats grow faster still, because every participant's call resends the shared history along with its own system message.

- System messages and conversation history contribute significantly to token usage.

- Regular monitoring and management of token usage is critical for cost control.