ChatGPT / GPT-4 Fine-Tuning 101: A Beginner's Guide to Fine-Tuning


Fine-tuning is a powerful technique that allows you to optimize OpenAI's text generation models for specific tasks, providing:

- Higher Quality Results: Fine-tuning typically delivers better results than prompting alone, because the model can learn from far more examples than fit in a single prompt.

- Token Savings: With a fine-tuned model, you can reduce the length of prompts, leading to cost savings.

- Lower Latency: A fine-tuned model needs shorter prompts, and shorter prompts take less time to process, so requests tend to return faster.

Why Fine-Tune?

OpenAI's models are pre-trained on a vast corpus of text and are versatile out of the box. To use them effectively, you usually include instructions, and sometimes several examples, in the prompt. Showing the model how to perform a task through a few in-prompt examples is often called "few-shot learning." Fine-tuning goes a step further by training the model itself on additional task-specific examples.

However, fine-tuning offers significant advantages over few-shot learning:

- More Examples: Fine-tuning allows you to train on more examples than can fit into a single prompt, resulting in better performance across a wide range of tasks.

- Efficiency: Once fine-tuned, a model requires fewer examples in the prompt, reducing token usage and improving request efficiency.
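To make the efficiency point concrete, here is a minimal sketch comparing a few-shot prompt with the shorter prompt a fine-tuned model could get away with. The helper names (`few_shot_messages`, `prompt_chars`) and the demo texts are made up for illustration, and character count is only a rough stand-in for token count.

```python
def few_shot_messages(system, demos, question):
    """Build a chat prompt that shows the model `demos` before the real question."""
    messages = [{"role": "system", "content": system}]
    for user_text, assistant_text in demos:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": question})
    return messages


def prompt_chars(messages):
    """Total characters of content: a crude proxy for prompt size, not a token count."""
    return sum(len(m["content"]) for m in messages)


system = "Marv is a factual chatbot that is also sarcastic."
demos = [  # hypothetical demonstrations
    ("What's the capital of France?", "Paris, as if everyone doesn't know that already."),
    ("How many days are in a week?", "Seven. Groundbreaking, I know."),
]
question = "Who wrote 'Romeo and Juliet'?"

with_demos = few_shot_messages(system, demos, question)  # few-shot prompting
without_demos = few_shot_messages(system, [], question)  # what a fine-tuned model might need

print(prompt_chars(with_demos), "characters with in-prompt examples")
print(prompt_chars(without_demos), "characters without them")
```

Every request to the base model pays for the demonstrations again; a fine-tuned model has already absorbed them during training.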

How Fine-Tuning Works

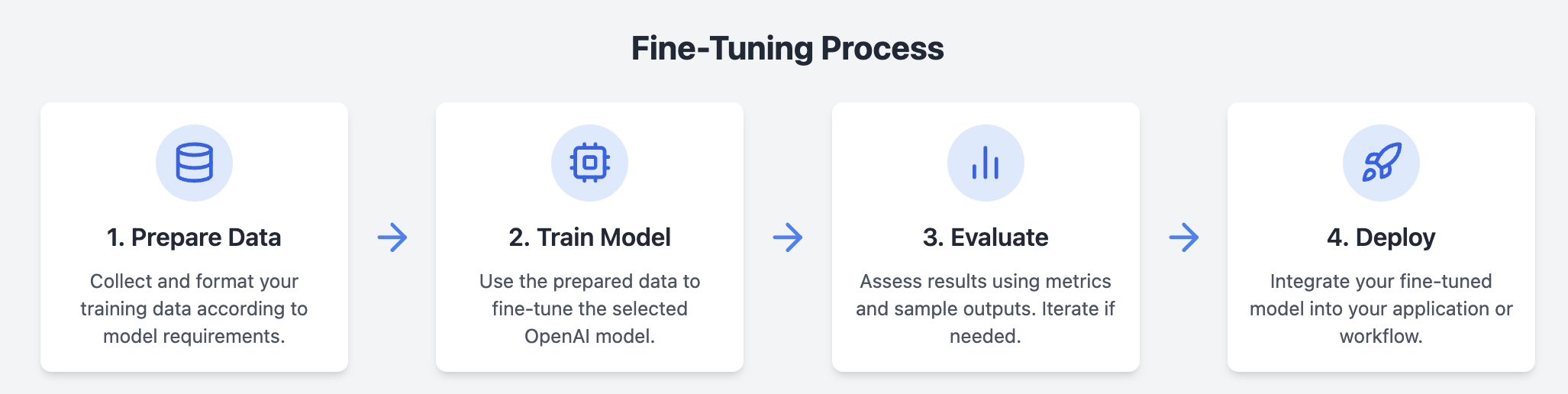

Fine-tuning involves several steps:

- Prepare and Upload Training Data: Collect and format your data for training.

- Train a New Fine-Tuned Model: Use the prepared data to fine-tune the model.

- Evaluate and Iterate: Assess the results and adjust the training data if needed.

- Deploy Your Fine-Tuned Model: Use your customized model in production.

Key Considerations

☞ Choosing the Right Model

Fine-tuning is available for several models, including:

- gpt-4o-mini-2024-07-18 (Recommended)

- gpt-3.5-turbo variants

- babbage-002 and davinci-002

For most users, the gpt-4o-mini model strikes the right balance between performance, cost, and ease of use.

☞ When to Fine-Tune

Before opting for fine-tuning, it's advisable to:

- Experiment with Prompt Engineering: Many tasks can be solved with effective prompting.

- Consider Prompt Chaining: Break complex tasks into multiple prompts.

- Explore Function Calling: Use structured outputs to achieve your goals.

☞ Fine-tuning is ideal when:

- Styling and Formatting: You need consistent tone or format.

- Reliability: The model must follow complex prompts accurately.

- Edge Cases: Specific handling of rare scenarios is required.

- New Skills: You need the model to perform tasks that are difficult to describe in a prompt.

#1 - Preparing Your Dataset

Ensure your training data is diverse and mirrors the conversations or tasks you expect the model to handle. The data should follow the format required by the target model:

- Chat format for gpt-4o-mini and gpt-3.5-turbo.

- Prompt-completion pair format for babbage-002 and davinci-002.

Example Data Format

For a chatbot that provides sarcastic responses, your data might look like this:

    {"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What's the capital of France?"}, {"role": "assistant", "content": "Paris, as if everyone doesn't know that already."}]}

You can find out more about the data format in OpenAI's fine-tuning guide.
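Training files are uploaded as JSONL, with one example per line. Before uploading, it can help to sanity-check each example. The sketch below is a simplified check, not OpenAI's official validator: the function names are hypothetical, and it only accepts the three roles used in the example above (real chat data may also contain tool messages).

```python
import json

# Assumption: only these roles appear in the dataset; extend if yours uses tools.
VALID_ROLES = {"system", "user", "assistant"}


def validate_chat_example(example):
    """Return a list of problems found in one chat-format training example."""
    messages = example.get("messages")
    if not isinstance(messages, list) or not messages:
        return ["missing or empty 'messages' list"]
    problems = []
    for i, msg in enumerate(messages):
        if msg.get("role") not in VALID_ROLES:
            problems.append(f"message {i}: unexpected role {msg.get('role')!r}")
        if not isinstance(msg.get("content"), str):
            problems.append(f"message {i}: 'content' must be a string")
    if not any(m.get("role") == "assistant" for m in messages):
        problems.append("no assistant message for the model to learn from")
    return problems


def to_jsonl(examples):
    """Serialize examples as JSONL: one JSON object per line."""
    return "".join(json.dumps(ex) + "\n" for ex in examples)


example = {
    "messages": [
        {"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."},
        {"role": "user", "content": "What's the capital of France?"},
        {"role": "assistant", "content": "Paris, as if everyone doesn't know that already."},
    ]
}

if not validate_chat_example(example):
    print(to_jsonl([example]), end="")
```

Writing the output of `to_jsonl` to a file gives you something ready for the upload step.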

#2 - Training and Testing

After collecting data, split it into training and testing portions. Use the training data to fine-tune the model and the test data to evaluate performance.
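A minimal sketch of such a split, assuming your examples are already loaded into a Python list. The 80/20 ratio, the fixed seed, and the function name are illustrative choices, not OpenAI recommendations.

```python
import random


def split_examples(examples, test_fraction=0.2, seed=0):
    """Shuffle a copy of `examples` and return (train, test) lists."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_test = max(1, int(len(shuffled) * test_fraction))  # keep at least one test example
    return shuffled[n_test:], shuffled[:n_test]


examples = [{"id": i} for i in range(10)]  # placeholder records
train, test = split_examples(examples)
print(len(train), "training examples,", len(test), "test examples")
```

Shuffling before splitting matters when the data was collected in some order (by date or by topic, say), because otherwise the test portion may not resemble the training portion.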

Token Limits

Each model has specific token limits for both inference and training contexts. For instance, gpt-4o-mini (as of Aug/2024) supports up to 128,000 tokens for inference and up to 65,536 tokens per training example; longer training examples are truncated to fit.
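A quick way to spot examples that might exceed the training limit is sketched below. It rests on two loudly stated assumptions: a rough rule of thumb of about 4 characters per token for English text (not a real tokenizer, so treat results as approximate), and a per-example training limit of 65,536 tokens for gpt-4o-mini, which you should verify against the current documentation. The function names are hypothetical.

```python
# Assumption: ~4 characters per token is a rough heuristic for English text.
CHARS_PER_TOKEN = 4
# Assumption: per-example training limit for gpt-4o-mini; check the current docs.
MAX_TRAINING_TOKENS = 65_536


def rough_token_count(example):
    """Very rough token estimate for one chat-format example."""
    text = "".join(msg["content"] for msg in example["messages"])
    return len(text) // CHARS_PER_TOKEN + 1


def find_oversized(examples, limit=MAX_TRAINING_TOKENS):
    """Return the indexes of examples whose estimate exceeds `limit`."""
    return [i for i, ex in enumerate(examples) if rough_token_count(ex) > limit]


examples = [
    {"messages": [{"role": "user", "content": "What's the capital of France?"}]},
    {"messages": [{"role": "user", "content": "x" * 400_000}]},  # deliberately huge
]
print(find_oversized(examples))
```

For an exact count you would swap `rough_token_count` for a real tokenizer; the heuristic is only good enough to flag obvious outliers.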

Estimating Costs

Fine-tuning costs depend on the model, the number of tokens in the training file, and the number of epochs. For example, fine-tuning gpt-4o-mini on a 100,000-token training file for 3 epochs costs about $0.90 USD (as of Aug/2024) after the free period ends.
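The usual back-of-the-envelope estimate is price per token × tokens in the training file × number of epochs. The sketch below assumes a training price of $3.00 per 1M tokens for gpt-4o-mini and 3 epochs; both are assumptions based on pricing around Aug/2024, so check the current pricing page before relying on the numbers. Under those assumptions, a 100,000-token file comes to the $0.90 figure quoted above.

```python
def estimate_training_cost(tokens_in_file, epochs=3, usd_per_million_tokens=3.00):
    """Estimate fine-tuning cost in USD.

    Both defaults are assumptions (gpt-4o-mini pricing around Aug/2024).
    """
    billed_tokens = tokens_in_file * epochs  # each epoch passes over the whole file
    return billed_tokens * usd_per_million_tokens / 1_000_000


cost = estimate_training_cost(100_000)
print(f"Estimated cost: ${cost:.2f}")  # prints "Estimated cost: $0.90"
```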

Uploading and Fine-Tuning

After preparing your dataset, upload it using the OpenAI Files API, then create a fine-tuning job through the OpenAI SDK.
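The upload step might look like the sketch below. It wraps the SDK's `client.files.create` call with `purpose="fine-tune"`. The helper name `upload_training_file` and the path `train.jsonl` are hypothetical, and the client is passed in as an argument so the function can be tried without network access.

```python
def upload_training_file(client, path):
    """Upload a JSONL training file and return the file ID assigned by OpenAI."""
    with open(path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")
    return uploaded.id


# Example usage (requires the `openai` package and an OPENAI_API_KEY):
#
#   from openai import OpenAI
#   file_id = upload_training_file(OpenAI(), "train.jsonl")  # hypothetical path
#   print(file_id)
```

The returned file ID is the value you pass as `training_file` when creating the fine-tuning job: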

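    from openai import OpenAI

    # Reads the API key from the OPENAI_API_KEY environment variable.
    client = OpenAI()

    # Start a fine-tuning job from a previously uploaded training file.
    client.fine_tuning.jobs.create(
        training_file="file-abc123",  # file ID returned by the upload
        model="gpt-4o-mini-2024-07-18",
    )

#3 - Analyzing Results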

Evaluate your fine-tuned model using the training metrics reported for the job, such as training loss and token accuracy. Additionally, generate samples from both the base and fine-tuned models on your test data and compare the results side by side.
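A side-by-side comparison can be sketched as follows. The helpers `job_summary` and `compare_models` are hypothetical names; they wrap the SDK's `fine_tuning.jobs.retrieve` and `chat.completions.create` calls and take the client as an argument. The job ID in the usage comment is a placeholder.

```python
def job_summary(client, job_id):
    """Fetch a fine-tuning job and return its status and resulting model name."""
    job = client.fine_tuning.jobs.retrieve(job_id)
    return {"status": job.status, "fine_tuned_model": job.fine_tuned_model}


def compare_models(client, base_model, tuned_model, messages):
    """Send the same prompt to both models and return {model_name: reply}."""
    outputs = {}
    for name in (base_model, tuned_model):
        response = client.chat.completions.create(model=name, messages=messages)
        outputs[name] = response.choices[0].message.content
    return outputs


# Example usage (requires the `openai` package and an OPENAI_API_KEY):
#
#   from openai import OpenAI
#   client = OpenAI()
#   summary = job_summary(client, "ftjob-abc123")  # placeholder job ID
#   if summary["status"] == "succeeded":
#       prompt = [{"role": "user", "content": "What's the capital of France?"}]
#       print(compare_models(client, "gpt-4o-mini-2024-07-18",
#                            summary["fine_tuned_model"], prompt))
```

Running the comparison over the held-out test examples, rather than a single prompt, gives a fairer picture of whether fine-tuning helped.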

Iteration and Improvement

If the model's performance isn’t satisfactory, consider revising your training data, adding more examples, or tweaking hyperparameters like the number of epochs.
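As an illustration of tweaking the epoch count, the sketch below passes a `hyperparameters` dictionary with an `n_epochs` key when creating the job. This reflects the SDK's job-creation parameters as documented around the time of writing and may have changed since, so treat it as an assumption and check the current API reference. The helper name and default model are illustrative.

```python
def create_job_with_epochs(client, training_file_id, n_epochs,
                           model="gpt-4o-mini-2024-07-18"):
    """Create a fine-tuning job with an explicit number of epochs."""
    return client.fine_tuning.jobs.create(
        training_file=training_file_id,
        model=model,
        # Assumption: `hyperparameters` accepts an `n_epochs` key.
        hyperparameters={"n_epochs": n_epochs},
    )


# Example usage (requires the `openai` package and an OPENAI_API_KEY):
#
#   from openai import OpenAI
#   job = create_job_with_epochs(OpenAI(), "file-abc123", n_epochs=2)
#   print(job.id)
```

Change one thing at a time between runs (data or epochs, not both) so you can tell which change made the difference.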

#4 - Integrations

OpenAI allows integration with third-party tools like Weights and Biases (W&B) to track fine-tuning jobs and metrics.

    curl -X POST \
        -H "Content-Type: application/json" \
        -H "Authorization: Bearer $OPENAI_API_KEY" \
        -d '{
            "model": "gpt-4o-mini-2024-07-18",
            "training_file": "file-ABC123",
            "integrations": [
                {
                    "type": "wandb",
                    "wandb": {
                        "project": "custom-wandb-project"
                    }
                }
            ]
        }' https://api.openai.com/v1/fine_tuning/jobs

Conclusion

Fine-tuning can significantly enhance the performance of OpenAI models for specific tasks, offering improvements in quality, efficiency, and cost. By following the outlined steps and best practices, you can create a model tailored to your unique requirements.