Chat with Logseq and ChatGPT

Unlock the power of Logseq with AI-driven semantic search in this tutorial! Learn how to integrate an LLM chat app that allows intuitive queries of your notes using natural language. Forget the exact keywords? No problem! Effortlessly retrieve valuable information by setting up the Pathway API an...

One of the main benefits of using Logseq (and probably other open-source tools) is its open, text-based format (Markdown) that can be easily understood by large language models (LLMs) and human beings alike, without the need for additional tools. We will leverage this feature by integrating an LLM-powered chat, such as GPT or any other model of your choice, to search your Logseq notes using natural language.

If you have never heard about Logseq, you can read about it in my previous posts or simply check their documentation.

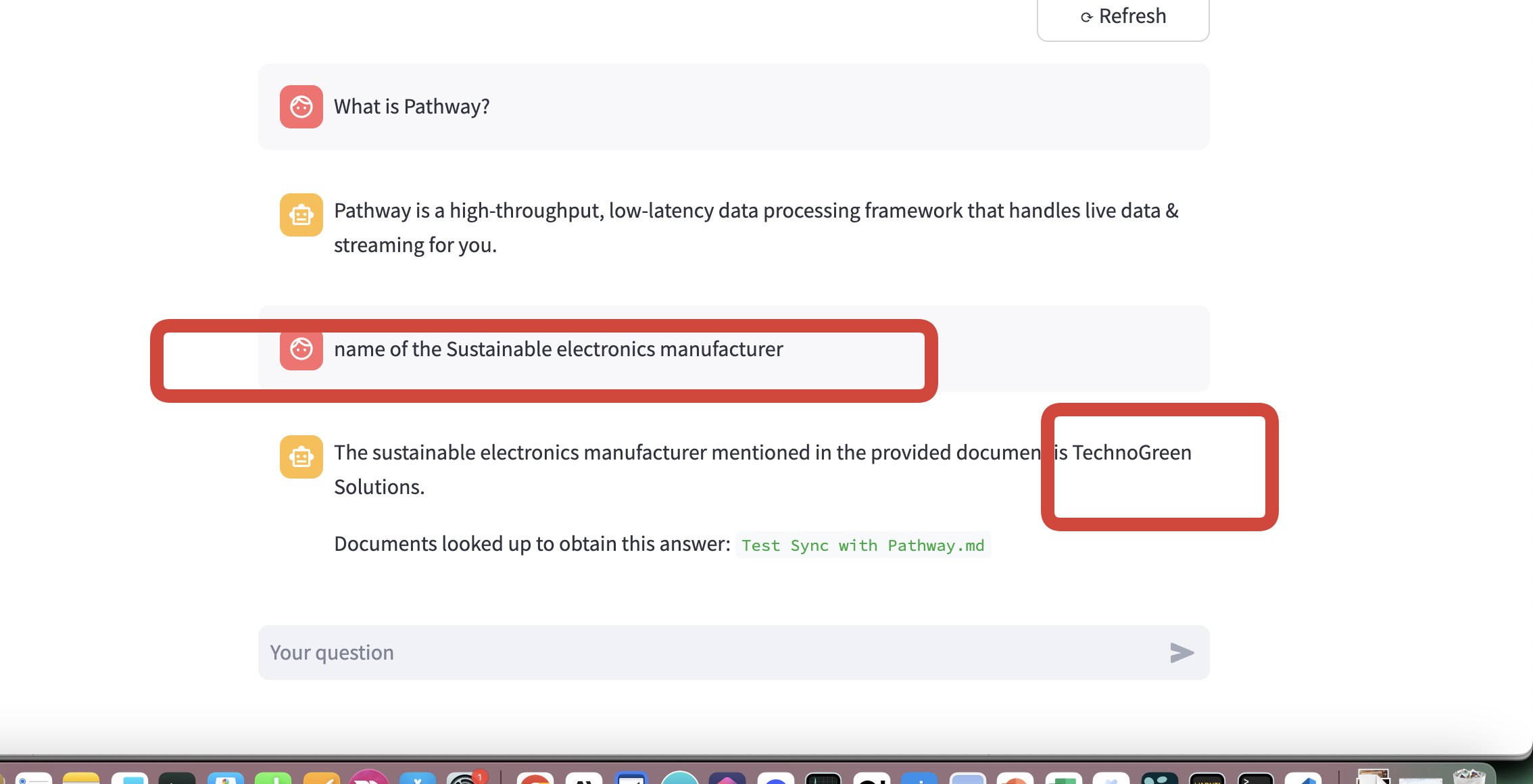

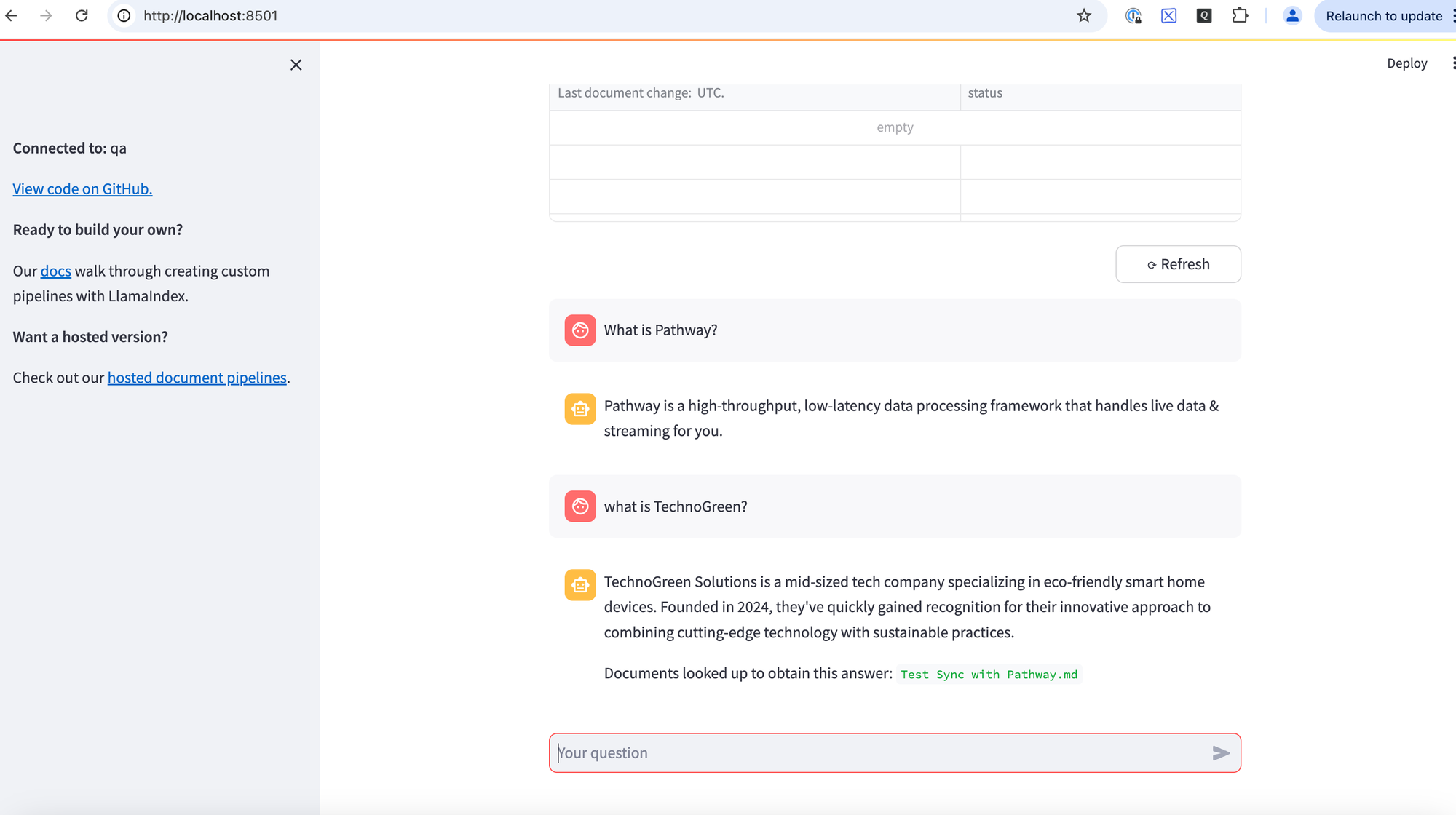

Example:

Let's say you made a note about an innovative company named TechnoGreen with the following profile:

TechnoGreen Solutions is a mid-sized tech company specializing in eco-friendly smart home devices. Founded in 2020, they've quickly gained recognition for their innovative approach to combining cutting-edge technology with sustainable practices.

Now, imagine that a year later you have forgotten the name of the company. How do you find it? Logseq's native search is quite powerful as long as you remember the exact words used in the note. But what if you have forgotten the keywords, never tagged the note, or search with synonyms? In such cases, Logseq may not find it.

This is where AI becomes your friend. The power of AI lies in finding information that is semantically related to what you're searching for. By the end of this tutorial, you will be able to locate this company by simply mentioning roughly what it is about. In the example below, I search for a "sustainable electronics manufacturer," and the LLM finds the note even though it never mentions "electronics" or "manufacturer."
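To make the limitation concrete, here is a small shell sketch that runs a plain keyword search over the example note. The temporary file and the count_matches helper are made up for this illustration, and grep merely stands in for keyword matching in general; it is not how Logseq implements its search.

```shell
#!/usr/bin/env bash
# Hypothetical scratch file holding the example note from above.
note="$(mktemp)"
cat > "$note" <<'EOF'
TechnoGreen Solutions is a mid-sized tech company specializing in eco-friendly smart home devices. Founded in 2020, they've quickly gained recognition for their innovative approach to combining cutting-edge technology with sustainable practices.
EOF

# Print how many lines of a file contain a term (case-insensitive).
# grep exits non-zero when nothing matches, so "|| true" keeps the helper quiet.
count_matches() {
  grep -ci -- "$1" "$2" || true
}

# The full query and two of its three words are absent from the note.
for term in 'sustainable electronics manufacturer' 'electronics' 'manufacturer' 'sustainable'; do
  printf '%-40s %s\n' "$term" "$(count_matches "$term" "$note")"
done
```

Only "sustainable" matches. A keyword search for the whole phrase comes back empty, which is exactly the gap that embedding-based retrieval is meant to close.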

Ok, so let's get started.

The Ingredients

For this solution, you will need:

- Logseq up and running (no API required)

- Pathway – An open-source Retrieval-Augmented Generation (RAG) solution that embeds documents (your Markdown files managed by Logseq) and updates them in real time as you make changes from any device (Mac, iOS, Android, etc.). This will serve as our retrieval engine, powering the semantic search. Later in this tutorial, it will be referred to as the "Pathway API." Pathway GitHub repository: https://github.com/pathwaycom/llm-app

- A Simple Chat App – This app communicates with Pathway and allows you to chat with it similarly to how you use ChatGPT, with the only difference being that the ground truth in this case is your notes. Later referred to as the "Chat App." Chat App GitHub repository: https://github.com/pathway-labs/realtime-indexer-qa-chat

High-Level Steps

- Install and run the Pathway API

- Install and run the Chat App

- Test the integration with real-time updates in Logseq

Step-by-Step Guide

For this setup, I will use Docker to get the Pathway app up and running.

1. Clone the Repository

First, clone the Pathway repository:

git clone https://github.com/pathwaycom/llm-app.git

cd llm-app/examples/pipelines/demo-question-answering

2. Set Up the Environment

Add a .env file with your OpenAI API key.

# Create a .env file in the current directory

echo "OPENAI_API_KEY=your_openai_api_key_here" > .env

3. Link a Test File from Your Logseq Directory

In this step, I will create a hard link to a file in my iCloud (you can use Google Drive or any other drive you use to sync Logseq across devices). This file will be updated in real-time in Logseq, and the changes will be reflected immediately in our chat app.
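One caveat worth checking first: a hard link can only be created when both paths live on the same filesystem. The sketch below is a rough preflight that compares the mount points reported by df -P. The helper names mount_of and same_filesystem are my own, and the comparison assumes the usual six-column df -P layout.

```shell
#!/usr/bin/env bash
# Print the mount point that holds a path (column 6 onward of `df -P`,
# so mount points containing spaces survive).
mount_of() {
  df -P "$1" 2>/dev/null | awk 'NR == 2 {
    for (i = 6; i <= NF; i++) printf "%s%s", $i, (i < NF ? " " : "\n")
  }'
}

# Succeed only if both paths exist and report the same mount point.
same_filesystem() {
  local a b
  a="$(mount_of "$1")"
  b="$(mount_of "$2")"
  [ -n "$a" ] && [ "$a" = "$b" ]
}

# Usage (paths are placeholders):
#   same_filesystem /path/to/your/Logseq/Documents/pages ./data \
#     || echo "Different filesystems: a hard link will not work here" >&2
```

If the check fails, `ln` will refuse to create the link, and you will need another way to get the file into the data folder.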

# Change to the data folder of the Pathway API. This folder will be automatically synchronized with Pathway.

cd data

# Create a hard link to your Logseq file

ln /path/to/your/Logseq/Documents/pages/logseq-ai-chat.md .

4. Build and Run the Pathway API with Docker

# Go back up from data to the demo-question-answering directory

cd ..

# Build the Docker image

docker build -t qa .

# Run the Docker container, mount the `data` folder, and expose port 8000

docker run -v "$(pwd)/data:/app/data" -p 8000:8000 qa

If everything goes well, you should see the status of the Pathway app, and all files in the data folder will be available for retrieval. You can find more details in my previous tutorial about Logseq.
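The container can take a little while before it accepts connections. If you script the setup, a small polling helper like the one below can wait for it. This is only a sketch: wait_for_http is a name I made up, it assumes curl is installed, and it treats any HTTP response on the port (even an error status) as "up" without checking a specific Pathway endpoint.

```shell
#!/usr/bin/env bash
# Poll http://HOST:PORT/ until something answers or the attempts run out.
# Arguments: host, port, number of attempts (default 30, one second apart).
wait_for_http() {
  local host="$1" port="$2" attempts="${3:-30}" i=0
  while [ "$i" -lt "$attempts" ]; do
    # Without --fail, curl exits 0 for any HTTP response, including 404.
    if curl --silent --output /dev/null --max-time 2 "http://${host}:${port}/"; then
      return 0
    fi
    i=$((i + 1))
    if [ "$i" -lt "$attempts" ]; then
      sleep 1
    fi
  done
  return 1
}

# Usage:
#   wait_for_http localhost 8000 60 && echo "Pathway API is reachable"
```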

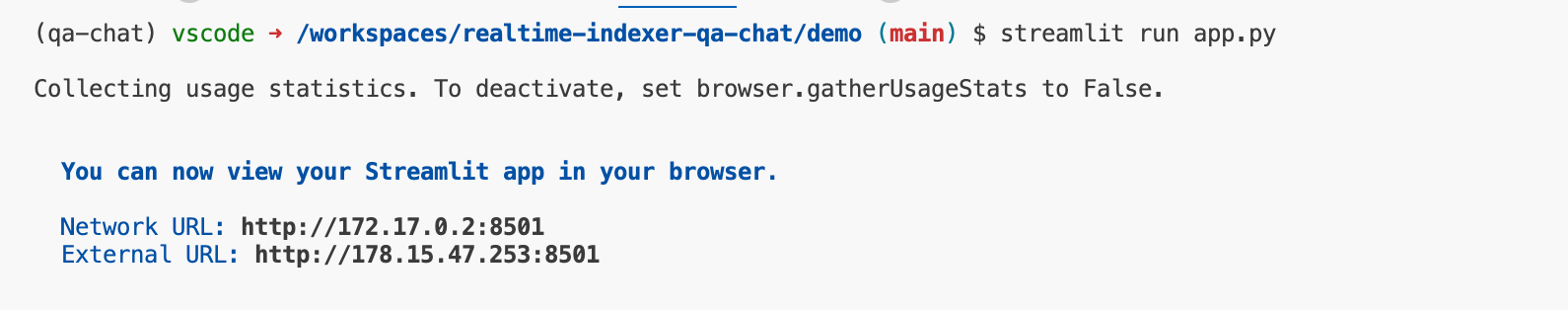

5. Run the Chat App

Now that the Pathway API is running, it's time to set up the chat app.

Clone the Chat App Repository

git clone https://github.com/pathway-labs/realtime-indexer-qa-chat.git

cd realtime-indexer-qa-chat

Create a .env File

Before starting the app, create a .env file with the following content:

OPENAI_API_KEY=your_openai_api_key_here

PATHWAY_HOST=localhost # or the container name if using Docker on Mac

PATHWAY_PORT=8000 # default port

Run the Chat App

streamlit run app.py

6. Test the Integration

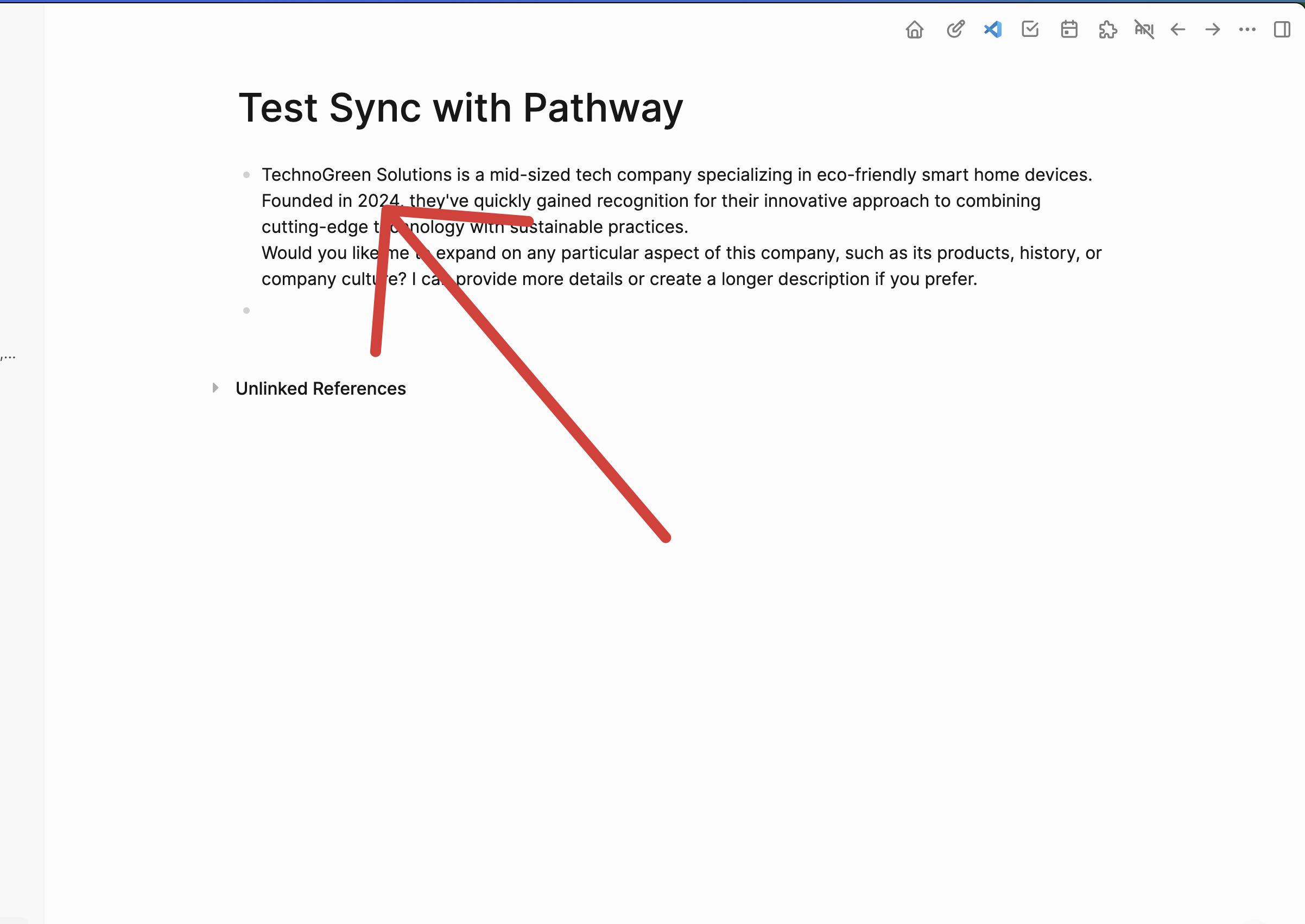

For testing, we will use the text mentioned at the beginning of this tutorial, which is already in Logseq. Note that the fictitious company was founded in 2020.

Let's check if the Pathway API has indexed it correctly.

Yes, it did. Great!

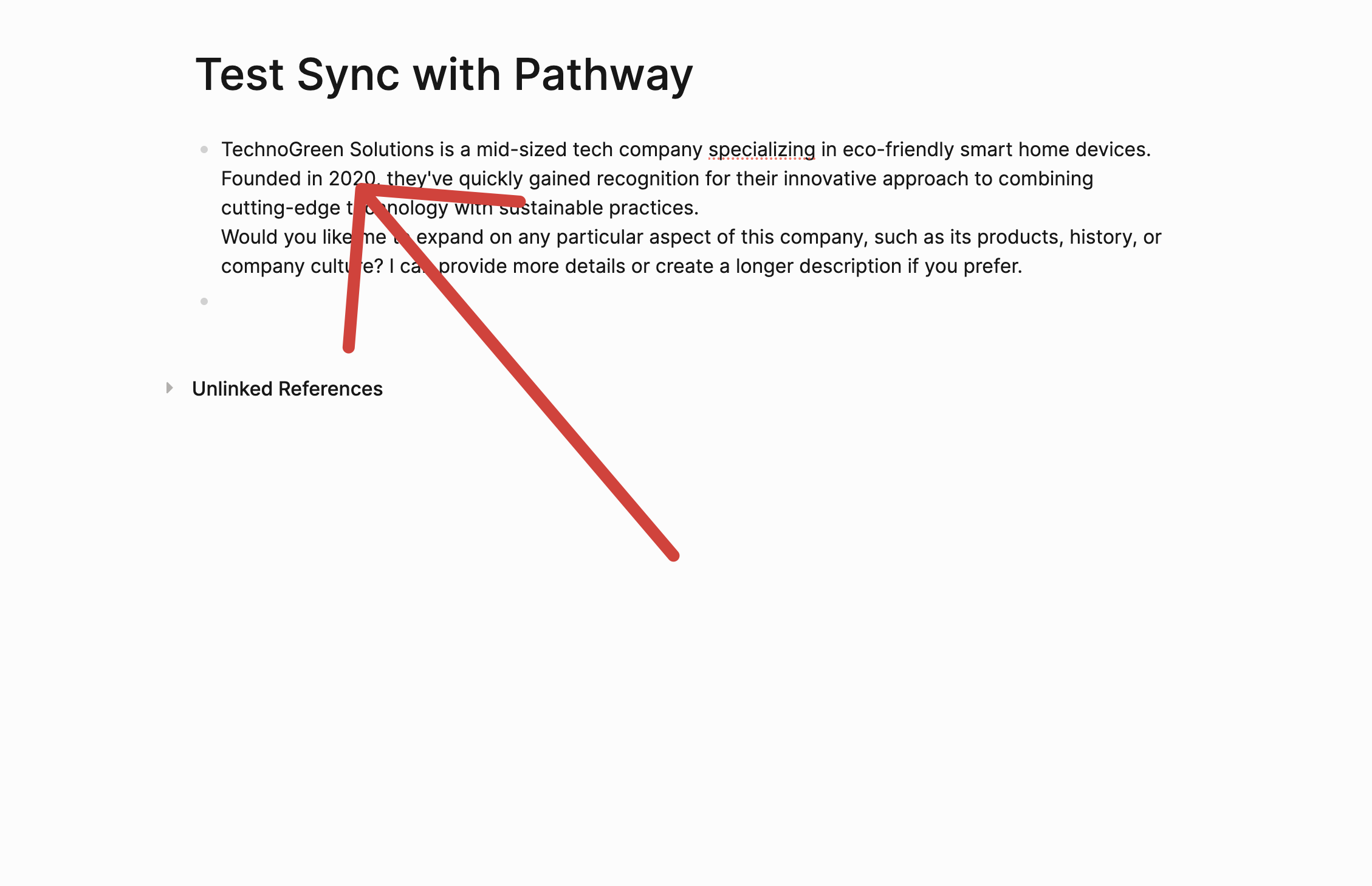
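The same check can be made from the command line instead of the chat window. Treat the sketch below with some caution: the endpoint paths (/v1/pw_list_documents and /v1/pw_ai_answer) and the "prompt" field are my assumptions about the demo pipeline's REST interface, and they have changed between Pathway releases, so confirm them against the README in your checkout. PATHWAY_HOST and PATHWAY_PORT mirror the values in the chat app's .env file; the function names are my own.

```shell
#!/usr/bin/env bash
PATHWAY_HOST="${PATHWAY_HOST:-localhost}"
PATHWAY_PORT="${PATHWAY_PORT:-8000}"
# ASSUMPTION: both paths depend on the Pathway version you run.
LIST_PATH="${LIST_PATH:-/v1/pw_list_documents}"
ANSWER_PATH="${ANSWER_PATH:-/v1/pw_ai_answer}"

# Build a full URL for a path on the Pathway API.
pathway_url() {
  printf 'http://%s:%s%s' "$PATHWAY_HOST" "$PATHWAY_PORT" "$1"
}

# Escape backslashes and double quotes so the text is safe inside a JSON string.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Wrap a question in the request body (the "prompt" field name is assumed).
prompt_body() {
  printf '{"prompt": "%s"}' "$(json_escape "$1")"
}

# List the documents Pathway has indexed.
list_documents() {
  curl --silent --fail -X POST "$(pathway_url "$LIST_PATH")" \
    -H 'Content-Type: application/json' -d '{}'
}

# Ask a question against the indexed notes.
ask() {
  curl --silent --fail -X POST "$(pathway_url "$ANSWER_PATH")" \
    -H 'Content-Type: application/json' -d "$(prompt_body "$1")"
}

# Usage:
#   list_documents
#   ask "When was TechnoGreen founded?"
```

If list_documents returns an error, the most likely cause is a different endpoint path in your version; override LIST_PATH and ANSWER_PATH rather than editing the functions.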

Now, let's edit the text in Logseq.

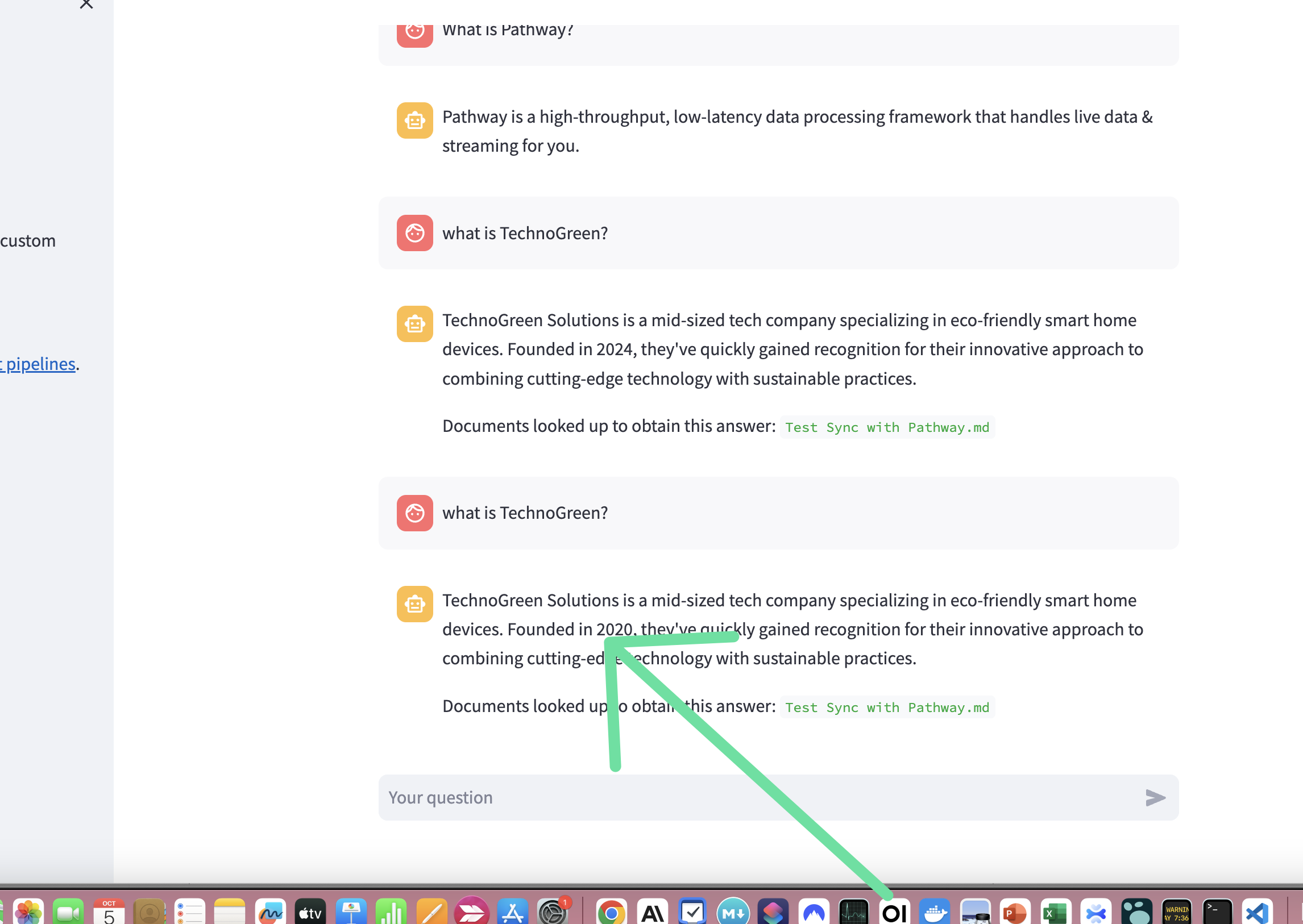

After making changes, ask the chat again (note that we did not explicitly tell Pathway about the update).

I asked the same question again, and voilà: the app has noticed the change (the founding year is now 2024 instead of 2020).

You can now experiment with more semantic questions as mentioned earlier and, of course, with real data.

Conclusion

In this tutorial, we systematically integrated an AI-powered semantic search into Logseq using existing tools without altering your workflow. By setting up the Pathway API and a corresponding chat app, you can now query your Logseq notes using natural language, making information retrieval more intuitive and efficient. This enhancement allows you to leverage the full potential of your notes, ensuring that valuable information is always at your fingertips, even when exact keywords escape you. To fully utilize this functionality across all your notes, simply link your other Logseq files in the same manner as demonstrated.
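Linking files one by one gets tedious, so here is a rough sketch of a helper that hard-links every Markdown page from a Logseq pages folder into the Pathway data folder. The link_pages name and both paths are placeholders of mine. It deliberately skips any file that already exists in the target under the same name instead of overwriting it.

```shell
#!/usr/bin/env bash
# Hard-link every *.md file from SRC into DST.
# Prints the number of pages that are linked once the run finishes.
link_pages() {
  local src="$1" dst="$2" page target count=0
  mkdir -p "$dst"
  for page in "$src"/*.md; do
    [ -e "$page" ] || continue            # the glob matched nothing
    target="$dst/$(basename "$page")"
    if [ "$page" -ef "$target" ]; then
      :                                   # already the same file, nothing to do
    elif [ -e "$target" ]; then
      echo "skipping $target: a different file with that name exists" >&2
      continue
    else
      ln "$page" "$target" || continue    # e.g. source is on another filesystem
    fi
    count=$((count + 1))
  done
  printf '%s\n' "$count"
}

# Usage (paths are placeholders):
#   link_pages /path/to/your/Logseq/Documents/pages ./data
```

Pages created in Logseq later are not picked up automatically, so rerun the helper after adding new notes; it is safe to run repeatedly.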