Empower Any AI Workflow: A Practical Blueprint for LLM Observability with Langfuse & LiteLLM


AI tools are becoming indispensable in our daily routines. Chatbots like Librechat and coding assistants like Cline are incredibly helpful for various tasks. However, you might have noticed them get stuck, behave unexpectedly, or simply wondered what’s happening behind the scenes.

For instance, Cline sometimes reaches a point where the model (such as Claude) starts to forget earlier parts of the conversation, and you are left wondering why.

If you're familiar with how Large Language Models (LLMs) work, you know that the conversation history is usually sent along with each new request to the model. But what happens when the "context window" (the maximum number of tokens the model can process in a single request) is full? How do clients like Cline, Librechat, OpenWebUI, or any other AI client that supports the OpenAI-compatible API (which most do) keep the conversation flowing?
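Clients handle this differently, and the exact strategy that Cline or Librechat uses is not something this sketch claims to reproduce. One common approach is a sliding window: keep the system prompt, then drop the oldest turns until the history fits a token budget. The sketch below assumes a crude estimate of roughly four characters per token (real clients use the model's tokenizer); `estimate_tokens` and `fit_to_window` are hypothetical names.

```python
from typing import Dict, List

Message = Dict[str, str]  # OpenAI-style message: {"role": ..., "content": ...}


def estimate_tokens(text: str) -> int:
    """Rough token estimate; assumes ~4 characters per token (not a real tokenizer)."""
    return max(1, len(text) // 4)


def fit_to_window(messages: List[Message], max_tokens: int) -> List[Message]:
    """Keep system messages, then as many of the most recent turns as fit in max_tokens."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]

    budget = max_tokens - sum(estimate_tokens(m["content"]) for m in system)
    kept: List[Message] = []
    # Walk backwards from the newest turn so the most recent context survives first.
    for message in reversed(turns):
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break  # everything older than this turn is dropped
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))


if __name__ == "__main__":
    history = [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "a" * 400},
        {"role": "assistant", "content": "b" * 400},
        {"role": "user", "content": "What did I ask first?"},
    ]
    trimmed = fit_to_window(history, max_tokens=120)
    print([m["role"] for m in trimmed])
```

With this sample history, the first long user message no longer fits and is silently dropped, so the model never sees it again. That would produce exactly the kind of "forgetting" described above. Other clients summarize older turns instead; inspecting the actual requests is how you find out which strategy your client really uses.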

These and many more questions might come to mind. With the growing use of AI tools, gaining better insight into the messages exchanged between your AI client and the model behind it (for example, OpenAI's models or Anthropic's Claude) becomes crucial for understanding, building, and using AI applications effectively.

One excellent way to get deep insight into what your AI clients are actually doing (which model they call, with which messages, how many tokens they consume, and much more) is Langfuse.

Other options exist, such as Datadog, but in my opinion Langfuse is much simpler to install, is open source, and can run entirely on your own infrastructure. Langfuse also offers a cloud service if you don't mind sharing your AI traces with the vendor.

Langfuse is an open-source observability and analytics platform designed specifically for applications that use Large Language Models (LLMs) and AI agents. It helps developers and teams monitor, debug, and optimize their AI-driven workflows by providing detailed insights into how LLMs are being used in production.

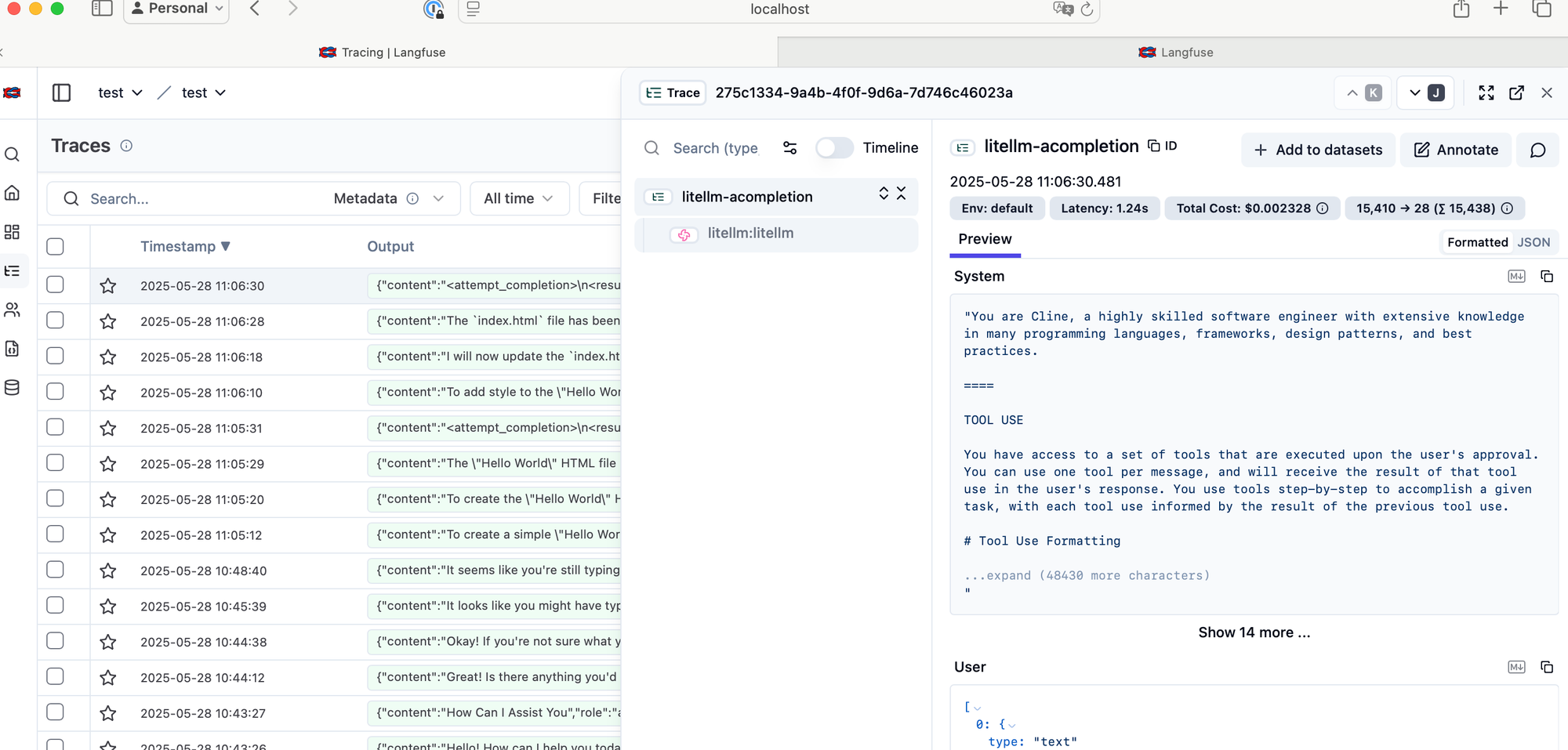

Ultimately, you'll be able to see all traces of all requests to all LLMs across all your AI applications (if you configure them to do so). I find this incredibly powerful for debugging and understanding what happens behind the scenes. Beyond that, this same data can be used for testing and fine-tuning your models, which we'll cover in future posts.

Langfuse can be used in at least two different ways:

- Through your code: Your application's code sends traces directly to Langfuse, which then analyzes the data and displays the results in a unified dashboard.

- Using a middleware proxy: This is the approach we'll take here. A proxy, LiteLLM, collects traces from your AI clients such as Librechat or Cline and sends them to Langfuse.
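To make the first approach concrete, here is a minimal, hypothetical sketch in plain Python. It is not the Langfuse SDK (which ships its own instrumentation helpers); the `traced` decorator and the in-memory `TRACES` list only stand in for "your code records each LLM call and ships it to an observability backend".

```python
import functools
import time
from typing import Any, Callable, Dict, List

# Hypothetical in-memory sink; a real setup would send these records to Langfuse.
TRACES: List[Dict[str, Any]] = []


def traced(func: Callable[..., Any]) -> Callable[..., Any]:
    """Record name, inputs, output, and duration of each successful call to func."""

    @functools.wraps(func)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        start = time.perf_counter()
        result = func(*args, **kwargs)
        TRACES.append(
            {
                "name": func.__name__,
                "input": {"args": args, "kwargs": kwargs},
                "output": result,
                "duration_s": time.perf_counter() - start,
            }
        )
        return result

    return wrapper


@traced
def fake_llm_call(prompt: str) -> str:
    """Stand-in for a real model call."""
    return prompt.upper()


if __name__ == "__main__":
    fake_llm_call("hello")
    print(TRACES[0]["name"], TRACES[0]["output"])
```

The catch is that every application has to be instrumented like this. The proxy approach leaves application code untouched, which is why it suits off-the-shelf clients like Librechat or Cline.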

LiteLLM is an open-source library and proxy server that provides a unified, OpenAI-compatible API for accessing and managing over 100 different large language models (LLMs) from various providers, such as OpenAI, Anthropic, Google Vertex AI, NVIDIA, Hugging Face, Azure, Ollama, and more.

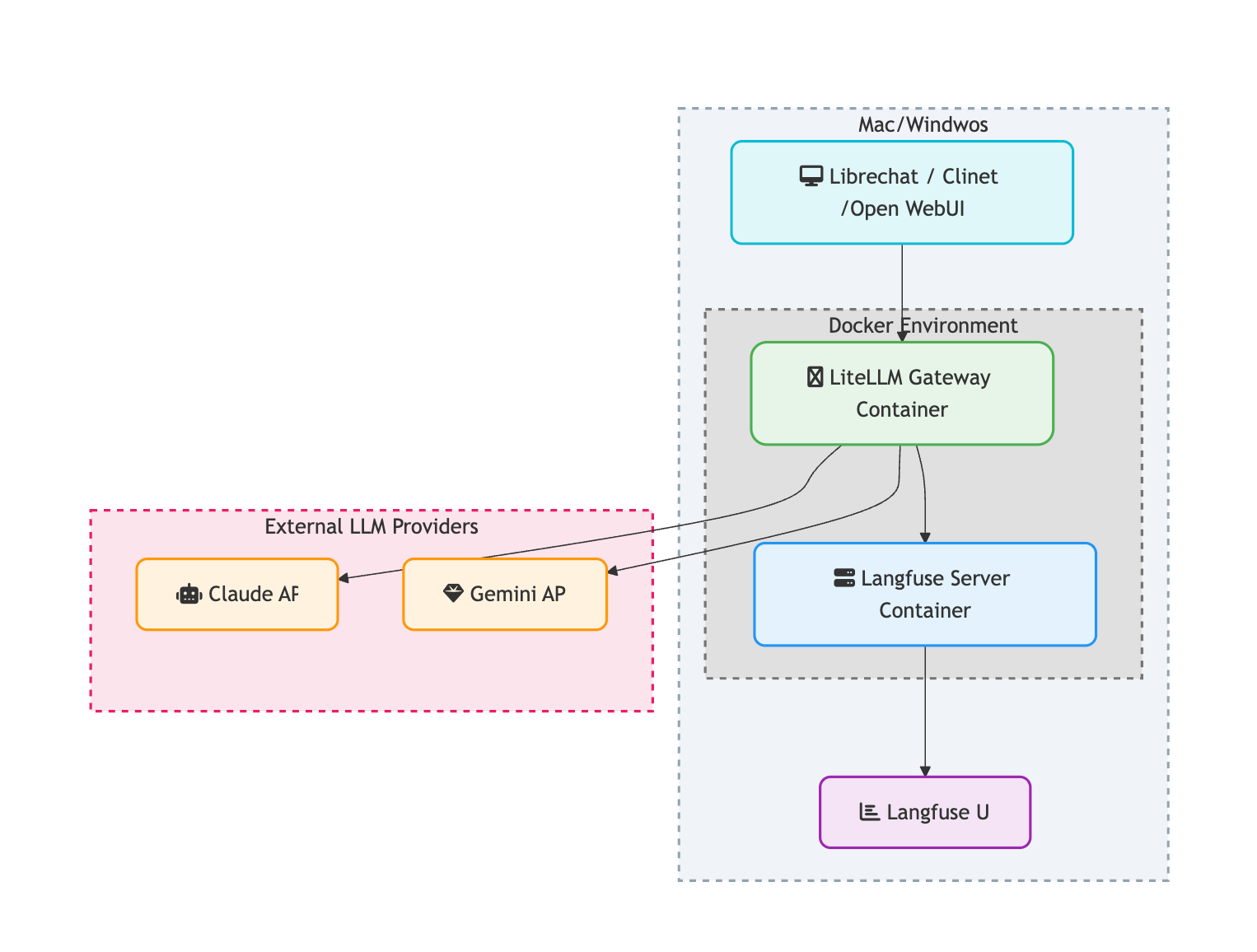

Conceptually, the proxy setup looks like this:

Typical Flow:

- You choose an AI model you want to "proxy" (i.e., intercept and monitor), for example, gpt-4o-mini.

- You configure your AI client (e.g., Librechat) to communicate with LiteLLM instead of directly with OpenAI or other LLM providers.

- Requests for the configured model go into LiteLLM.

- LiteLLM forwards these requests to the actual provider (e.g., OpenAI) and sends a trace of each request and its response to Langfuse for logging and observation.
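The flow can be sketched in a few lines of Python. This is a simplified illustration, not LiteLLM's implementation: `proxy_request` is a hypothetical name, and `call_provider` and `send_trace` are hypothetical callables standing in for the real LLM provider and the Langfuse integration. What it shows is the ordering: the request itself goes to the provider, while a trace of the request and response goes to Langfuse.

```python
from typing import Any, Callable, Dict, List

Request = Dict[str, Any]
Response = Dict[str, Any]


def proxy_request(
    request: Request,
    call_provider: Callable[[Request], Response],
    send_trace: Callable[[Dict[str, Any]], None],
) -> Response:
    """Forward a chat request to the provider, report a trace, return the response."""
    response = call_provider(request)  # the real LLM call (e.g., OpenAI)
    send_trace(  # observability side channel (e.g., Langfuse)
        {
            "model": request["model"],
            "messages": request["messages"],
            "output": response["choices"][0]["message"]["content"],
            "usage": response.get("usage", {}),
        }
    )
    return response  # the client receives the provider's answer unchanged


if __name__ == "__main__":
    traces: List[Dict[str, Any]] = []

    def fake_provider(req: Request) -> Response:
        # Hypothetical stand-in returning an OpenAI-style response.
        return {
            "choices": [{"message": {"role": "assistant", "content": "Hi!"}}],
            "usage": {"prompt_tokens": 5, "completion_tokens": 2, "total_tokens": 7},
        }

    reply = proxy_request(
        {"model": "gpt-4o-mini", "messages": [{"role": "user", "content": "Hello"}]},
        fake_provider,
        traces.append,
    )
    print(reply["choices"][0]["message"]["content"], traces[0]["usage"]["total_tokens"])
```

Because the trace is a side channel, the client sees the same response it would get from the provider directly; only the proxy knows that anything was recorded.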

High-Level Overview of the Setup

Here’s a quick breakdown of the steps involved:

- Install and configure Langfuse.

- Install and configure LiteLLM.

- Connect Langfuse to LiteLLM.

- Add LiteLLM as a model source to your AI clients like Librechat or Cline.

Once completed, you should be able to see the traces in Langfuse.
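From the client's side, adding LiteLLM as a model source usually just means pointing an OpenAI-compatible base URL at the proxy. As a sketch, assuming LiteLLM listens on `http://localhost:4000` (the port used in LiteLLM's own examples; adjust it to your setup) and that `sk-1234` is a placeholder for whatever key your LiteLLM instance accepts, the request a client sends looks roughly like this. It uses only the standard library and builds the request without sending it; `build_chat_request` is a hypothetical helper.

```python
import json
import urllib.request

# Assumption: LiteLLM proxy reachable here; change host/port to match your deployment.
LITELLM_BASE_URL = "http://localhost:4000"
# Placeholder key; use the key configured for your LiteLLM instance.
LITELLM_API_KEY = "sk-1234"


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request aimed at the LiteLLM proxy."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{LITELLM_BASE_URL}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {LITELLM_API_KEY}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("gpt-4o-mini", "Hello!")
    print(req.get_method(), req.full_url)
    # To actually send it (requires a running proxy):
    # with urllib.request.urlopen(req) as resp:
    #     print(json.load(resp)["choices"][0]["message"]["content"])
```

In a client such as Librechat or Cline, these same two values (base URL and API key) are what you enter in its OpenAI-compatible provider settings.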

Prerequisites

Before we start, make sure you have:

- Docker (including Docker Compose) installed.

- An AI client like Librechat, Cline, etc., to test with.

---

1. Setting Up Langfuse

We'll begin by installing Langfuse using Docker.

Installing and starting Langfuse is straightforward, as described in their official documentation:

https://langfuse.com/self-hosting/docker-compose

git clone https://github.com/langfuse/langfuse.git

cd langfuse

docker compose up

Once everything is up and running, you can access the Langfuse application in your web browser at:

http://localhost:3000

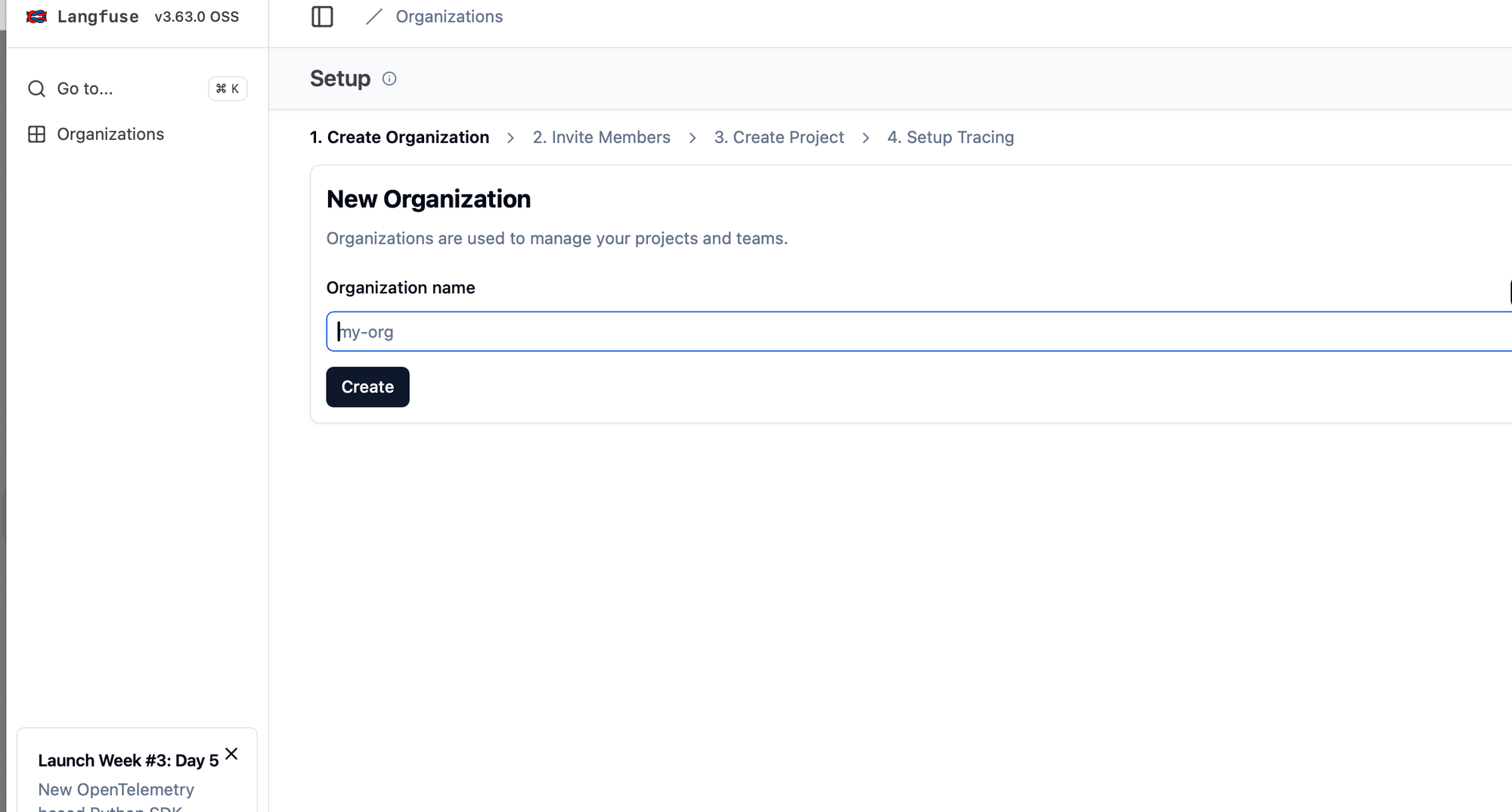
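The first start can take a while, since images have to be pulled and databases initialized. If you script the setup, a small readiness check helps. This sketch simply polls a URL until it returns any HTTP response; it assumes Langfuse is published at `http://localhost:3000` as above, and `wait_for_http` is a hypothetical helper name.

```python
import time
import urllib.error
import urllib.request

# Bypass any HTTP(S)_PROXY settings: we want to reach the local container directly.
_OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def wait_for_http(url: str, timeout_s: float = 60.0, interval_s: float = 2.0) -> bool:
    """Poll url until it answers with any HTTP response; give up after timeout_s seconds."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with _OPENER.open(url, timeout=interval_s):
                return True  # successful response
        except urllib.error.HTTPError:
            return True  # the server answered, just with an error status (e.g., 404)
        except OSError:
            time.sleep(interval_s)  # refused or timed out: not up yet, retry
    return False
```

For example, `wait_for_http("http://localhost:3000")` blocks until the Langfuse UI responds or roughly a minute has passed.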

Important note: If any of the default ports are already in use on your machine, you may need to change the host ports in the docker-compose.yml file (we'll do this for LiteLLM later as well).

Now that the application is running, create a new organization and project within Langfuse.

After creating your organization and project, you'll be able to obtain a public key and a secret key. We'll use these keys in LiteLLM to send traces to Langfuse.
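LiteLLM's Langfuse integration reads these keys from the environment variables `LANGFUSE_PUBLIC_KEY` and `LANGFUSE_SECRET_KEY`, plus `LANGFUSE_HOST` for a self-hosted instance (check the LiteLLM documentation for your version). The sketch below collects those settings with a hypothetical helper, `load_langfuse_settings`, and builds the HTTP Basic auth header that Langfuse's public API expects (public key as username, secret key as password), which is useful if you want to query the Langfuse API directly. The `http://localhost:3000` fallback is an assumption matching the setup above.

```python
import base64
import os
from typing import Dict, Mapping


def load_langfuse_settings(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Read the Langfuse connection settings from the environment (or a given mapping)."""
    missing = [k for k in ("LANGFUSE_PUBLIC_KEY", "LANGFUSE_SECRET_KEY") if not env.get(k)]
    if missing:
        raise KeyError(f"Missing environment variables: {', '.join(missing)}")
    return {
        "public_key": env["LANGFUSE_PUBLIC_KEY"],
        "secret_key": env["LANGFUSE_SECRET_KEY"],
        # Assumption: self-hosted Langfuse on the default port from the setup above.
        "host": env.get("LANGFUSE_HOST", "http://localhost:3000"),
    }


def basic_auth_header(public_key: str, secret_key: str) -> str:
    """HTTP Basic auth value: public key as username, secret key as password."""
    token = base64.b64encode(f"{public_key}:{secret_key}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"
```

When LiteLLM runs in Docker, these variables belong in the LiteLLM container's environment, for example via an `environment:` entry in its docker-compose.yml. Keep in mind that inside a container `localhost` refers to the container itself, so `LANGFUSE_HOST` has to point at an address the LiteLLM container can actually reach.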


---

2. Setting Up LiteLLM (The Proxy)

The installation process for LiteLLM is quite similar to that of Langfuse and also uses Docker.

However, because Langfuse and LiteLLM both run services such as PostgreSQL that can be published on the same default host ports, we need to change the ports for one of them to avoid conflicts. I've chosen to do this for LiteLLM in its docker-compose.yml file.
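Before starting the second stack, you can check which host ports are already taken. This sketch tries to open a TCP connection to each port on localhost; a successful connection means something is already listening there. The port list is an assumption based on common defaults (3000 for the Langfuse web UI, 5432 for PostgreSQL, 4000 for LiteLLM), so compare it with the `ports:` entries in your own docker-compose.yml files. `is_port_in_use` and `check_ports` are hypothetical helper names.

```python
import socket
from typing import Dict, Iterable


def is_port_in_use(port: int, host: str = "127.0.0.1", timeout_s: float = 0.5) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout_s)
        return sock.connect_ex((host, port)) == 0


def check_ports(ports: Iterable[int]) -> Dict[int, bool]:
    """Map each port to whether it is already in use."""
    return {port: is_port_in_use(port) for port in ports}


if __name__ == "__main__":
    # Assumed defaults: Langfuse web UI, PostgreSQL, LiteLLM proxy.
    for port, busy in check_ports([3000, 5432, 4000]).items():
        print(f"port {port}: {'in use' if busy else 'free'}")
```

If a port is busy, change only the host side of the mapping in LiteLLM's docker-compose.yml, for example `"5433:5432"` instead of `"5432:5432"`; the container keeps its internal port while the host uses a free one.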