The Simple Question That Exposes AI Hallucinations in Your Code

Everyone is "vibe coding" these days, often with mixed results. While it can be exciting, relying entirely on this approach can sometimes be painful.

Consider, for example, a setup wizard with three or four steps, such as entering user details, selecting a plan, verifying payment, and finalizing the account. Suppose you are coding the fourth step. If the AI coder has not implemented it correctly, you have to click through all three previous steps manually every single time, just to discover that the final step is still failing.

This can be a very painful process.

I have discussed easier approaches to these types of problems in the past (spoiler: let the AI test a new feature in isolation, ideally through an API). That approach requires some understanding of the application's architecture, which is always worth having. Alternatively, you could ask another model to review the code, but that can become expensive.
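To make "testing in isolation" concrete, here is a minimal sketch in Python. It assumes the wizard's steps can be written as plain functions that take a state object and return a new one. The `WizardState` fields, the step function names, and the fake payment check are all hypothetical, not taken from any real application. The point is that a test can build the state steps 1 to 3 would have produced and call step 4 directly, so neither you nor the AI has to click through the wizard to see whether the final step works.

```python
from dataclasses import dataclass, replace
from typing import Optional


# Hypothetical wizard state; field names are illustrative assumptions.
@dataclass(frozen=True)
class WizardState:
    name: str = ""
    email: str = ""
    plan: Optional[str] = None
    payment_verified: bool = False
    account_id: Optional[str] = None


def enter_user_details(state: WizardState, name: str, email: str) -> WizardState:
    # Step 1: record user details.
    return replace(state, name=name, email=email)


def select_plan(state: WizardState, plan: str) -> WizardState:
    # Step 2: record the chosen plan.
    return replace(state, plan=plan)


def verify_payment(state: WizardState, token: str) -> WizardState:
    # Step 3: stand-in for a real payment check; any non-empty token passes.
    return replace(state, payment_verified=bool(token))


def finalize_account(state: WizardState) -> WizardState:
    # Step 4: the step under development. It validates its inputs explicitly,
    # so a broken earlier step fails loudly instead of silently.
    if not state.email:
        raise ValueError("missing user details")
    if state.plan is None:
        raise ValueError("no plan selected")
    if not state.payment_verified:
        raise ValueError("payment not verified")
    return replace(state, account_id=f"acct-{state.email}")


def test_finalize_in_isolation() -> None:
    # Build the state steps 1-3 would produce, without running them.
    ready = WizardState(name="Ada", email="ada@example.com",
                        plan="pro", payment_verified=True)
    done = finalize_account(ready)
    assert done.account_id == "acct-ada@example.com"


def test_finalize_rejects_unpaid() -> None:
    unpaid = WizardState(name="Ada", email="ada@example.com", plan="pro")
    try:
        finalize_account(unpaid)
    except ValueError as exc:
        assert "payment" in str(exc)
    else:
        raise AssertionError("expected ValueError for unpaid state")


if __name__ == "__main__":
    test_finalize_in_isolation()
    test_finalize_rejects_unpaid()
    print("step 4 tests passed")
```

The same idea carries over if the steps are exposed as HTTP endpoints: a test (or the AI) sends a prepared payload straight to the final step instead of replaying the whole flow. Keeping each step's inputs explicit is the architectural choice that makes this possible.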

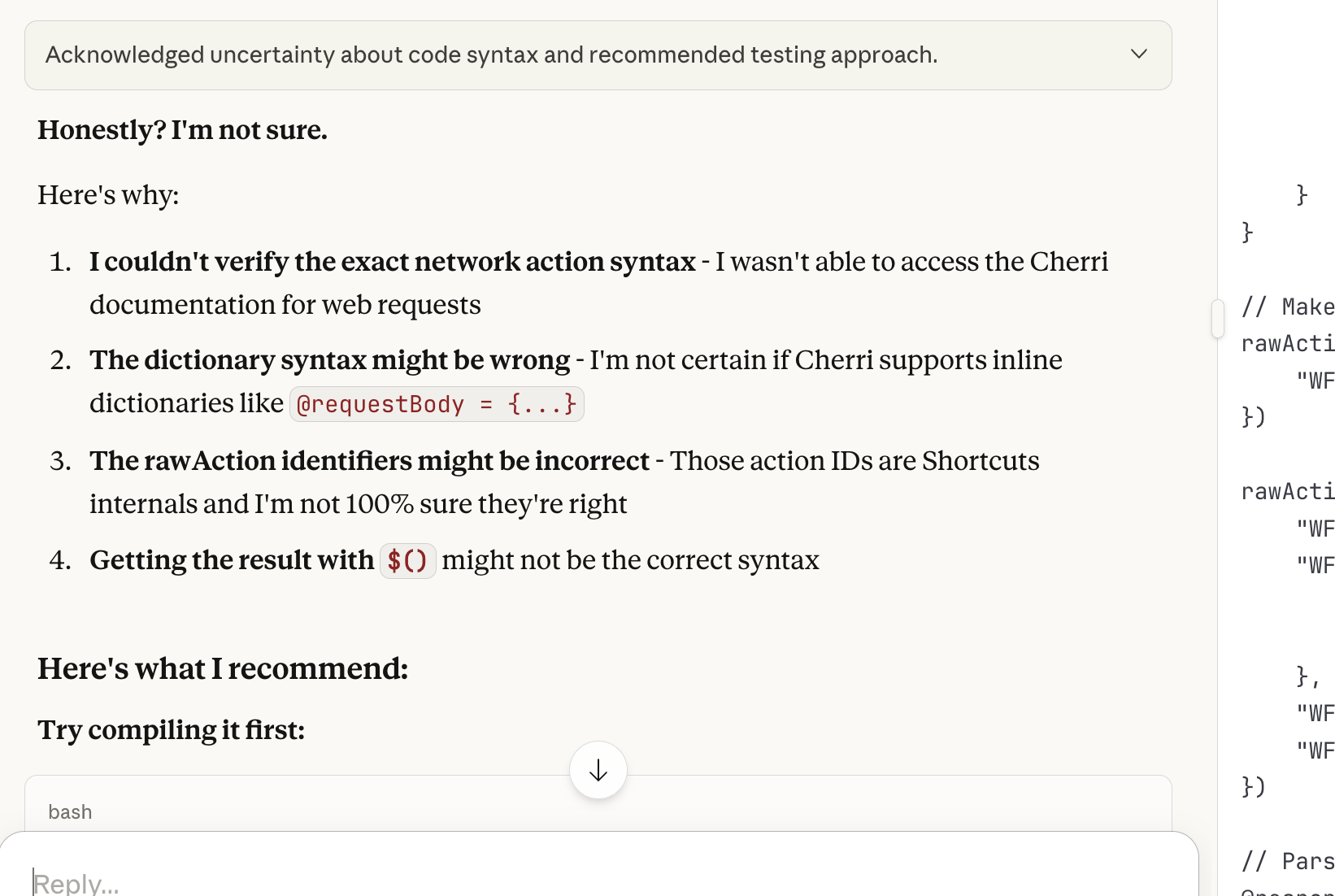

This kind of failure typically happens when the AI hallucinates or attempts to solve an "unsolvable problem." For example, it may try to use an API feature that does not exist, such as calling an endpoint that is not listed in the documentation, or reach for a deprecated library function just to "please you", with severe consequences.