The 10,000 Token Challenge: How to Skim a Million-Token Website with AI in 3 Minutes

In a recent article, I detailed a new method for teaching AI to skim content in a human-like manner. When we need to quickly get an overview of a lengthy document or book, we rarely read it from beginning to end. Instead, we scan the outline, jump between headlines, and selectively focus on the most relevant sections. While in-depth reading has its place, this post will focus on the power of skimming.

The same technique can be applied to analyze an entire website efficiently. Why does this matter? Here are a few core use cases where the approach pays off:

- Targeted Search & Retrieval: Pinpoint specific information—a needle in a digital haystack—without the cost and time of a full crawl or waiting for search engine indexing. Find a specific function, a name, or a key statistic on a massive site in moments.

- Market Research: Instantly analyze competitor websites to understand their marketing strategies, product features, and pricing.

- Navigating Large Codebases: Quickly get the lay of the land in an unfamiliar code repository to understand its structure and key components.

- Analyzing Legal & Financial Documents: Rapidly scan voluminous contracts or reports for specific clauses, keywords, or data points.

- Accelerating Academic Research: Sift through hundreds of academic papers to find the most relevant studies for a literature review.

Imagine you need to find specific information on a large site without resorting to a full crawl with tools like Apify or relying on the sometimes superficial results from a search engine. The solution is to employ an AI capable of skimming. Given a clear methodology for this process, the AI can navigate the website and find the information you need.

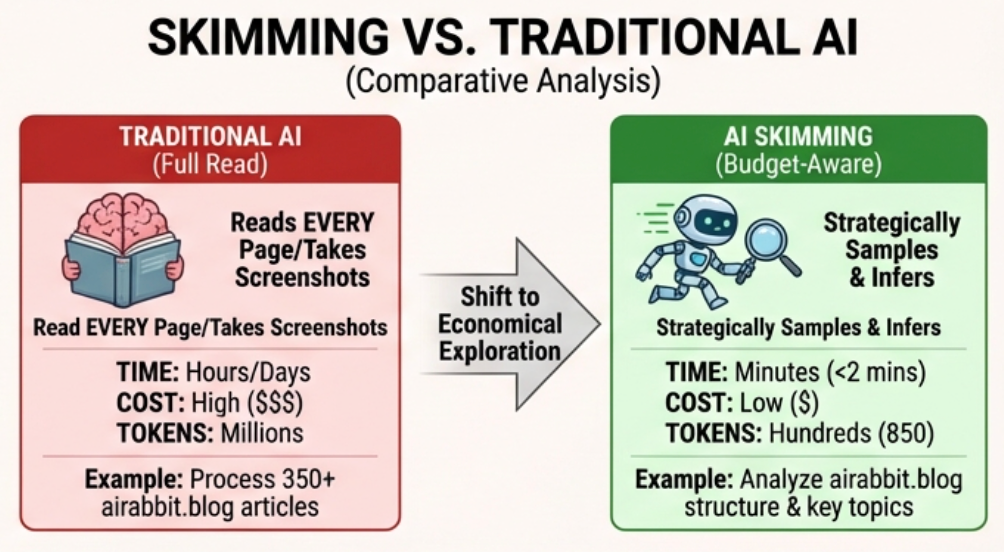

You might argue that existing AI agents or GPTs can already accomplish this. That is true, but using them for this task is often like using a cannon to shoot a sparrow. Most agents read entire webpages or take screenshots and process them visually. For a large website, that approach consumes a significant amount of time, tokens, and money. The text-based method described here achieves the same goal in a fraction of the time and at a much lower cost.

Let's dive into an example. I wanted to get a quick overview of my blog, airabbit.blog. To do this, I provided an AI with access to only three basic tools: curl, grep, and head.
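
To make the three tools concrete, here is a minimal Python sketch of what a `curl | grep | head` pipeline does to a page: fetch the raw text, keep only the lines that match a pattern, and stop after the first few matches. The function names (`fetch`, `grep`, `head`, `skim`) and the heading pattern are illustrative assumptions, not the exact commands the AI ran in this experiment.

```python
import re
import urllib.request
from itertools import islice
from typing import Iterable, Iterator, List


def fetch(url: str, timeout: float = 10.0) -> str:
    """Rough stand-in for `curl -s URL`: return the response body as text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def grep(lines: Iterable[str], pattern: str) -> Iterator[str]:
    """Rough stand-in for `grep -i PATTERN`: yield lines matching the regex."""
    rx = re.compile(pattern, re.IGNORECASE)
    return (line for line in lines if rx.search(line))


def head(lines: Iterable[str], n: int) -> List[str]:
    """Rough stand-in for `head -n N`: keep only the first n lines."""
    return list(islice(lines, n))


def skim(text: str, pattern: str, n: int = 20) -> List[str]:
    """Chain the steps: split into lines, filter by pattern, truncate."""
    return head(grep(text.splitlines(), pattern), n)
```

With network access, a call such as `skim(fetch("https://airabbit.blog"), r"<h[1-3]", n=20)` would return at most 20 lines that look like headings, so the model sees only a thin slice of the page rather than the full HTML.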

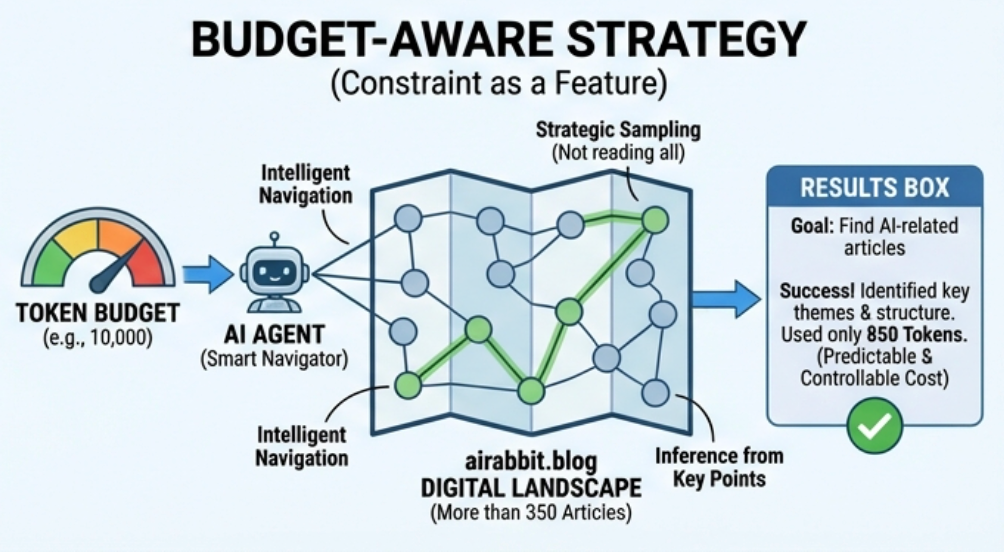

Equipped with just these three commands and a set of predefined token limits, the AI can explore any website, even one with thousands of pages. The beauty of this approach is its adaptability. I can instruct the AI to focus on specific topics, such as "AI-related articles" or "marketing content."
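
The "predefined token limits" can be thought of as a cap on how much tool output the model is allowed to see. The sketch below shows one hypothetical way to enforce such a cap. It estimates tokens with a rough four-characters-per-token heuristic (an assumption, not a real tokenizer), clips each tool result to a per-call limit, and returns nothing once the overall budget is spent. The class name `TokenBudget` and the default numbers are illustrative. The 10,000 default only echoes the title, and the actual limits used in the experiment are not stated here.

```python
from dataclasses import dataclass

CHARS_PER_TOKEN = 4  # Assumed rough heuristic; a real tokenizer will differ.


def estimate_tokens(text: str) -> int:
    """Approximate the token count from the character count (rounded up)."""
    return -(-len(text) // CHARS_PER_TOKEN)


@dataclass
class TokenBudget:
    total: int = 10_000   # Hypothetical overall budget for the whole session.
    per_call: int = 500   # Hypothetical cap on any single tool output.
    used: int = 0         # Estimated tokens handed to the model so far.

    def clip(self, output: str) -> str:
        """Return as much of `output` as the remaining budget allows."""
        allowed = min(self.per_call, self.total - self.used)
        if allowed <= 0:
            return ""  # Budget exhausted: the model sees nothing more.
        clipped = output[: allowed * CHARS_PER_TOKEN]
        self.used += estimate_tokens(clipped)
        return clipped
```

Every tool result would pass through `budget.clip(...)` before it reaches the model. Narrowing the focus to a topic such as "AI-related articles" then comes down to choosing a tighter search pattern, which leaves more of the budget for relevant lines.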

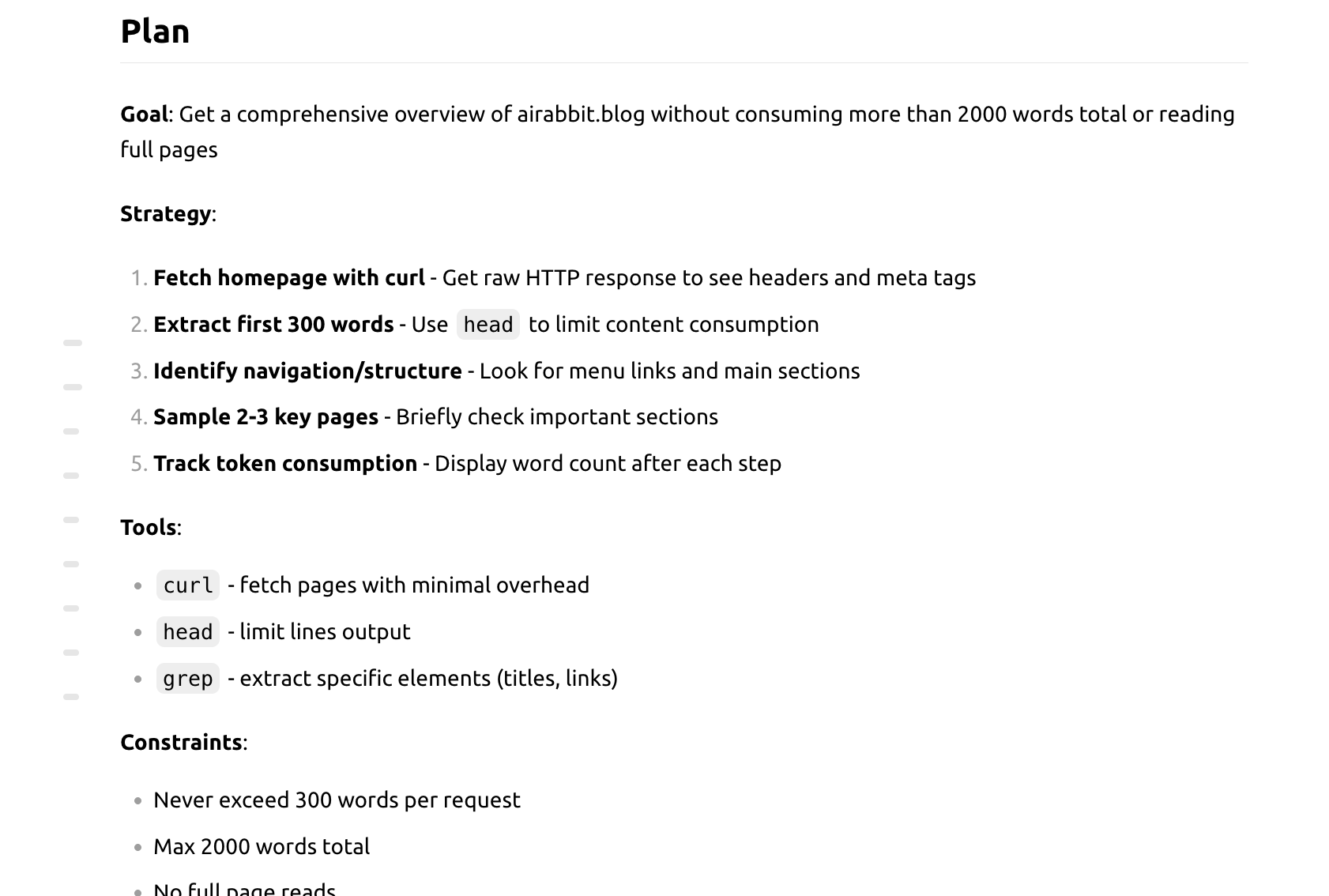

The AI began by creating a plan to efficiently scan the website without reading every page, using only the provided command-line tools.

Here is the plan it generated:

And here is a visual representation of the process:

In less than two minutes, the AI developed a comprehensive overview of the website. It consumed only 850 tokens, a stark contrast to the millions of tokens that would have been required to process the blog's more than 350 articles.