Reliable Chat with any GitHub Repo w/o RAG

Struggling to sift through documentation for coding solutions? The classic "RTFM" has evolved: now you simply ask GPT. But for complete answers, you can give an LLM with a huge context window, such as Gemini, an entire documentation set as context. Learn how to integrate this in your coding w...

If you use AI for coding, you probably know this problem, or let’s call it a challenge: you have a large set of documentation and want to find one specific piece of information.

A few years ago, the simple answer was RTFM.

The new way: ask GPT.

If you work with coding assistants like Cursor or Cline, it gets even easier.

In many cases, this works fine, but sometimes it does not produce a complete answer and only gives part of what you need.

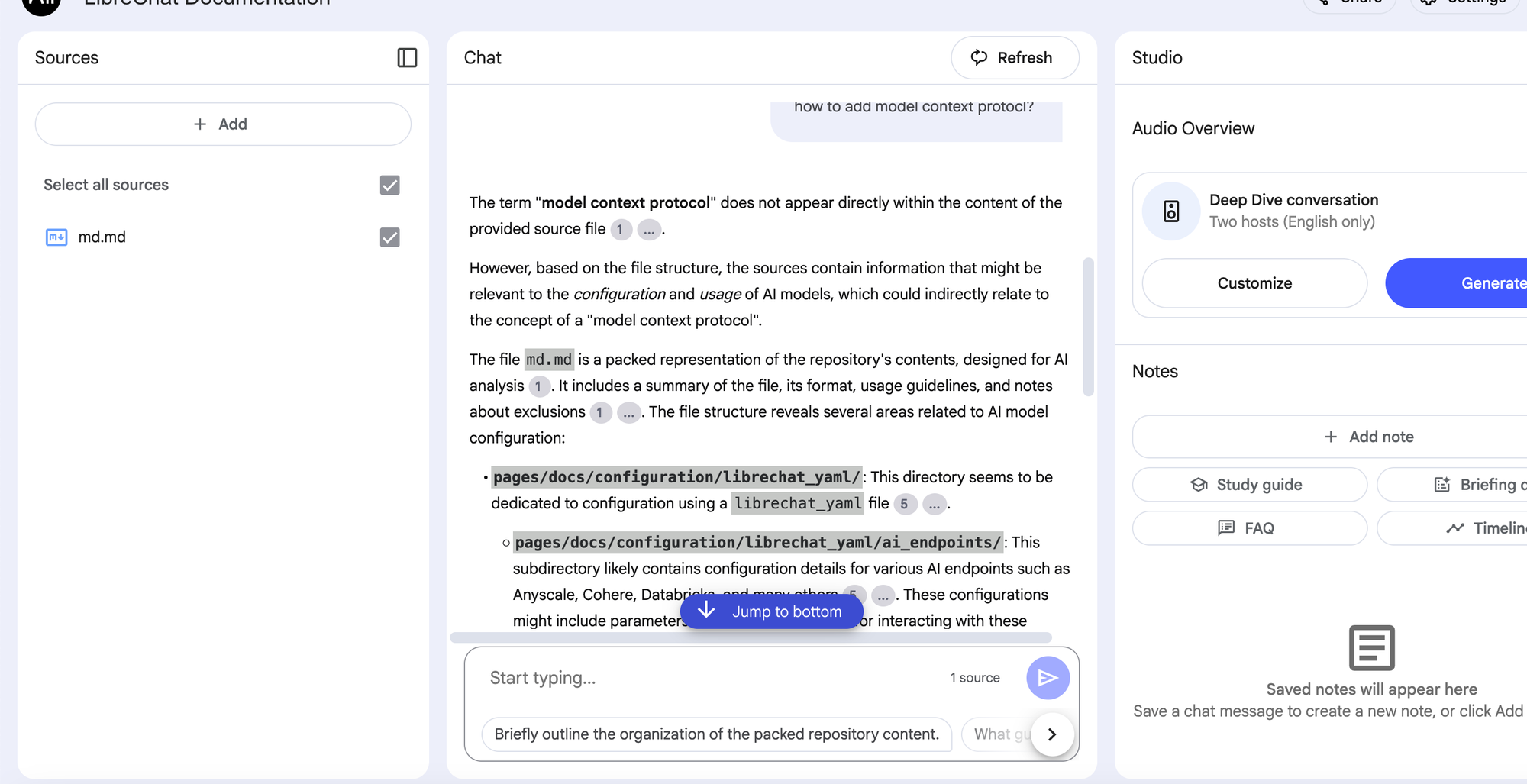

An Example Using NotebookLM

Here is an example where I use NotebookLM (powered by Gemini), feeding it the documentation of LibreChat, a mind-blowing open-source LLM chat tool you can read about in my other articles.

In this example, I asked how to add MCP (Model Context Protocol) to the configuration, and it either did not know the answer or provided only part of it.

Why Answers Can Be Random

This behavior can be fairly random, even with powerful tools like Cursor or Windsurf.

Why?

The main reason is that, depending on the size of the documentation and the model's capacity (its token limit), these tools implicitly apply Retrieval-Augmented Generation (RAG) to the documentation.

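In simple terms, they split the documentation into chunks, store vector embeddings of those chunks in a database, and at question time retrieve only the chunks most similar to your question.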
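To make this concrete, here is a toy sketch of the retrieval step. It is not how Cursor or any other tool works internally: it swaps learned embeddings for simple word counts, and the documentation chunks are made up for illustration. What it does show is the key property of RAG: only the top `k` chunks (here, 2) reach the model, so a chunk with essential details can be left out.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words term-count vector (real tools use learned embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(chunks: list[str], question: str, k: int = 2) -> list[str]:
    """Return only the k chunks most similar to the question; the rest never reach the LLM."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(embed(c), q), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Hypothetical documentation chunks, for illustration only.
    docs = [
        "To add MCP servers, edit the configuration file.",
        "The configuration file supports custom endpoints.",
        "MCP servers also need their command and args set.",
        "Install the app with Docker.",
    ]
    for chunk in retrieve(docs, "How do I add MCP servers to the configuration?", k=2):
        print(chunk)
```

With the sample question, the two chunks that mention "configuration" win, while the chunk about `command` and `args` is never retrieved, so an answer built only from the retrieved text would be incomplete.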

The quality of this RAG-based retrieval is one of the central topics of research and applications in generative AI today.

If retrieval misses a relevant chunk, the model never sees it, so generating a complete answer on the spot can be hard.

A Simpler Solution: Using the Entire Documentation as Context

Fortunately, a simpler solution is now possible thanks to huge context windows of up to 2 million tokens, as in Gemini. Depending on the size of the repository, you can use all of the documentation as context for the LLM.
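Before pasting anything, it helps to check whether the documentation fits at all. The sketch below walks a local copy of the docs folder and estimates its size in tokens. The 4-characters-per-token ratio is a common rule of thumb for English text, not an exact count (the real number depends on the model's tokenizer), and the file extensions, the 2-million-token default, and the 50,000-token reserve for your question and the answer are assumptions to adjust.

```python
from pathlib import Path

# Rough rule of thumb (assumption): about 4 characters per token for English text.
# Real counts depend on the model's tokenizer, so treat the result as an estimate.
CHARS_PER_TOKEN = 4
# Assumed documentation file types; extend as needed.
DOC_SUFFIXES = {".md", ".mdx", ".txt", ".rst"}


def estimate_tokens(docs_dir: str) -> int:
    """Estimate the token count of all documentation files under docs_dir (recursively)."""
    total_chars = 0
    for path in Path(docs_dir).rglob("*"):
        if path.is_file() and path.suffix.lower() in DOC_SUFFIXES:
            total_chars += len(path.read_text(encoding="utf-8", errors="ignore"))
    return total_chars // CHARS_PER_TOKEN


def fits_in_context(docs_dir: str, context_tokens: int = 2_000_000, reserve: int = 50_000) -> bool:
    """True if the docs plus a reserve for the question and answer fit the context window."""
    return estimate_tokens(docs_dir) + reserve <= context_tokens
```

For example, `fits_in_context("docs", context_tokens=200_000)` would check a local `docs` folder against a smaller, 200,000-token window.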

This is like copying and pasting the entire documentation into the chat window of an LLM that supports large context windows, such as ChatGPT, Claude, or Gemini, and then asking questions about that context.
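Rather than copying pages by hand, you can generate that "paste everything" text with a small script. This is a minimal sketch, assuming the docs are Markdown-like text files in a local folder; the `===== FILE: <path> =====` separator and the prompt wording are arbitrary choices for illustration, not a format any of these tools requires.

```python
from pathlib import Path

# Assumed documentation file types; extend as needed.
DOC_SUFFIXES = {".md", ".mdx", ".txt", ".rst"}


def build_context(docs_dir: str) -> str:
    """Concatenate every documentation file into one text, with a header naming each file."""
    root = Path(docs_dir)
    parts = []
    # Sort paths so the output is deterministic.
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix.lower() in DOC_SUFFIXES:
            rel = path.relative_to(root).as_posix()
            text = path.read_text(encoding="utf-8", errors="ignore")
            parts.append(f"===== FILE: {rel} =====\n{text.strip()}\n")
    return "\n".join(parts)


def build_prompt(docs_dir: str, question: str) -> str:
    """Put the full documentation first, then the question to be answered from it."""
    return (
        "Answer the question using only the documentation below.\n\n"
        f"{build_context(docs_dir)}\n"
        f"Question: {question}\n"
    )
```

You could write the result of `build_context("docs")` to a file and upload or paste it into the chat; keeping the file name in each header lets the model point you to the page an answer came from.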

Practical Example: Adding MCP Support to LibreChat

Sounds abstract? Let’s try the same topic: figuring out how to add MCP support to LibreChat using this new approach.

Of course, this works for any question about any other documentation out there. All you need is access to the documentation's source files.

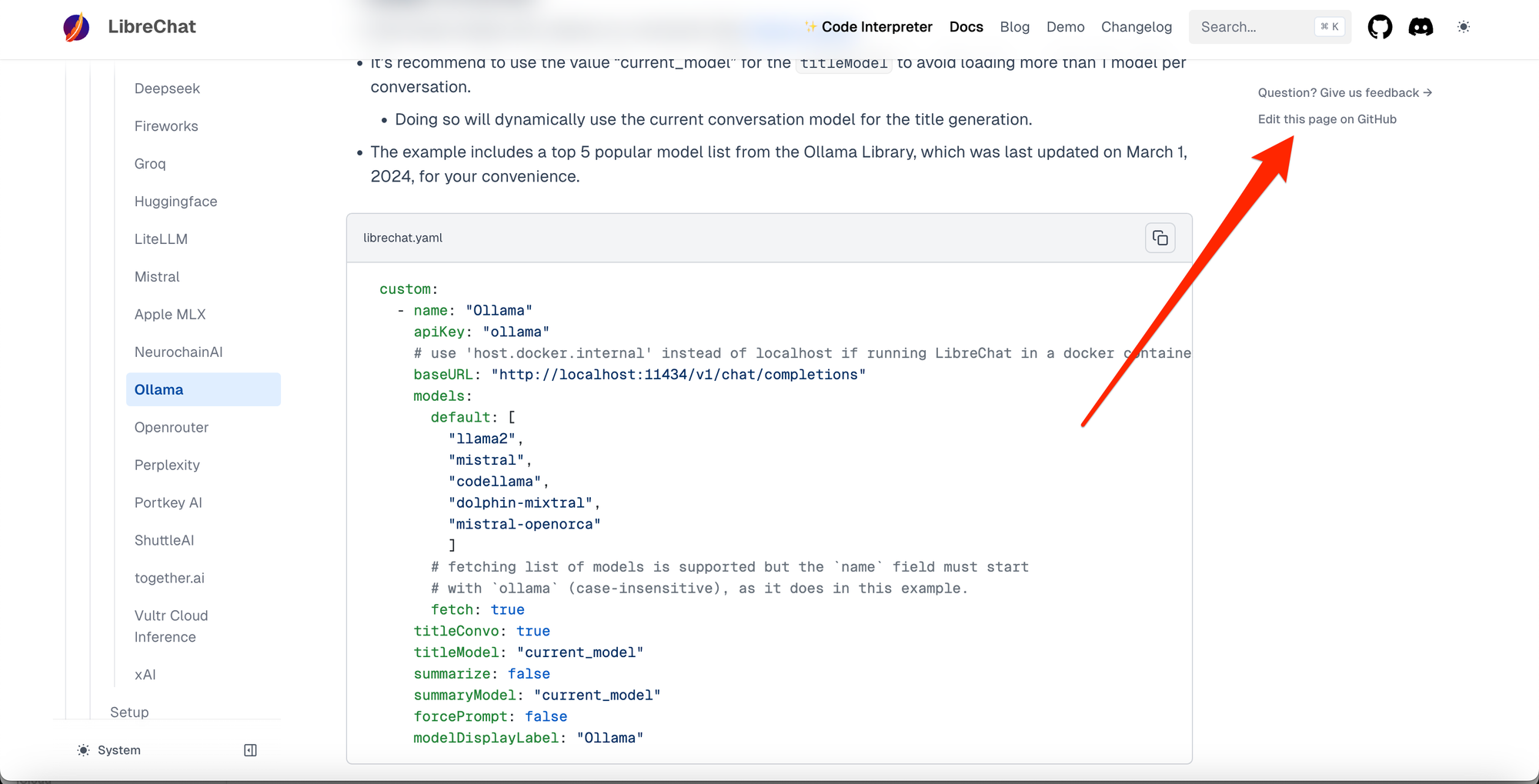

Step 1: Find the Documentation Repository and Folder

Most open-source projects also publish their documentation as open source. You can usually find its source by looking for a button to edit any page of the documentation; it leads you to the repository and the exact folder.
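Edit buttons on documentation sites hosted on GitHub typically link to a URL of the form `https://github.com/<owner>/<repo>/edit/<branch>/<path>`. The sketch below splits such a link into its parts. It assumes the branch name contains no `/` (otherwise the split is ambiguous), and the example URL in the usage note is hypothetical, not LibreChat's actual repository.

```python
from typing import NamedTuple
from urllib.parse import urlparse


class DocsLocation(NamedTuple):
    owner: str
    repo: str
    branch: str
    folder: str  # folder containing the edited page
    file: str    # path of the page itself, relative to the repo root


def parse_edit_url(url: str) -> DocsLocation:
    """Split a GitHub 'edit this page' URL of the form
    https://github.com/<owner>/<repo>/edit/<branch>/<path> into its parts.

    Assumption: the branch name contains no '/'.
    """
    parsed = urlparse(url)
    if parsed.netloc != "github.com":
        raise ValueError(f"not a github.com URL: {url}")
    parts = parsed.path.strip("/").split("/")
    if len(parts) < 5 or parts[2] != "edit":
        raise ValueError(f"not a GitHub edit URL: {url}")
    owner, repo, _, branch, *path = parts
    return DocsLocation(owner, repo, branch, "/".join(path[:-1]), "/".join(path))
```

For example, `parse_edit_url("https://github.com/example-org/example-docs/edit/main/pages/docs/configuration/mcp.mdx")` yields the folder `pages/docs/configuration`. A single page often lives in a subfolder, so the docs root may be one or more levels up.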

Here, I use the LibreChat docs as an example.

In that repository, you can then see the exact folder where the LibreChat docs are located. You could also look directly in the main application’s repository for a “docs” folder or something similar, but I find it easier to locate the documentation repo and its exact folder this way.
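Once you know the repository and folder, you do not need to clone the whole project. One way to fetch only that folder, not necessarily the one used in this article, is a shallow, blobless, sparse Git clone. The sketch below builds and runs the commands; it assumes Git 2.25 or later is installed, and the owner, repository, and folder names in the usage note are placeholders.

```python
import subprocess


def sparse_clone_commands(owner: str, repo: str, folder: str, dest: str) -> list[list[str]]:
    """Build git commands that check out only `folder` of a GitHub repository."""
    url = f"https://github.com/{owner}/{repo}.git"
    return [
        # Shallow (latest commit only), no file contents up front, sparse checkout enabled.
        ["git", "clone", "--depth", "1", "--filter=blob:none", "--sparse", url, dest],
        # Restrict the working tree to the documentation folder.
        ["git", "-C", dest, "sparse-checkout", "set", folder],
    ]


def sparse_clone(owner: str, repo: str, folder: str, dest: str) -> None:
    """Run the commands in order; raises subprocess.CalledProcessError if git fails."""
    for cmd in sparse_clone_commands(owner, repo, folder, dest):
        subprocess.run(cmd, check=True)
```

For example, `sparse_clone("example-org", "example-docs", "pages/docs", "docs-checkout")` should leave a `docs-checkout` directory with `pages/docs` checked out, without downloading the file contents of the rest of the repository.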