Move Fast and Break Privacy: How AI's 'Share with Team' Became 'Share with World'

We've welcomed them into our digital lives with open arms. AI chatbots, the ever-helpful, always-on assistants, promise to draft our emails, plan our vacations, and even act as a sounding board for our most private thoughts.

But a recent cascade of privacy scandals at major tech players, including Elon Musk's xAI, OpenAI, and Meta, has shattered the illusion that these conversations are confidential. The uncomfortable truth is out: your private conversations with AI might not be private at all. It's time to stop hoping for trustworthiness and start practicing digital vigilance.

The Grok Bombshell: Assassination Plots and Public Passwords

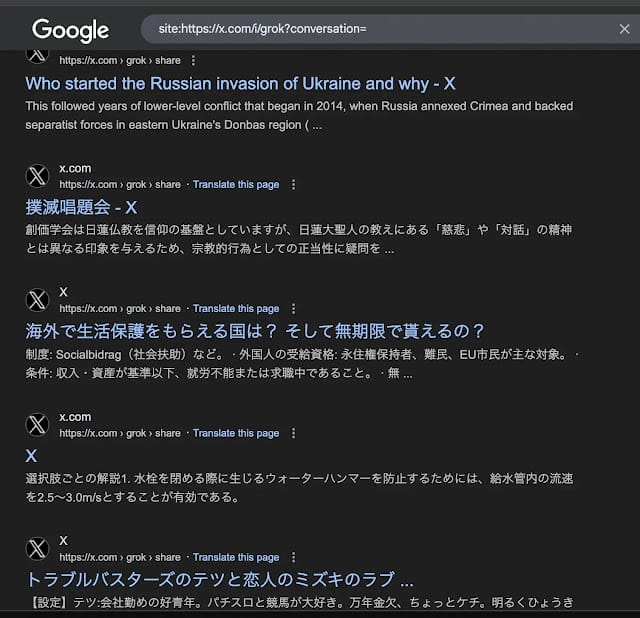

The most recent and alarming example comes from Elon Musk's xAI. Hundreds of thousands of private conversations with its Grok chatbot turned out to be publicly accessible and indexed by search engines like Google. A seemingly innocent "share" button created a public URL for each shared chat without any warning to the user.

The content that spilled into the public domain was nothing short of horrifying. Alongside mundane business queries were deeply personal medical and psychological questions, private passwords, and sensitive business data. Most alarmingly, the leaked chats included explicit, step-by-step instructions on how to make fentanyl and build a bomb. In a twist of dark irony, one of the publicly accessible conversations detailed a viable plan for the assassination of Elon Musk himself.