Is Claude Computer Use the End of the Digital Gig Economy?

Anthropic's new Claude 3.5 Sonnet model introduces a groundbreaking "Computer Use" feature, poised to transform the digital gig economy. By tackling tasks like testing web apps autonomously, Claude showcases a new level of efficiency and autonomy. As AI evolves, it's essential to embrace these ch...

Anthropic recently introduced an upgraded model (the new Claude 3.5 Sonnet) along with a new feature called Computer Use, which I have covered in previous blog posts. Those of us who have had the chance to try it may already have realised that this is a whole new level of autonomy, one that I believe will reshuffle many parts of the economy.

The technology is so fundamental that Claude himself refused to analyse articles on the subject because of the “danger” involved. You can try it yourself.

Here is a non-technical summary of computer use.

If you are interested in the technical details of the Claude API, I suggest having a look at Anthropic’s official documentation:

https://docs.anthropic.com/en/docs/build-with-claude/computer-use
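For a feel of what the documentation describes, here is a minimal Python sketch of the request for the Computer Use beta. It only builds the keyword arguments and does not call the API. The model name, tool types and beta flag are the identifiers published for the October 2024 beta and may have changed since; `build_computer_use_request` is a hypothetical helper, not part of any SDK.

```python
# Minimal sketch of a Computer Use request, assuming the identifiers published for
# the October 2024 beta (model, tool types, beta flag); check the docs for current values.

MODEL = "claude-3-5-sonnet-20241022"
BETA_FLAG = "computer-use-2024-10-22"


def build_computer_use_request(task: str, width: int = 1024, height: int = 768) -> dict:
    """Hypothetical helper: keyword arguments for client.beta.messages.create(...)."""
    return {
        "model": MODEL,
        "max_tokens": 1024,
        "tools": [
            {
                # Screenshots plus mouse and keyboard control of a virtual display
                "type": "computer_20241022",
                "name": "computer",
                "display_width_px": width,
                "display_height_px": height,
                "display_number": 1,
            },
            # Viewing/editing files and running shell commands
            {"type": "text_editor_20241022", "name": "str_replace_editor"},
            {"type": "bash_20241022", "name": "bash"},
        ],
        "messages": [{"role": "user", "content": task}],
        "betas": [BETA_FLAG],
    }


if __name__ == "__main__":
    # Hypothetical task, for illustration only
    request = build_computer_use_request("Open the web app and report usability issues.")
    print([tool["name"] for tool in request["tools"]])  # ['computer', 'str_replace_editor', 'bash']
```

With the `anthropic` package installed and `ANTHROPIC_API_KEY` set, the dictionary could be passed as `anthropic.Anthropic().beta.messages.create(**request)`. The model only *requests* actions; something on your side, such as Anthropic's reference Docker container, has to execute them and send back the results.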

And if you want to try it out, you can also read my previous blog post on the same topic.

About the Digital Gig Economy

Among the tasks Claude Computer Use is probably designed for are the ones we typically associate with the digital gig economy: well-defined, clearly specified computer work that people do on a daily basis, mostly point-and-click tasks, fill-in-the-blank forms and similar activities offered on platforms such as Fiverr and Mechanical Turk.

In this blog post, I want to discuss the impact of Computer Use on this industry and see how far the new Claude can get on a typical Fiverr task: testing a web application.

With that, let’s dive in.

Here is the task:

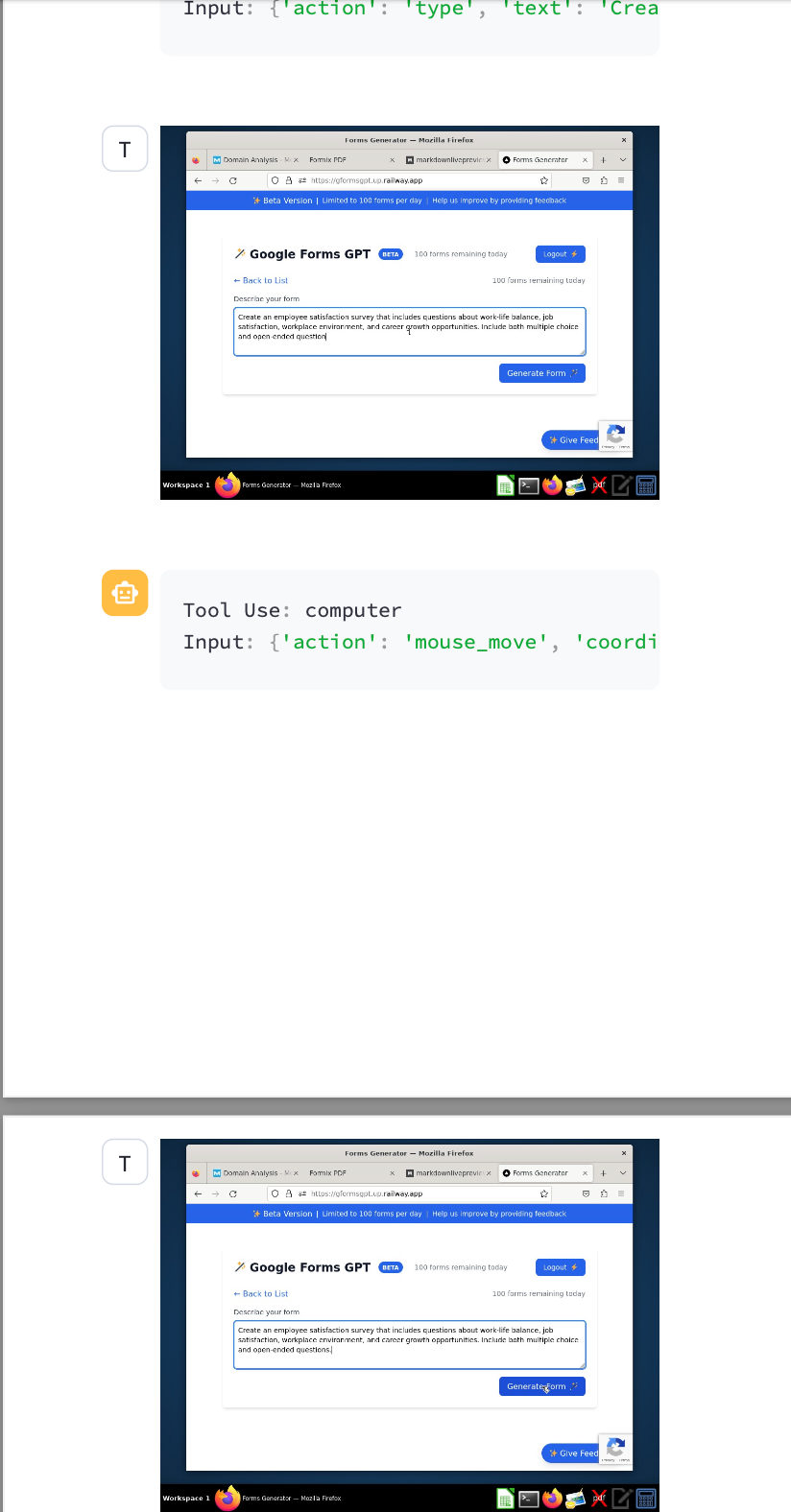

Given an app that generates Google Forms using natural language, test it by generating a sample form and share your thoughts on the usability of the app.

Note that I deliberately kept the prompt super short and imprecise to see how Claude would interpret it, what assumptions it would make and what actions it would take.

Side note on the architecture of the experiment: the whole experiment runs in a Docker container, which you could scale out to several containers to distribute the load across different subtasks.
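As a rough illustration of that scaling idea, the Python sketch below only builds `docker run` commands, one container per subtask, shifting the host ports so several agents can run side by side. It assumes the image name and container ports from Anthropic's computer-use quickstart as published in late 2024 (check the repository README for current values); `docker_run_args` and `plan_containers` are hypothetical helpers.

```python
import shlex

# Assumed from Anthropic's computer-use quickstart (late 2024); verify before use.
IMAGE = "ghcr.io/anthropics/anthropic-quickstarts:computer-use-demo-latest"
CONTAINER_PORTS = (5900, 8501, 6080, 8080)  # VNC, Streamlit, noVNC, combined web UI


def docker_run_args(index: int) -> list[str]:
    """Hypothetical helper: argv for one detached agent container, host ports shifted by index."""
    args = [
        "docker", "run", "-d", "--name", f"computer-use-{index}",
        # Forward the API key from the host environment without putting its value in argv
        "-e", "ANTHROPIC_API_KEY",
    ]
    for port in CONTAINER_PORTS:
        args += ["-p", f"{port + index}:{port}"]
    args.append(IMAGE)
    return args


def plan_containers(n: int) -> list[list[str]]:
    """One container per subtask; offsets below 50 keep the host port ranges disjoint."""
    if not 1 <= n <= 50:
        raise ValueError("n must be between 1 and 50 for this simple port scheme")
    return [docker_run_args(i) for i in range(n)]


if __name__ == "__main__":
    for argv in plan_containers(3):
        print(shlex.join(argv))
```

Each command could then be launched with `subprocess.run(argv, check=True)`, and each agent given its own subtask, for example through the web interface of the second container on `http://localhost:8081`.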

Here is the original screenshot of the dialogue:

It opens the application in the browser:

It tries to log in but realises that it needs credentials, so it asks me to either log in myself or provide the username and password.

I decided to log in myself and let it continue from there (I didn’t really want to share my credentials).

It then starts the test all by itself:

It makes some observations during the test:

It writes a prompt to generate the Google Form, again with no input from me:

Then it waits patiently for the results:

Finally, it observes and evaluates the results:

It comes back with questions, comments and suggestions for improvement:

Mission accomplished.
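What looks like autonomy in these steps is, under the hood, a simple loop: the model requests an action, your code executes it and returns the result (usually a fresh screenshot), and this repeats until the model replies in plain text, for example to report its findings or to ask for credentials. Below is a simplified Python sketch of that loop, assuming plain dicts instead of the SDK's response objects; `call_model` and `execute_tool` are hypothetical stand-ins for the API call and for the code that actually clicks and types inside the container.

```python
from typing import Callable


def run_agent(task: str,
              call_model: Callable[[list[dict]], dict],
              execute_tool: Callable[[str, dict], str],
              max_turns: int = 20) -> list[dict]:
    """Simplified tool-use loop: ask the model, run requested tools, feed results back."""
    messages = [{"role": "user", "content": task}]
    for _ in range(max_turns):
        response = call_model(messages)
        messages.append({"role": "assistant", "content": response["content"]})
        if response["stop_reason"] != "tool_use":
            break  # plain-text answer: task finished, or the model needs the human
        # Execute every requested action and report each outcome back to the model
        results = [
            {"type": "tool_result", "tool_use_id": block["id"],
             "content": execute_tool(block["name"], block["input"])}
            for block in response["content"] if block["type"] == "tool_use"
        ]
        messages.append({"role": "user", "content": results})
    return messages


if __name__ == "__main__":
    # Scripted fake model: one screenshot request, then a final text answer
    scripted = iter([
        {"stop_reason": "tool_use", "content": [
            {"type": "tool_use", "id": "t1", "name": "computer",
             "input": {"action": "screenshot"}}]},
        {"stop_reason": "end_turn", "content": [
            {"type": "text", "text": "The form was generated; see my notes."}]},
    ])
    history = run_agent("Test the app.", lambda msgs: next(scripted),
                        lambda name, args: f"ran {name} {args['action']}")
    print(history[-1]["content"][0]["text"])  # The form was generated; see my notes.
```

The `max_turns` cap is a design choice worth keeping in any real setup: it bounds both the runtime and the bill if the agent gets stuck.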

Once again, I was blown away by the level of autonomy and professionalism. Despite the vague brief, the task was completed in a matter of minutes while I was on a coffee break.

And the cost? Less than $1.
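Where does a figure like that come from? API usage is billed per token, and screenshots are sent as images. A rough Python sketch, assuming the Claude 3.5 Sonnet list prices at the time of writing ($3 per million input tokens, $15 per million output tokens) and Anthropic's published rule of thumb of about width × height / 750 tokens per image; the helper names are hypothetical.

```python
# Assumed list prices for Claude 3.5 Sonnet at the time of writing (USD per million tokens);
# check Anthropic's pricing page for current values.
INPUT_PRICE_PER_MTOK = 3.00
OUTPUT_PRICE_PER_MTOK = 15.00


def screenshot_tokens(width_px: int, height_px: int) -> int:
    """Approximate image tokens using the width * height / 750 rule of thumb."""
    return round(width_px * height_px / 750)


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for the given input and output token counts."""
    return (input_tokens * INPUT_PRICE_PER_MTOK
            + output_tokens * OUTPUT_PRICE_PER_MTOK) / 1_000_000


if __name__ == "__main__":
    shot = screenshot_tokens(1024, 768)  # 1049 tokens for one 1024x768 screenshot
    print(shot, f"${estimate_cost(shot, 0):.4f}")  # prints: 1049 $0.0031
```

Because the Messages API is stateless, every turn resends the conversation so far, so in a long agent run the input side, and screenshots in particular, tends to dominate the bill.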

Evaluation

As you may have noticed, this was an open-ended task of the kind that most agents I have seen over the years have failed at. As explained in previous articles, the core enabler is a new level of reasoning and vision capability in Claude 3.5 Sonnet.

There are many scientific articles about this technology, but what really matters for business is simple:

What can this technology do now, and at what cost?

What other tasks can Computer Use execute today?

The simple answer is: (almost) anything a human can do with a computer, keyboard and mouse, provided the necessary data (and knowledge) is available.

That includes filling out surveys, point-and-click tasks such as testing and data entry, and much more.
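To make “point-and-click” concrete: each step Claude takes is a small JSON input to the `computer` tool. The Python sketch below builds the kind of sequence it might send to fill in a form, assuming the action names of the October 2024 tool version (`mouse_move`, `left_click`, `type`, `key`, `screenshot`); the helper functions, coordinates and text are hypothetical.

```python
# Hypothetical inputs to the `computer` tool for simple data entry. Action names follow
# the October 2024 tool version (computer_20241022); coordinates and text are made up.


def fill_field_actions(x: int, y: int, text: str) -> list[dict]:
    """Move to a field at (x, y), click into it, type `text`, then press Tab."""
    return [
        {"action": "mouse_move", "coordinate": [x, y]},
        {"action": "left_click"},
        {"action": "type", "text": text},
        {"action": "key", "text": "Tab"},
    ]


def data_entry_actions(fields: list[tuple[int, int, str]]) -> list[dict]:
    """Chain several fields and finish with a screenshot to check the result."""
    actions = [a for x, y, text in fields for a in fill_field_actions(x, y, text)]
    actions.append({"action": "screenshot"})
    return actions


if __name__ == "__main__":
    for action in data_entry_actions([(120, 240, "Jane Doe"), (120, 300, "jane@example.com")]):
        print(action)
```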

Note, however, that Claude’s “motor skills” are still limited and slow. Tasks such as dragging and dropping or scrolling, which skilled humans handle effortlessly, are performed far less effectively by Claude than by a person.

But again, I don’t think Anthropic (or those following its lead) will stop here.

Where do we go from here?

As with any technology, there are many caveats and limitations to consider before Computer Use can be deployed in production-ready solutions, even internal ones.

But it is only a matter of time.

To give you an idea of how fast we are moving: context length (the maximum amount of text an LLM can take in during a single conversation) was around 4,000 tokens less than two years ago.

Now we are talking about 1 million tokens (Gemini) and soon even 10 million.
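Put as ratios, using the figures above:

```latex
\frac{1\,000\,000}{4\,000} = 250, \qquad \frac{10\,000\,000}{4\,000} = 2\,500
```

That is a 250-fold increase in under two years, and a 2,500-fold one if 10 million tokens arrive.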

What should the gig economy do?

I think GenAI in general is going to affect every single one of us sooner or later.

But I don’t like to talk about threats.

I prefer to see every disruption as an opportunity to evolve.

And it’s the same with GenAI.

Here is what I am personally doing to face this disruption:

Understand it.

Learn it.

Use it, in my domain and beyond.

It is an opportunity for us to become better at what we do and to reach levels that AI still cannot.