Is the Gemini Live API the End of Customer Support?

Explore Gemini 2.0's revolutionary Multimodal Live API, enabling real-time screen sharing and camera feeds for personalized assistance. This innovation promises to streamline software support, guiding users efficiently through complex applications. Discover how grounding documentation can enhance...

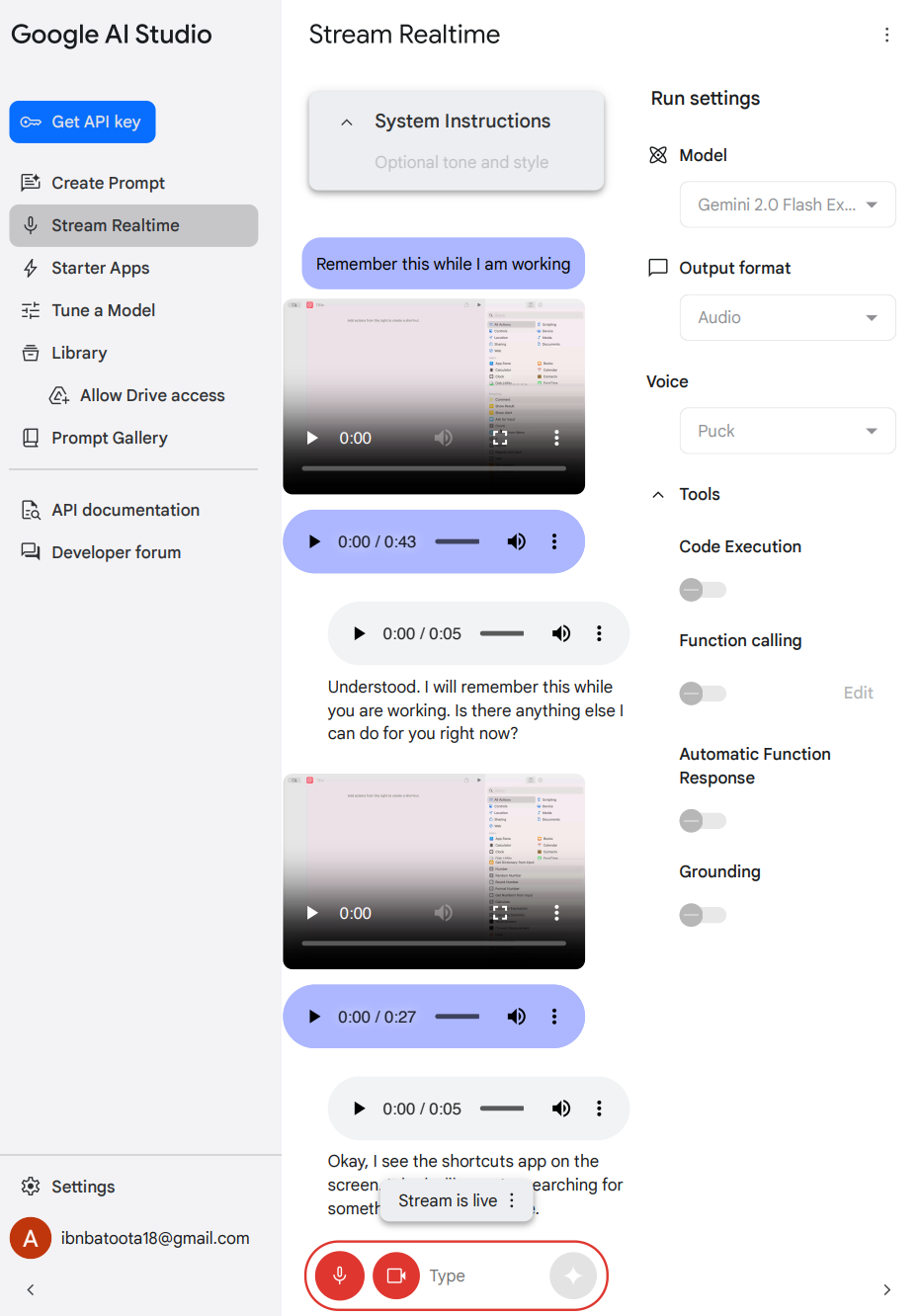

I recently covered a game-changing technology from Gemini: Multimodal Live API in Gemini 2.0. In essence, this feature allows users to share their screens or even live camera feeds of the physical world, receiving real-time assistance from Gemini as they interact with their screens or surroundings.

But why is this a big deal?

One of the most compelling applications is in application support.

Consider this: you've developed a complex software application and meticulously crafted comprehensive user manuals. Yet, you still encounter numerous hurdles:

- Users get stuck.

- Users don't utilize the app's full potential.

- Your support team is inundated with repetitive questions via phone and email.

This massive effort might be manageable for high-profit businesses, but it's often unsustainable for others.

In some cases, you might outsource customer support. However, this requires you to explain your processes and context in enough detail that the support team can tell user errors apart from genuine bugs.

This typically involves a tedious process of troubleshooting, capturing screenshots, and creating support tickets. While crash reports and anonymous analytics can help, they often fall short (e.g., when the app doesn't crash but fails to perform as expected).

This isn't limited to custom apps. It applies to most software interactions, including operating systems, OS-based applications, web apps, and more.

With Gemini's video streaming, this could soon be a thing of the past.

How? If the AI can see your screen, it can guide you, right?

Well, yes and no. For well-known software, like OS functionalities or settings, it likely possesses substantial knowledge and can provide effective navigation. But two main challenges remain:

- Outdated Information: The AI's knowledge depends on its last training date.

- Lack of Data: For custom software or apps lacking public documentation within the AI's training dataset, assistance might be limited or even inaccurate, potentially leading to hallucinations and incorrect instructions.

However, if the app is popular and well-documented publicly, the AI can likely already assist you in navigating it.

Fortunately, there's a solution for the other cases: Grounding.

Grounding involves providing the AI with access to the application's documentation as a support reference. The AI then leverages this document to guide users through complex software.
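As a rough illustration of what grounding by attachment can look like, here is a minimal sketch that wraps documentation text and the user's goal into a single instruction string. The function name, the wording of the instruction, and the FAQ text are hypothetical placeholders, not part of Gemini or Gogoforms; in practice you would pass the resulting text to whichever model client you use.

```python
def build_grounded_instruction(doc_text: str, user_goal: str) -> str:
    """Combine documentation and the user's goal into one instruction string.

    Hypothetical helper: the prompt wording is an assumption, not an
    official template from any vendor.
    """
    return (
        "You are a support assistant for the application described below.\n"
        "Answer only from the documentation. If it does not cover the "
        "question, say so instead of guessing.\n\n"
        f"--- DOCUMENTATION ---\n{doc_text.strip()}\n--- END DOCUMENTATION ---\n\n"
        f"User goal: {user_goal.strip()}"
    )


# Placeholder FAQ text, made up for this example.
faq = "Q: How do I create a form with AI?\nA: Enter a prompt, then click Generate."
instruction = build_grounded_instruction(faq, "Create a customer feedback form")
print(instruction)
```

Telling the model to admit when the documentation does not cover a question is one simple way to reduce the hallucination risk mentioned earlier, though it does not eliminate it.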

But can't users just consult the documentation themselves?

Yes, but for dense and complex documentation, the AI can process it much faster than a human. With vector-based solutions, it can search hundreds of thousands or even millions of documents and pinpoint relevant articles within seconds, then use those articles to advise stuck users. For documentation that fits within the model's context window, simply attaching it to the chat is even easier.
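To make the vector-based idea concrete, here is a toy sketch of retrieval by similarity. It assumes a bag-of-words count vector as a stand-in for a real embedding model, and the three help articles are invented for illustration. A production setup would typically use learned embeddings and a vector database, but the ranking logic follows the same shape.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: str, articles: list[str], k: int = 2) -> list[str]:
    """Return the k articles most similar to the query."""
    q = embed(query)
    ranked = sorted(articles, key=lambda art: cosine(q, embed(art)), reverse=True)
    return ranked[:k]


# Hypothetical help articles, made up for this example.
articles = [
    "To generate a form, type a prompt and click the Generate button.",
    "Billing: invoices are emailed at the start of each month.",
    "To share a form, copy its link from the toolbar.",
]
results = top_k("how do I generate a form from a prompt", articles, k=1)
print(results[0])
```

The retrieved articles would then be attached to the conversation as grounding context, so the model only needs to read the few passages that matter rather than the whole knowledge base.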

Let's see this in action with two scenarios: a custom app and a well-known Apple app (Apple Shortcuts).

Test 1: Custom App - Gogoforms

Gogoforms generates Google Forms from prompts.

Goal: Create a Google Form for customer feedback using AI.

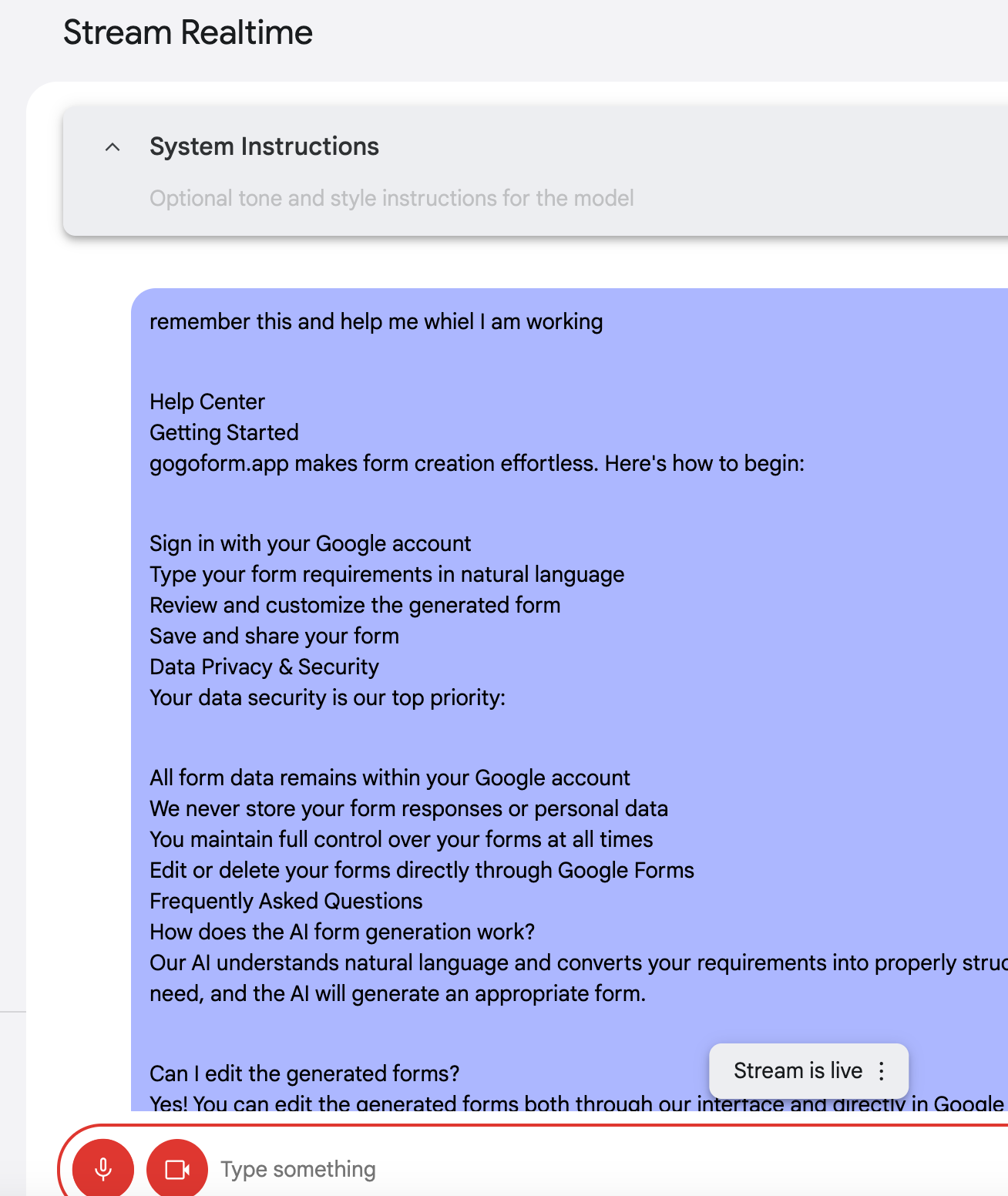

First, I provided Gemini with the FAQ and requested assistance in creating a form using AI.

It confirmed receiving the information.

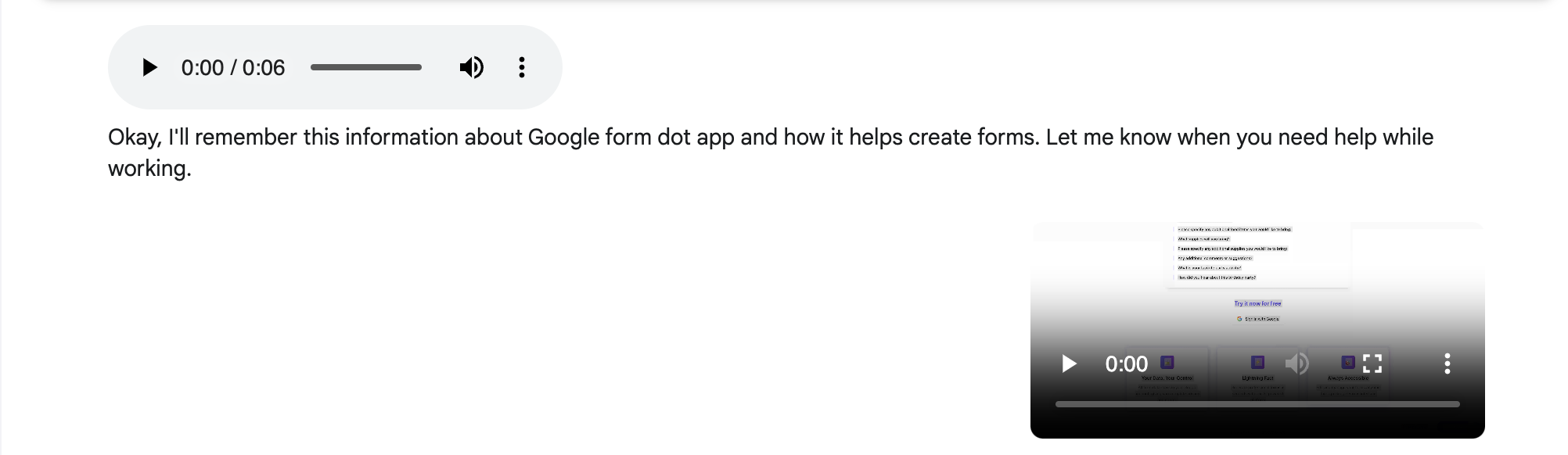

- It first asked what I wanted to create. I responded, "a feedback form."

- It provided a sample prompt.

- I entered the prompt.

- It instructed me to click the "Generate" button.

- ...

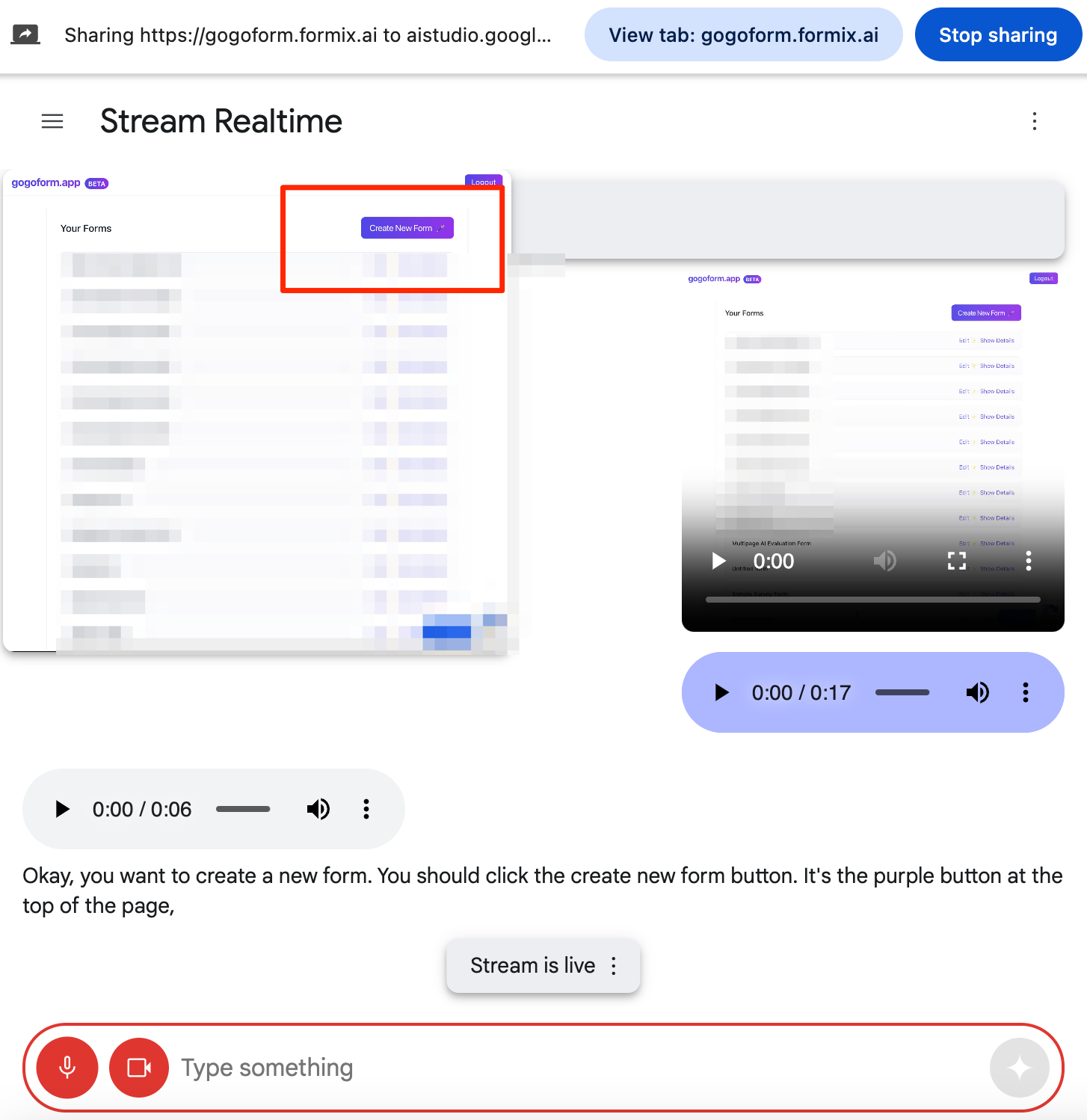

Finally, I had my form!

This is a simple scenario, but the same approach can be applied to countless other use cases. Ground the AI in your documentation, communicate your ultimate goal, and let it guide you along the way.

Test 2: Apple Shortcuts

Goal: Create an HTML to Markdown converter workflow.

In this case, I expected Gemini to already understand the core concepts of Apple Shortcuts.

I simply stated my goal.

It navigated me through the UI, not just with text, but with real-time voice interaction. It's truly mind-blowing to experience.

First, it instructed me to search for "Markdown" in the action list (exactly what I would have done).

Then, it told me which action to select.

Finally, it guided me to drag it to the workflow area.

And so on...

Mission accomplished! Thanks, Gemini.

Since I primarily used voice this time, I was curious if I could get a transcript. Unfortunately, it couldn't summarize the discussion for some reason.

Key Takeaways:

We've made incredible progress, and this new video streaming feature offers tangible value right now. However, it's crucial to acknowledge its limitations and address data privacy concerns.

Remember, this is just a demonstration in the playground environment. Integrating these features via the API allows you to customize the UI/UX for customer support or end-users as needed.

Here's the documentation for the Multimodal Live API: https://ai.google.dev/gemini-api/docs/models/gemini-v2?authuser=1

And more on the real-time API from OpenAI: https://openai.com/index/introducing-the-realtime-api/

What's Next?

These new technologies not only help users navigate complex tools but can also significantly boost workflow efficiency when the AI is grounded in the right data.

The efficiency gains could be comparable to using GPS navigation versus stopping to ask people on the street for directions.