Is AWS Too Complicated for Startups?

Working with Large Language Models (LLMs) reveals a clear disparity in onboarding complexity among providers. AWS Bedrock's intricate setup contrasts sharply with the simplicity of Google Gemini, emphasizing a need for streamlined access. The evolution of cloud services should cater to startups, ...

I've been working extensively with Large Language Models (LLMs) for the past couple of years, and in that time I've used almost every provider on the market: OpenAI, Anthropic, Together, Fireworks, Azure OpenAI, Google Gemini, and, of course, AWS Bedrock. I've also been an AWS user for over a decade. Still, one thing became strikingly clear when I started adopting AWS Bedrock for one of our clients: while AWS Bedrock (and AWS in general) is incredibly powerful, its onboarding process is significantly more complex than that of other LLM providers.

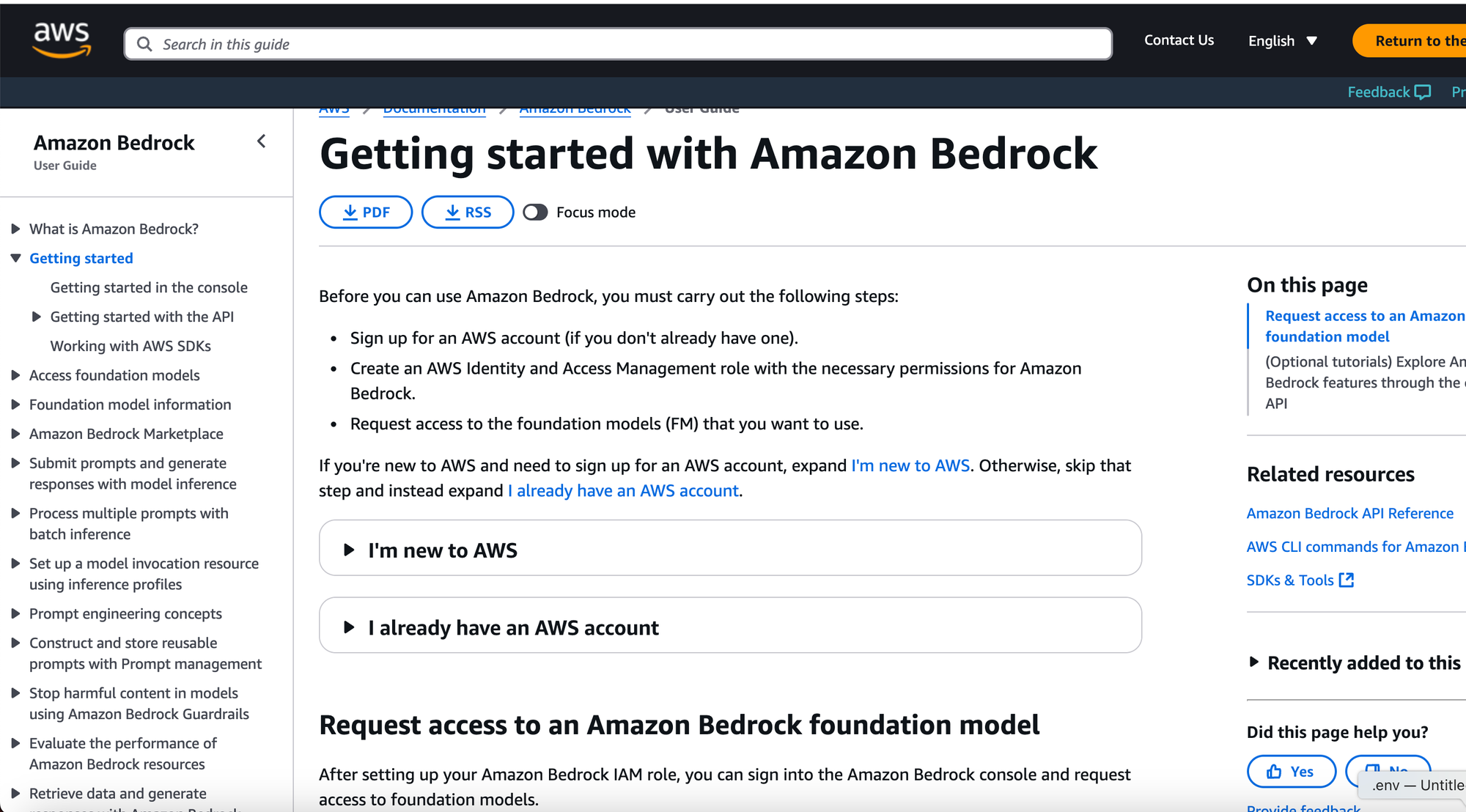

Let's say you just want to experiment with a new model, like Nova, on AWS. Here's a simplified version of the steps involved:

- Set up the proper IAM role and permissions.

- Generate an access key.

- Choose the foundation models you want to use.

- Submit an access request.

- Wait for approval.

- Open the playground and select the model.

- Finally, you can start chatting.
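To give a sense of what the first step alone involves, here is a minimal sketch of an IAM policy that allows invoking a single Bedrock model. The region and the Nova model ID are assumptions for illustration (check the Bedrock console for the exact values in your account), and the policy does not replace the separate model access request.

```python
import json

# Assumptions (not from the article): the region and the Nova model ID
# below are illustrative; confirm the exact values in the Bedrock console.
REGION = "us-east-1"
MODEL_ID = "amazon.nova-lite-v1:0"


def bedrock_invoke_policy(region: str, model_id: str) -> dict:
    """Build an IAM policy document that allows invoking one foundation model."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeSingleModel",
                "Effect": "Allow",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                # Foundation-model ARNs leave the account field empty.
                "Resource": f"arn:aws:bedrock:{region}::foundation-model/{model_id}",
            }
        ],
    }


if __name__ == "__main__":
    # Print the JSON you would attach to a role or user.
    print(json.dumps(bedrock_invoke_policy(REGION, MODEL_ID), indent=2))
```

You would still need to attach this policy to a role or user, create credentials, and request access to the model before the first call succeeds.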

That's a lot of hoops to jump through just to say "Hello" to an LLM! In contrast, many other providers have adopted a much simpler approach.

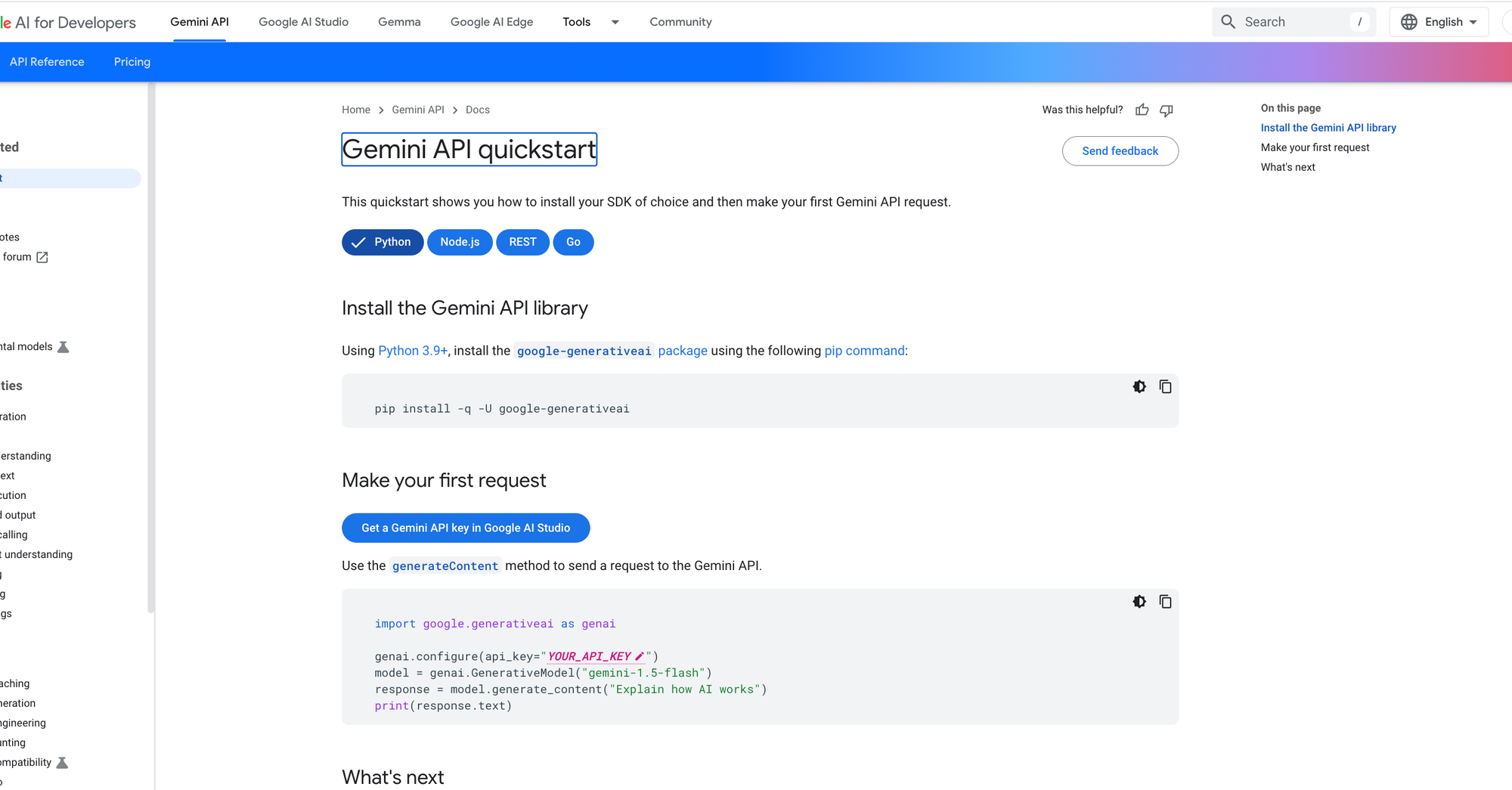

For example, with Google Gemini:

- Open the playground.

- Get an API key.

- Choose a model.

- Start chatting.

In fact, Google even lets you use the Gemini API for free in many cases, which is amazing for experimentation and quick prototyping.
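In code, the Gemini flow comes down to a single HTTP request authenticated by the API key. The sketch below uses only the Python standard library and the public `generateContent` REST endpoint; the model name and the `GEMINI_API_KEY` environment variable are assumptions chosen for this example.

```python
import json
import os
import urllib.request

# Assumptions: the model name used below is illustrative, and the key is read
# from a GEMINI_API_KEY environment variable (a name chosen for this sketch).
API_ROOT = "https://generativelanguage.googleapis.com/v1beta"


def build_gemini_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a generateContent request."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{API_ROOT}/models/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("GEMINI_API_KEY")
    if key:
        request = build_gemini_request(key, "gemini-1.5-flash", "Hello")
        with urllib.request.urlopen(request) as response:
            reply = json.load(response)
        # The first candidate's first part holds the generated text.
        print(reply["candidates"][0]["content"]["parts"][0]["text"])
    else:
        print("Set GEMINI_API_KEY to send the request.")
```

There is no role, policy, or access request anywhere in that path: the key is the only credential.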

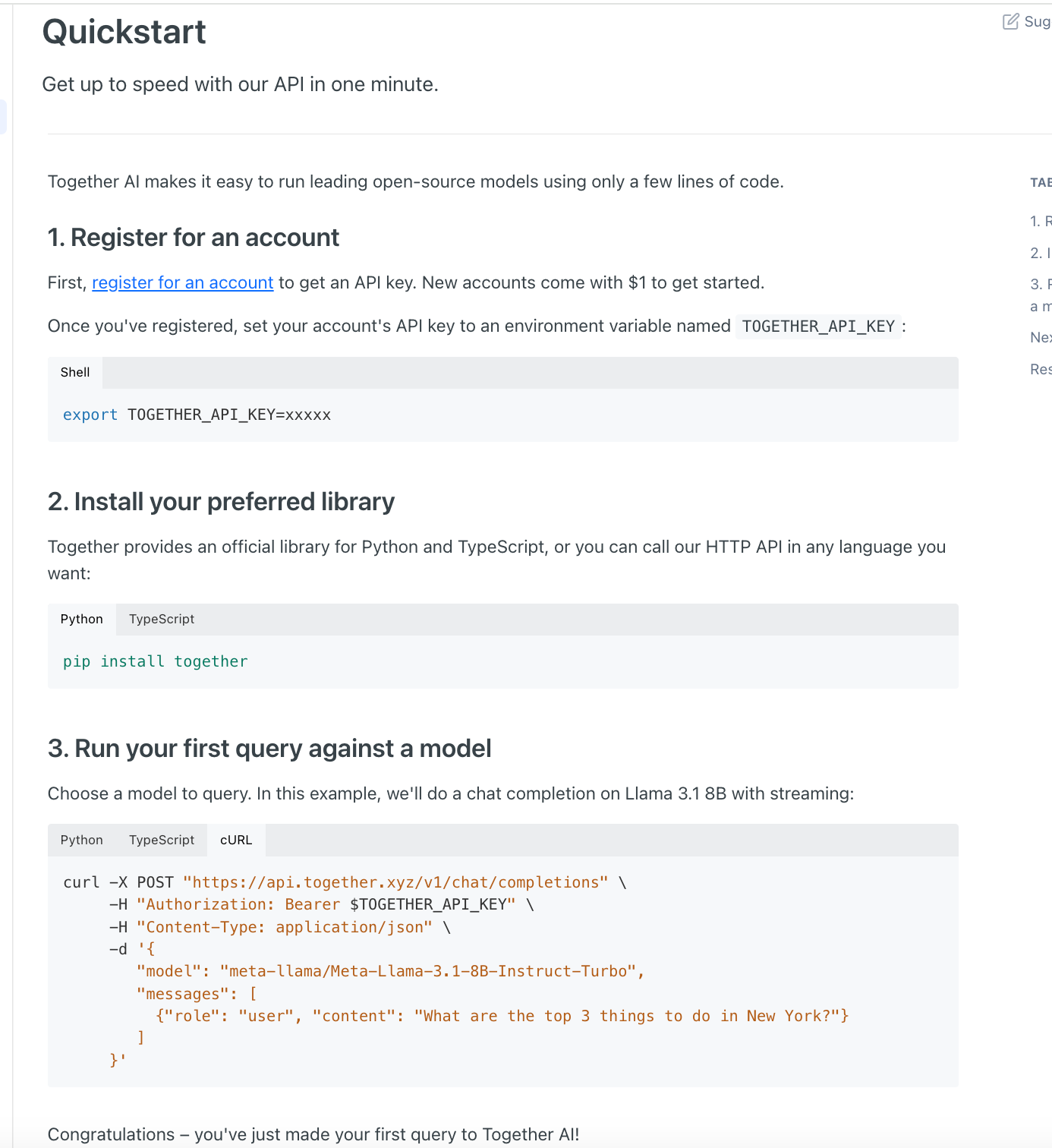

Similarly, on Together.ai, the process is just as straightforward.

So, Why the Discrepancy?

The LLM market essentially breaks down into two main categories:

- Big Players (Cloud Providers): Companies like AWS, Google, and Azure offer LLMs as part of a much larger suite of cloud services.

- Specialized LLM Providers: Companies like Together, Fireworks, OpenAI, and Anthropic focus primarily or exclusively on providing LLM access.

The big cloud providers, having been around longer and offering hundreds of services, have built their infrastructure around robust security and access management. This means that using an LLM requires the same setup as any other service: IAM roles, service configurations, etc. While necessary for enterprise-level security, this process is simply too cumbersome for a quick start.

"But Gemini is also a cloud provider," you might say. And you'd be right. However, Google has created a dedicated landing page and separate service – Google AI Studio – that focuses solely on LLMs. This eliminates the need to set up extra permissions. You essentially create an API key that has access only to the LLM service. Instead of navigating hundreds of roles or creating custom ones, you simply click "Generate API Key."

Google AI Studio Landing Page

This is a very smart move by Google, and Azure AI Studio adopts a similar approach. For enterprises, the situation might be different, but for startups or proof-of-concept projects, the AWS process is just too tedious.

What About Other Services?

This principle applies beyond LLMs. Consider web hosting, for example. If you want to deploy a simple static website, you might hesitate to go through the involved process of setting up IAM roles and configuring a web server from scratch. Instead, you'd prefer a provider that offers a streamlined service that handles the underlying complexity for you.

And Finally, The Pricing

For many startups, cost control is incredibly important. They need to "fail fast" and often don't have the resources to build perfectly scalable and secure solutions from the outset. AWS and most large cloud providers bill on a pay-as-you-go basis and typically don't offer a prepaid model the way specialized LLM providers (OpenAI, etc.) do.

If you underestimate the load and the credit card linked to your AWS account has no spending cap, you could be in for a nasty surprise. With providers like OpenAI, the worst that can happen is that you hit your usage limit and get a notification. You certainly don't want that to happen and lose customers, but the risk is much smaller than an open-ended bill.
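If your provider has no prepaid option, you can approximate one on the client side. The following is a minimal sketch, not a feature of any provider: `BudgetGuard`, `BudgetExceeded`, and the per-token prices are hypothetical names and numbers made up for this example.

```python
from dataclasses import dataclass


class BudgetExceeded(RuntimeError):
    """Raised when a call would push estimated spend past the cap."""


@dataclass
class BudgetGuard:
    cap_usd: float          # hard limit, playing the role of a prepaid balance
    spent_usd: float = 0.0  # running total of estimated spend

    def charge(self, input_tokens: int, output_tokens: int,
               usd_per_1k_input: float, usd_per_1k_output: float) -> float:
        """Record the estimated cost of one call, or refuse it."""
        cost = ((input_tokens / 1000) * usd_per_1k_input
                + (output_tokens / 1000) * usd_per_1k_output)
        if self.spent_usd + cost > self.cap_usd:
            raise BudgetExceeded(
                f"cap ${self.cap_usd:.2f} would be exceeded "
                f"(spent ${self.spent_usd:.2f}, next call ${cost:.2f})"
            )
        self.spent_usd += cost
        return cost


if __name__ == "__main__":
    # Hypothetical prices; real per-token prices vary by model and provider.
    guard = BudgetGuard(cap_usd=1.00)
    try:
        for call in range(1, 6):
            guard.charge(2000, 500, usd_per_1k_input=0.10, usd_per_1k_output=0.40)
            print(f"call {call}: spent ${guard.spent_usd:.2f}")
    except BudgetExceeded as err:
        print(f"stopped: {err}")
```

A guard like this only covers traffic that passes through your own code, so it complements a provider-side limit rather than replacing one.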

Wrap-Up

Big players like AWS have been leading the cloud market for a long time, and they offer a comprehensive range of services, including AI and LLMs. However, if they want to better serve startups (many of whom only need serverless functions and LLMs), they need to become more use-case focused. They should tailor their product offerings to make bootstrapping projects much smoother, ideally as easy as creating an API key and hitting "Deploy" or "Chat." Simplifying the onboarding process and offering more predictable pricing models would go a long way in making LLMs more accessible to a wider audience, especially those who are just starting out.