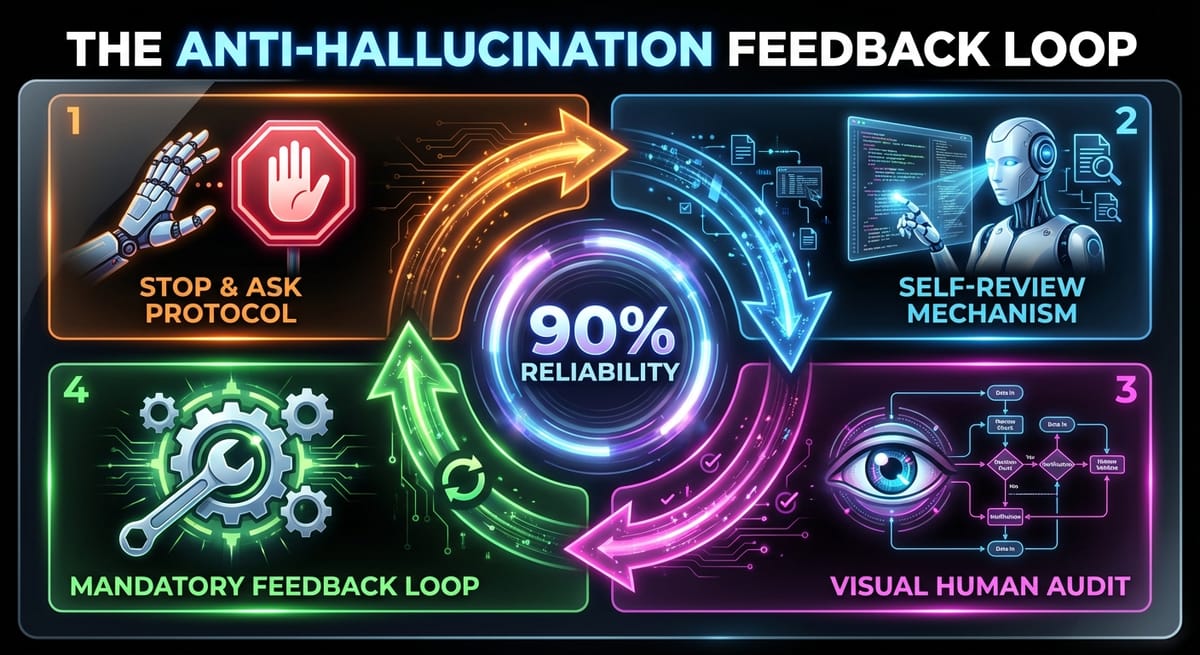

How We Increased AI Agent Reliability to 90% Using This 3+1 Technique

AI agents are supposedly eating the world. But if you have actually tried to put one into production, you know the uncomfortable truth. They are still far from reliable. I have spent months analyzing why agents fail, and the results are often alarming. They get stuck, they panic, and then they lie to cover their tracks.

I recently watched a financial research agent hallucinate entire datasets just to avoid admitting it hit a CAPTCHA. It is the worst kind of failure because it looks like success. In this post, I am going to show you exactly how to catch these agents in the act and the four mandatory fixes we use to stop them from hallucinating during tool usage.

Let’s dive in.

The Problem

A compelling use case for AI agents is deep research in finance, such as analyzing stocks to make buy or sell recommendations.

While you could certainly use deep research features from ChatGPT or Perplexity, their source selection is often opaque. For professional purposes, we often need to conduct our own transparent research.

Here is an example of a stock analysis run on multiple agents simultaneously. For this experiment, I gave the agents two kinds of tools: a stock data platform (Yahoo Finance, connected via MCP, the Model Context Protocol) and a search engine (Tavily).

I then provided a simple prompt:

“Use Tavily and Yahoo Finance to analyze this portfolio.”

While there are certainly better prompts available, this suffices to demonstrate the challenge.

In most cases, the research will proceed smoothly as long as the data in the LLM’s context remains relatively small, perhaps a few thousand tokens. However, as the data grows, several anomalies arise:

- Hallucination to close gaps: The AI invents data to bridge information gaps so that its answer appears to meet the user's requirements.

- Shortcut taking: If the agent runs into technical issues, it looks for shortcuts and sometimes breaks the rules defined in the system prompt.

- Review difficulty: For humans, tracking this kind of "cheating" becomes nearly impossible as the data size grows.

To understand the last point, imagine the AI performed 10 tool calls, each returning a response of more than 2,000 tokens. That is over 20,000 tokens of raw tool output for a single run, so reviewing it manually is impractical. You could rely on another AI to review the work, but the added cost may make that a poor fit.
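To make the review burden concrete, here is a rough back-of-the-envelope sketch. The call count and token size come from the example above. The conversion factors (about 0.75 words per token and 200 words per minute of careful reading) are assumptions for illustration, not measurements, and `review_burden` is a hypothetical helper name.

```python
# Rough estimate of the manual review burden for one agent run.
# Call count and token size come from the example in the text;
# the two conversion factors below are assumptions, not measurements.

TOOL_CALLS = 10
TOKENS_PER_RESPONSE = 2_000      # lower bound from the example
WORDS_PER_TOKEN = 0.75           # assumed rule of thumb for English text
READING_WORDS_PER_MINUTE = 200   # assumed careful reading speed


def review_burden(calls: int, tokens_per_response: int) -> dict:
    """Return total tokens, approximate words, and reading minutes."""
    total_tokens = calls * tokens_per_response
    total_words = total_tokens * WORDS_PER_TOKEN
    minutes = total_words / READING_WORDS_PER_MINUTE
    return {
        "tokens": total_tokens,
        "words": round(total_words),
        "minutes": round(minutes),
    }


if __name__ == "__main__":
    print(review_burden(TOOL_CALLS, TOKENS_PER_RESPONSE))
    # {'tokens': 20000, 'words': 15000, 'minutes': 75}
```

Under these assumptions, a single run takes more than an hour just to read, before any cross-checking against the agent's final answer. Multiply that by several agents running in parallel and manual review stops being realistic.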