How to fine-tune ChatGPT - A real-world example

Ever felt overwhelmed by too much information when asking a simple question? In this guide, learn how to fine-tune GPT-4o-mini for more tailored and conversational responses. With just a few steps, you can adjust the model to focus on your unique situation, making AI consultations feel more perso...

Have you ever asked GPT a simple question and gotten a ton of information, much of which is irrelevant or simply overwhelming?

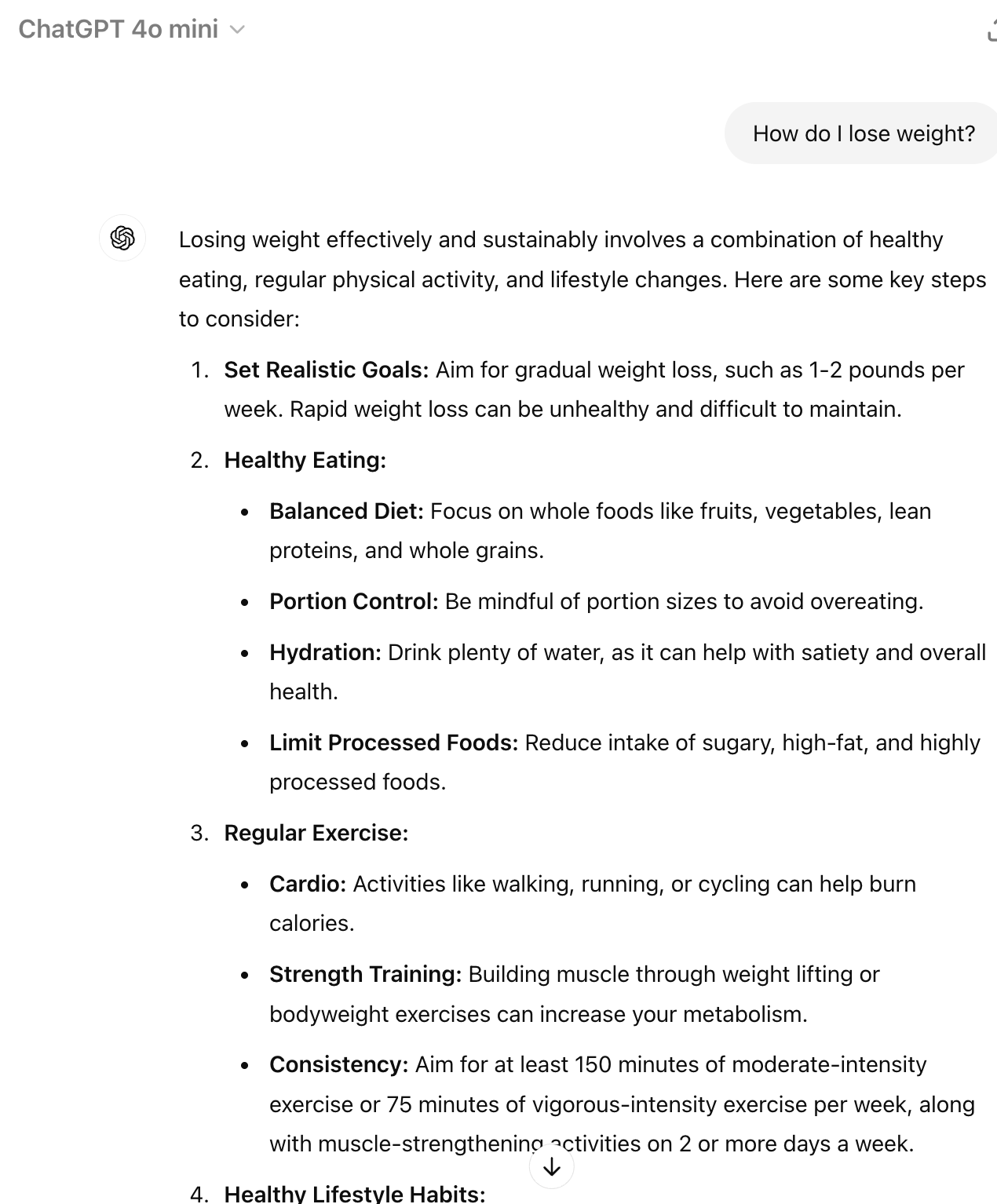

Here’s an example:

You: "How do I lose weight?"

I do not know how you feel when you receive this amount of information, but I personally feel overwhelmed😄

Instead, what I expect from a knowledgeable human consultant is that they first narrow down the question before making recommendations.

Maybe something like this:

"Losing weight can be tough. Are you looking for diet tips, or maybe some exercise routines? Let me know so I can give you the best advice for your situation."

This is something we can improve with fine-tuning techniques.

In this guide I'll show you how to fine-tune gpt-4o-mini to do just that in four simple steps.

To demonstrate the concept, I used a small dataset that I created using ChatGPT. In a real-world scenario, you would use a larger dataset that reflects your domain and conversational patterns.
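For orientation, OpenAI's chat fine-tuning takes a JSONL file in which each line is one conversation stored under a `messages` key. The sketch below builds one such line from the weight-loss example above. The system prompt and the helper names `make_example` and `to_jsonl` are my own illustrative assumptions, not taken from the actual dataset.

```python
import json

# Hypothetical system prompt; the real dataset may use a different one or none.
SYSTEM_PROMPT = "You are a consultant who asks clarifying questions before giving advice."


def make_example(user_text, assistant_text, system_text=SYSTEM_PROMPT):
    """Return one training conversation in the chat fine-tuning format."""
    return {
        "messages": [
            {"role": "system", "content": system_text},
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": assistant_text},
        ]
    }


def to_jsonl(examples):
    """Serialize conversations as JSONL: one JSON object per line."""
    return "\n".join(json.dumps(ex, ensure_ascii=False) for ex in examples) + "\n"


example = make_example(
    "How do I lose weight?",
    "Losing weight can be tough. Are you looking for diet tips, or maybe some "
    "exercise routines? Let me know so I can give you the best advice for your situation.",
)
jsonl_text = to_jsonl([example])
```

Each additional conversation becomes one more line in the file, so growing the dataset is just a matter of appending more examples in the same shape.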

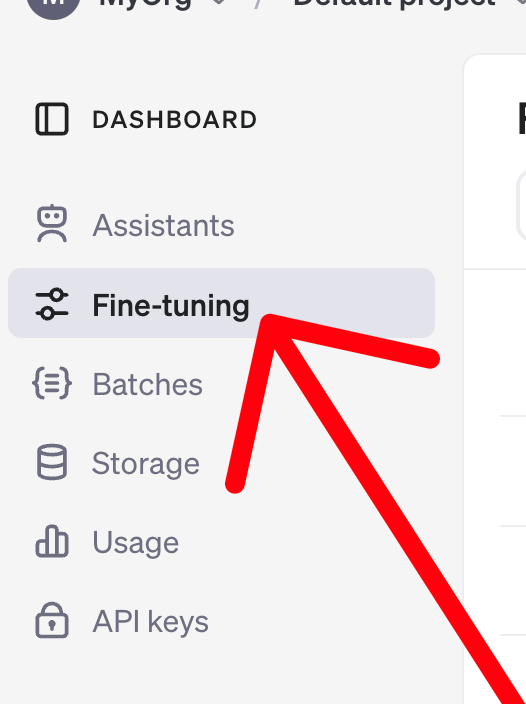

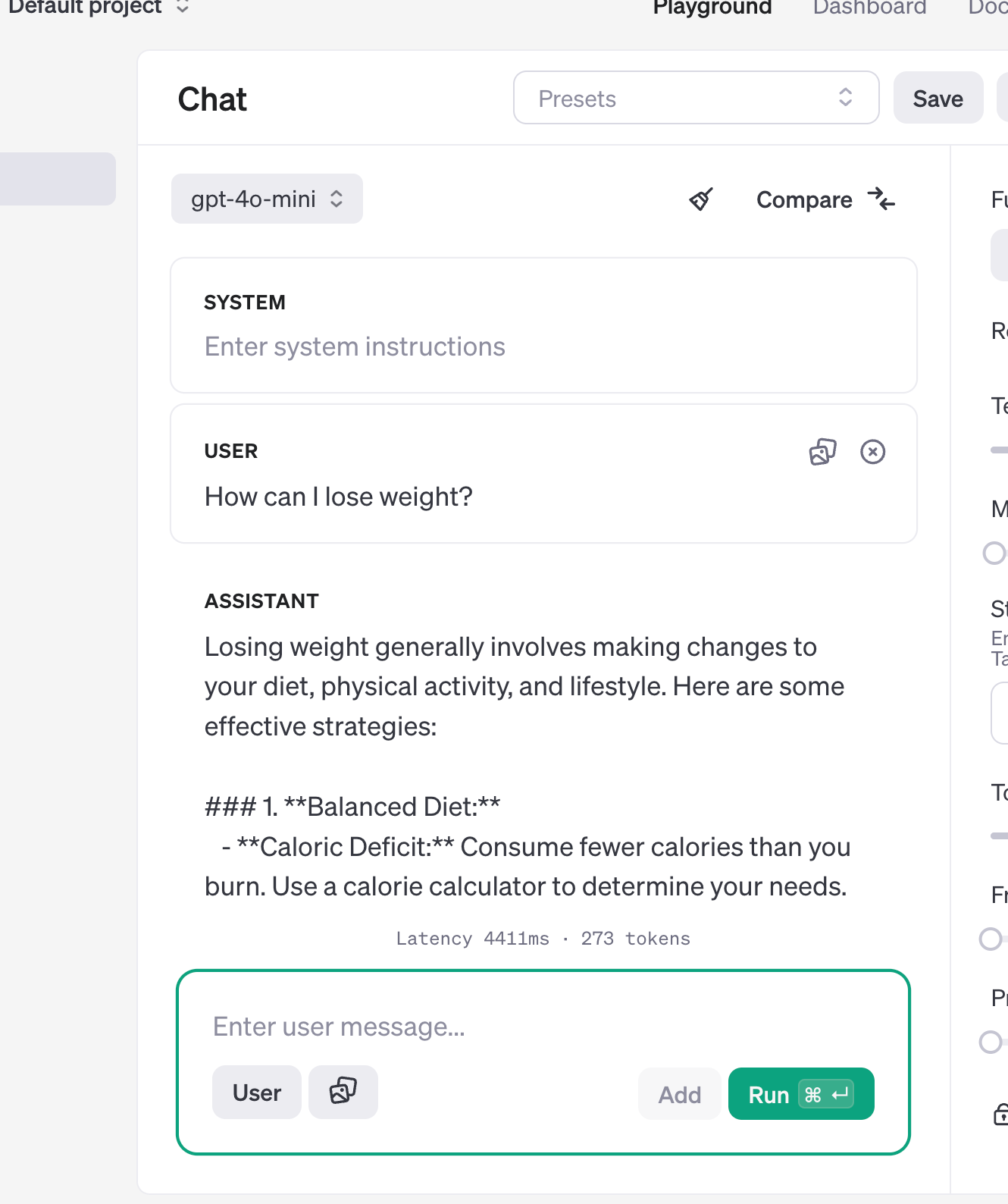

Step 1: Open the fine-tuning dashboard and hit "Create"

https://platform.openai.com/assistants

Step 2: Download the Dataset

https://huggingface.co/datasets/airabbitX/gpt-consultant/resolve/main/gpt_consultant.jsonl
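If you prefer to fetch the file from a script, a minimal sketch could look like the one below. It also runs a quick sanity check before you upload anything. The check assumes the file follows the chat format (a `messages` list per line, using only `system`, `user` and `assistant` roles); I have not verified every line of the dataset against that assumption, and `download_dataset` and `check_jsonl` are hypothetical helper names.

```python
import json
import urllib.request

DATASET_URL = (
    "https://huggingface.co/datasets/airabbitX/gpt-consultant/"
    "resolve/main/gpt_consultant.jsonl"
)
# Assumption: the dataset only uses these three roles.
ALLOWED_ROLES = {"system", "user", "assistant"}


def download_dataset(url=DATASET_URL, path="gpt_consultant.jsonl"):
    """Save the dataset to a local file and return its path."""
    urllib.request.urlretrieve(url, path)
    return path


def check_jsonl(text):
    """Return a list of problems found; an empty list means the file looks usable."""
    problems = []
    for number, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            problems.append(f"line {number}: not valid JSON")
            continue
        messages = record.get("messages") if isinstance(record, dict) else None
        if not isinstance(messages, list) or not messages:
            problems.append(f"line {number}: missing 'messages' list")
            continue
        roles = [m.get("role") for m in messages if isinstance(m, dict)]
        if len(roles) != len(messages) or not set(roles) <= ALLOWED_ROLES:
            problems.append(f"line {number}: unexpected role or message shape")
        elif "assistant" not in roles:
            problems.append(f"line {number}: no assistant reply to learn from")
    return problems


# Usage (needs network access):
#   path = download_dataset()
#   with open(path, encoding="utf-8") as f:
#       print(check_jsonl(f.read()) or "looks fine")
```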

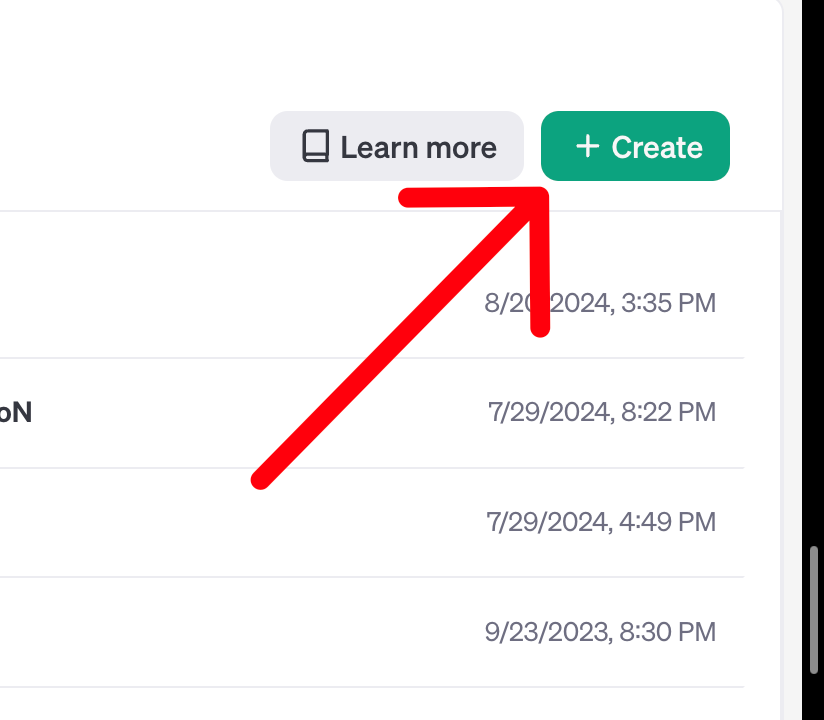

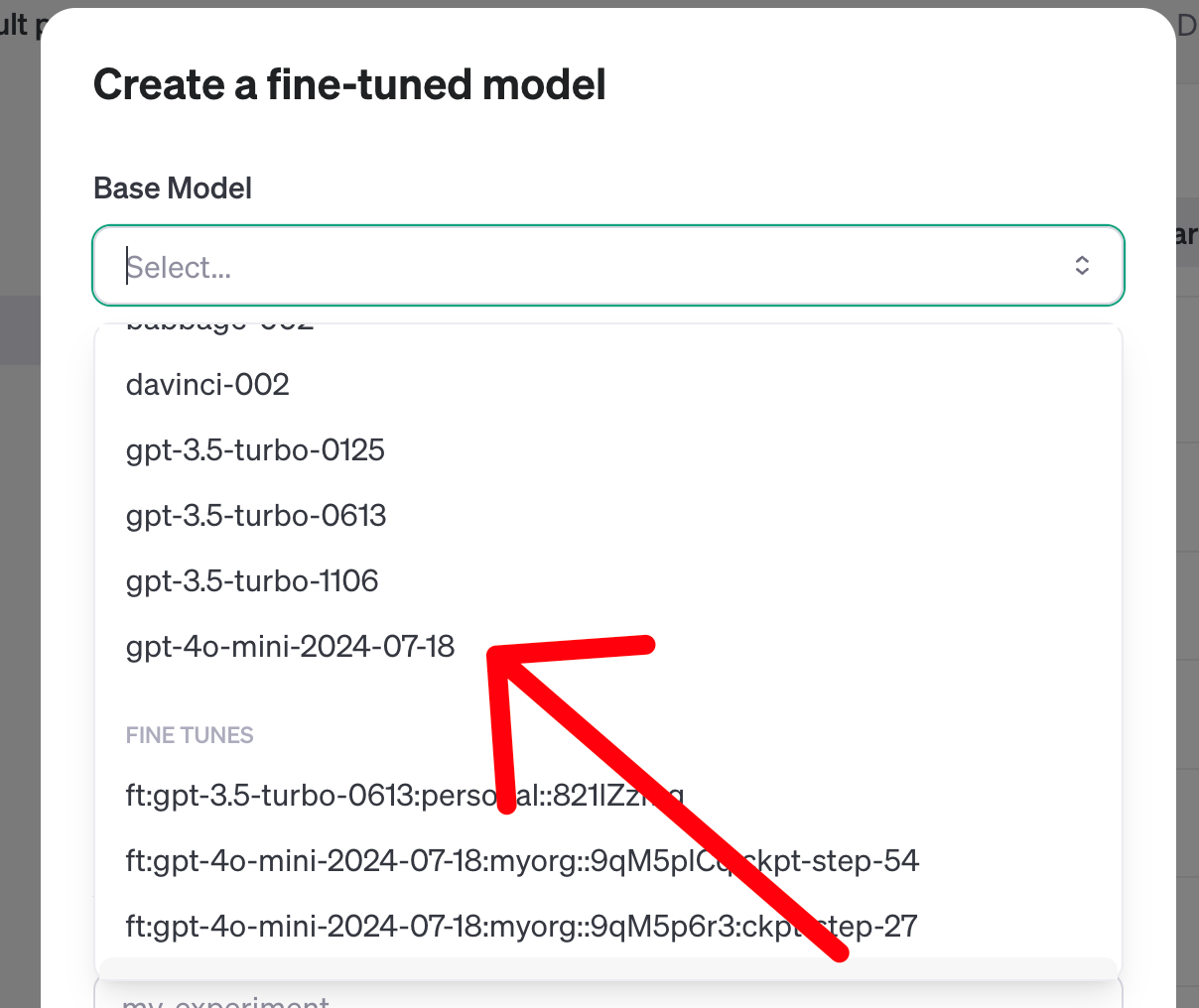

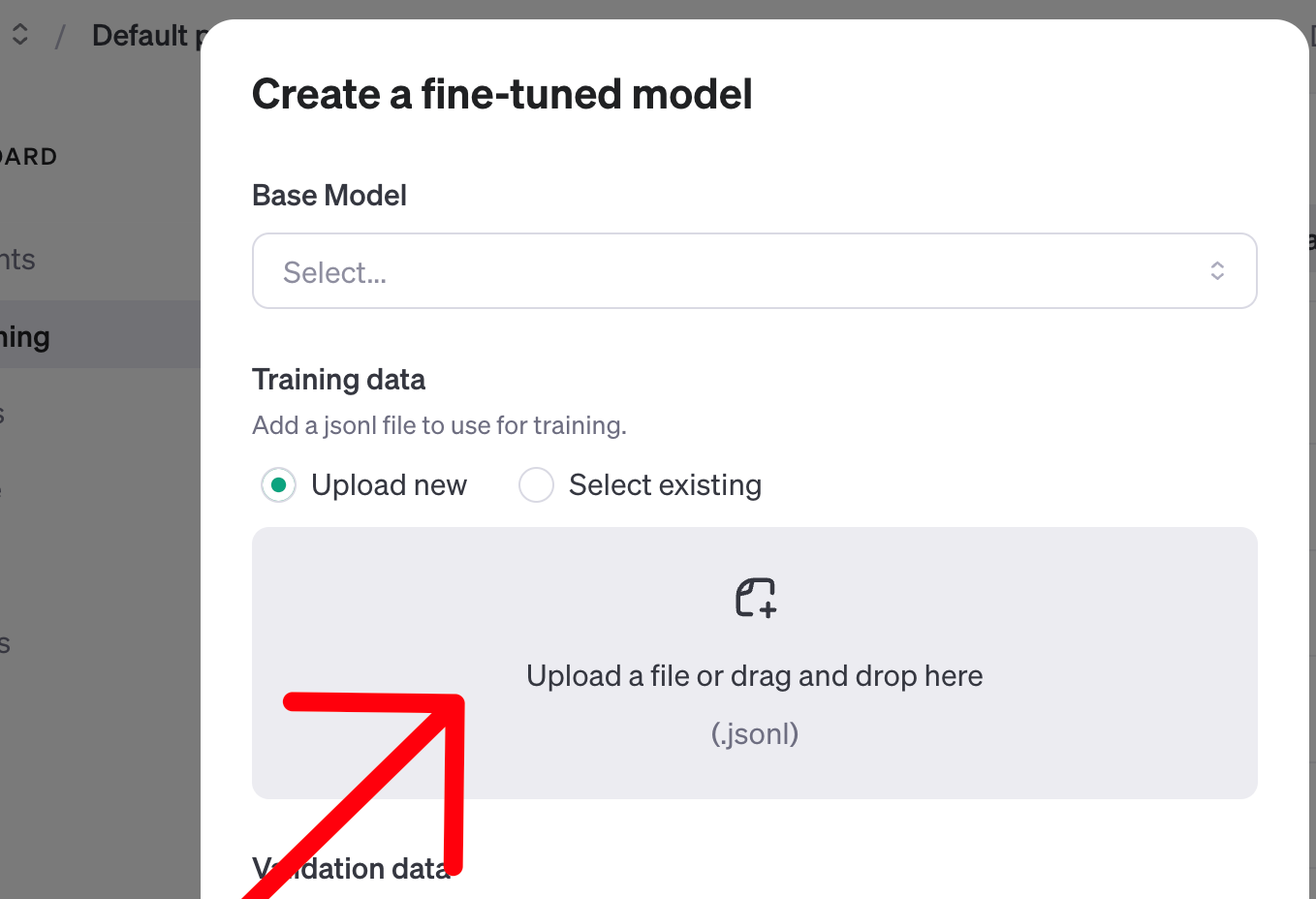

Step 3: Create a new tuning job with the downloaded dataset

Wait until the fine-tuning is complete.
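The same step can also be done from code instead of the dashboard. The following is a minimal sketch, assuming the official `openai` Python package (v1 or later) and an `OPENAI_API_KEY` in your environment. The snapshot name in `BASE_MODEL` is an assumption; use whichever gpt-4o-mini snapshot the dashboard offers you for fine-tuning.

```python
import time

# Assumption: a gpt-4o-mini snapshot that is available for fine-tuning.
BASE_MODEL = "gpt-4o-mini-2024-07-18"


def start_fine_tune(client, dataset_path, model=BASE_MODEL):
    """Upload the JSONL dataset and start a fine-tuning job; returns the job id."""
    with open(dataset_path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(training_file=uploaded.id, model=model)
    return job.id


def wait_for_job(client, job_id, poll_seconds=30):
    """Poll until the job finishes; returns the name of the fine-tuned model."""
    while True:
        job = client.fine_tuning.jobs.retrieve(job_id)
        if job.status == "succeeded":
            return job.fine_tuned_model
        if job.status in ("failed", "cancelled"):
            raise RuntimeError(f"Fine-tuning job {job_id} ended with status {job.status}")
        time.sleep(poll_seconds)


# Usage (needs the openai package and an API key):
#   from openai import OpenAI
#   client = OpenAI()
#   job_id = start_fine_tune(client, "gpt_consultant.jsonl")
#   print(wait_for_job(client, job_id))
```

Passing the client in as a parameter keeps the helpers easy to try out with a stand-in object before spending credits on a real job.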

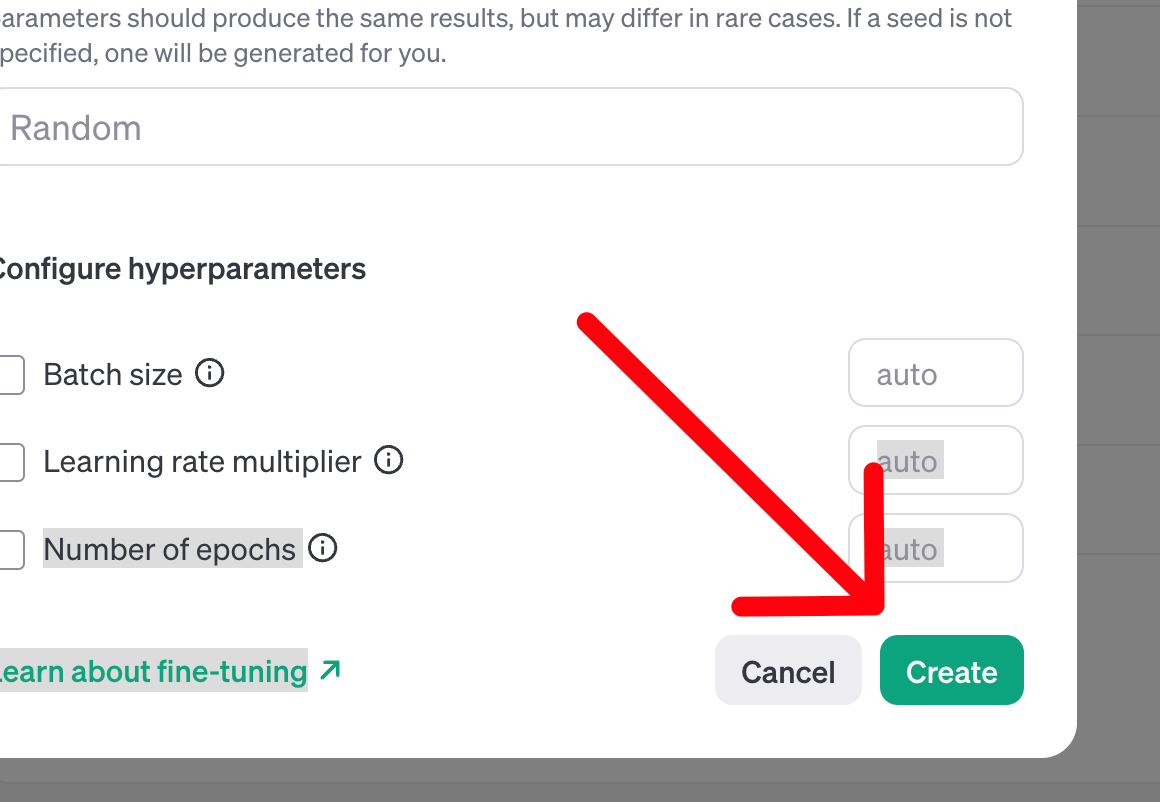

If all goes well, you should see something like this:

Note the name of the fine-tuned model; we will use it in the next step.

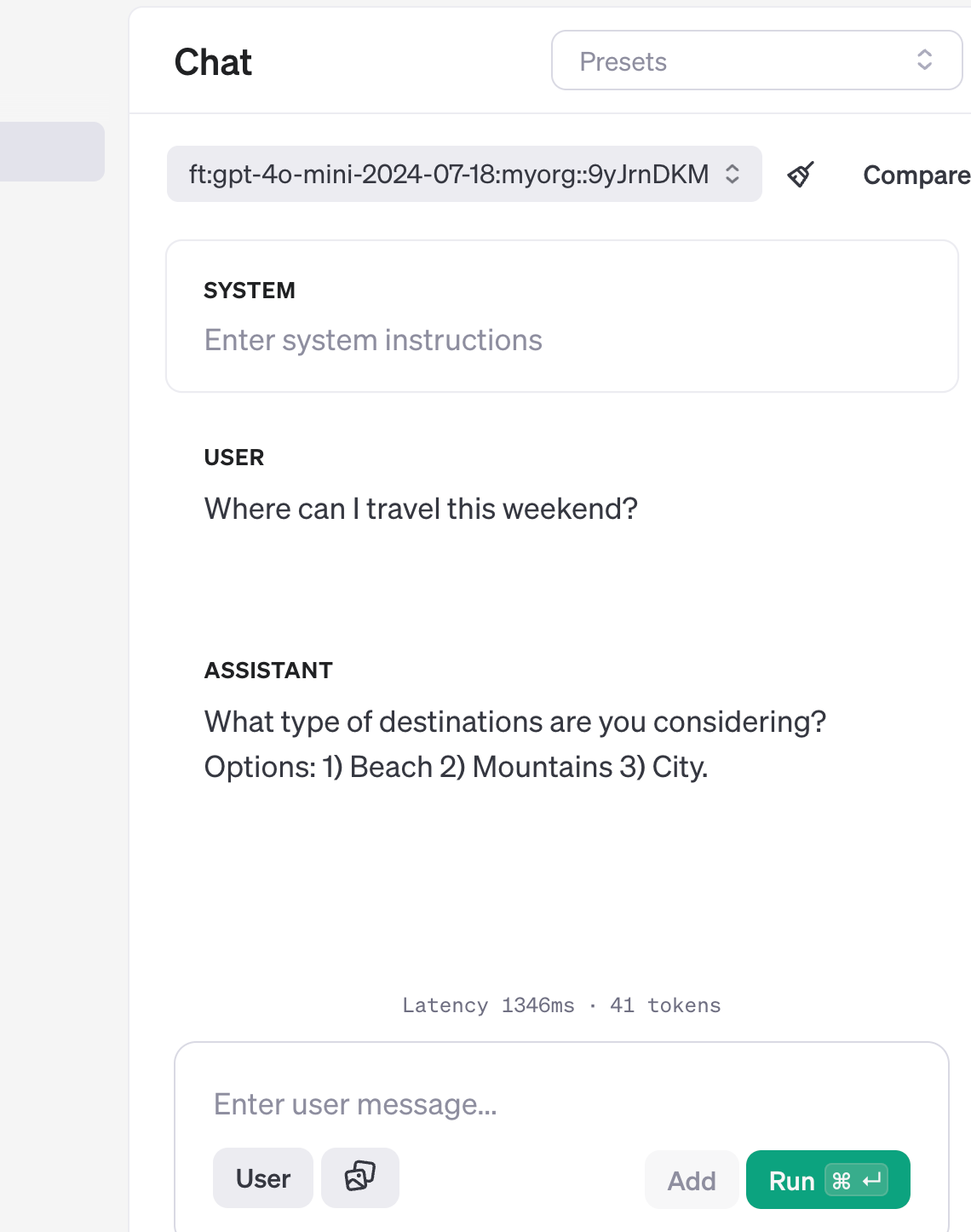

Step 4: Test the fine-tuned model

https://platform.openai.com/playground/chat?models=gpt-4o

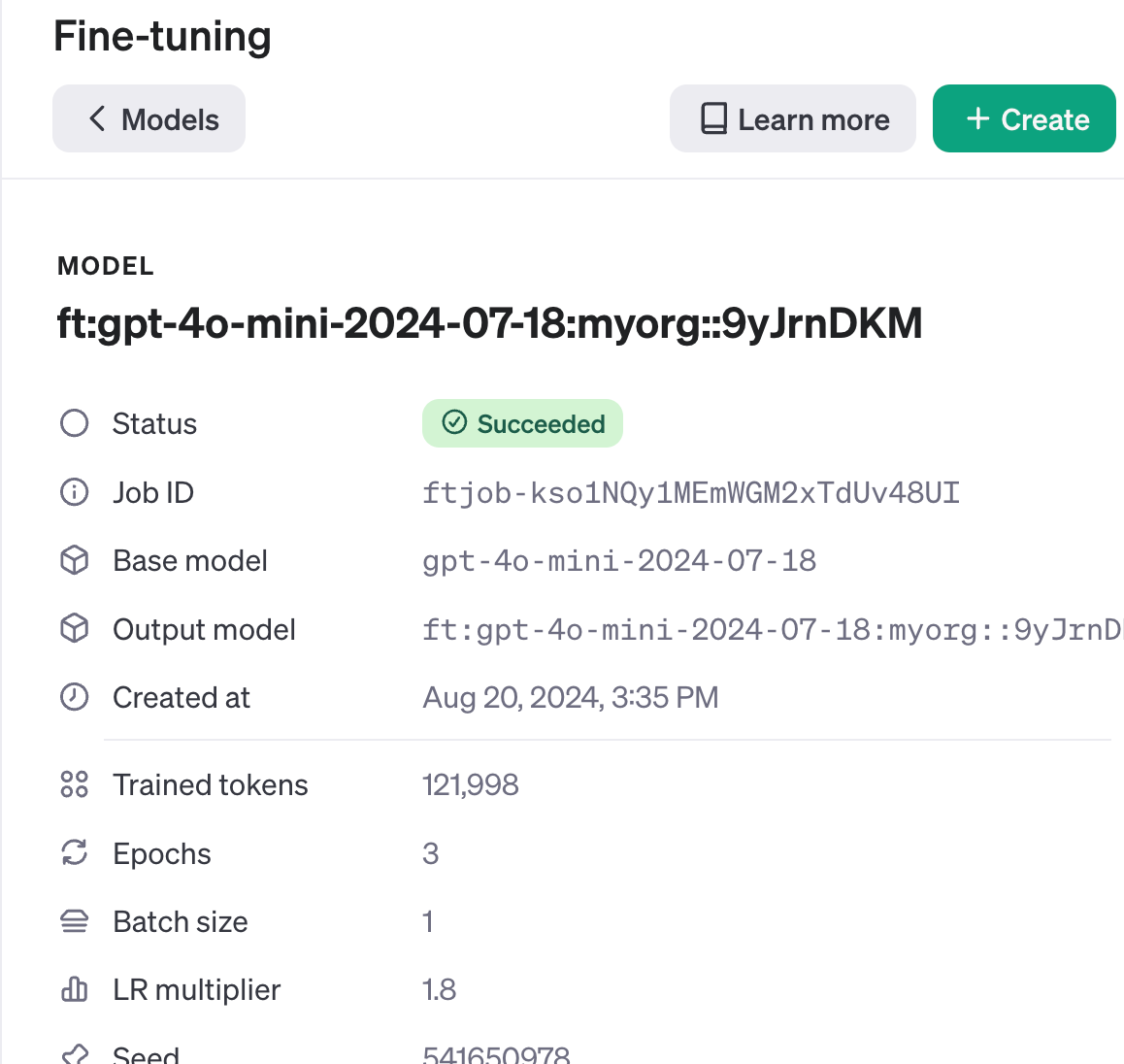

Let's see how the base model responds before fine-tuning:

Then test with the fine-tuned model by selecting the model from the previous step.

As you can see, it first tries to figure out your background and situation before jumping to conclusions, and not just for health concerns.
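If you would rather compare the two models from a script than in the playground, a rough sketch is shown below. It assumes the official `openai` Python package (v1 or later); `ask` and `compare` are hypothetical helper names, and the fine-tuned model name is the one you noted in Step 3.

```python
QUESTION = "How do I lose weight?"


def ask(client, model, question=QUESTION):
    """Send a single user message to `model` and return the reply text."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": question}],
    )
    return response.choices[0].message.content


def compare(client, base_model, fine_tuned_model, question=QUESTION):
    """Return both replies keyed by model name for a side-by-side look."""
    return {m: ask(client, m, question) for m in (base_model, fine_tuned_model)}


# Usage (needs the openai package and an API key):
#   from openai import OpenAI
#   client = OpenAI()
#   replies = compare(client, "gpt-4o-mini", "<your fine-tuned model name>")
#   for model, text in replies.items():
#       print(f"--- {model} ---\n{text}\n")
```

Try a few questions outside the health domain as well to see whether the clarifying-question behavior carries over.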

Conclusion

By fine-tuning GPT-4o-mini, we can make the model more conversational and behave more like an advisor than a Wikipedia machine😄

We can likely improve the model's dialog further by adjusting hyperparameters such as the number of epochs and the batch size, and by adding more conversations to the dataset.
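As a rough illustration of where those settings go when you create a job from code: the sketch below assumes the official `openai` Python package and an SDK version that accepts a top-level `hyperparameters` argument on job creation. The helper names are hypothetical, and any concrete values you pass are things to experiment with, not recommendations.

```python
def build_hyperparameters(n_epochs="auto", batch_size="auto", learning_rate_multiplier="auto"):
    """Validate and return a hyperparameters dict; "auto" lets the service choose."""
    params = {
        "n_epochs": n_epochs,
        "batch_size": batch_size,
        "learning_rate_multiplier": learning_rate_multiplier,
    }
    for name, value in params.items():
        if value != "auto" and not (isinstance(value, (int, float)) and value > 0):
            raise ValueError(f"{name} must be 'auto' or a positive number, got {value!r}")
    return params


def start_tuned_job(client, training_file_id, model, **overrides):
    """Create a fine-tuning job with explicit hyperparameters."""
    return client.fine_tuning.jobs.create(
        training_file=training_file_id,
        model=model,
        hyperparameters=build_hyperparameters(**overrides),
    )


# Usage (illustrative values only):
#   job = start_tuned_job(client, uploaded_file_id, "gpt-4o-mini-2024-07-18", n_epochs=3)
```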

Of course, this only scratches the surface of what is possible. I hope this tutorial has shown what fine-tuning ChatGPT is and how it can be used to improve the model's conversation style and output.

If you are also interested in fine-tuning open source models, please check out my other tutorial.