The End of Nvidia’s AI Chip Monopoly?


The AI chip market is heating up, and Nvidia is facing stiff competition from a new player in the game: Groq, a Silicon Valley startup that's making waves with its Language Processing Units (LPUs).

What Makes Groq's LPUs Special?

Groq's LPUs are designed for inference, with an emphasis on high throughput and low latency, which suits applications that need real-time responses.

One key differentiator is that the LPU uses on-chip SRAM rather than the HBM found in Nvidia's GPUs. SRAM is up to 100 times faster than HBM, which makes it well suited to inference. It is also more expensive and has lower capacity, which limits the size of the models each chip can hold.

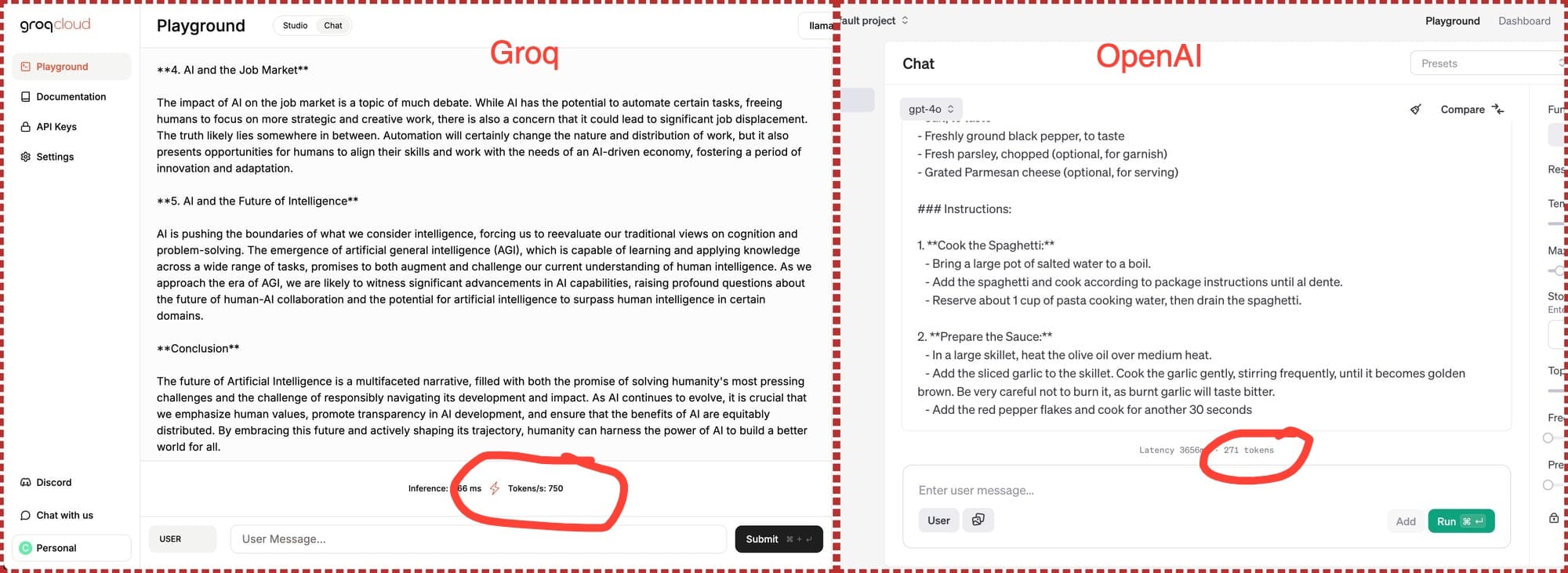

Groq's LPUs post impressive performance numbers. They can generate 500-750 tokens per second, compared with around 40 tokens per second for GPT-3.5 in ChatGPT. That is roughly 12 to 19 times faster, which is what makes them attractive for real-time applications.
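To put those throughput figures in perspective, here is a minimal sketch that converts tokens per second into the time a user would wait for a full response. The rates are the ones quoted above; the 500-token response length and the function names are assumptions chosen for illustration, and real-world latency also depends on factors this ignores, such as network overhead and time to first token.

```python
def response_time_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream num_tokens at a steady generation rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


def speedup(fast_rate: float, slow_rate: float) -> float:
    """How many times faster fast_rate is than slow_rate."""
    return fast_rate / slow_rate


# Rates quoted in the article; the response length is an assumed example.
RESPONSE_TOKENS = 500
RATES = {
    "GPT-3.5 in ChatGPT (~40 tok/s)": 40,
    "Groq LPU, low end (500 tok/s)": 500,
    "Groq LPU, high end (750 tok/s)": 750,
}

if __name__ == "__main__":
    for label, rate in RATES.items():
        seconds = response_time_seconds(RESPONSE_TOKENS, rate)
        print(f"{label}: {seconds:.2f} s for {RESPONSE_TOKENS} tokens")
    print(f"Speedup range: {speedup(500, 40):.1f}x to {speedup(750, 40):.1f}x")
```

Under these assumptions, the same response that takes over ten seconds at 40 tokens per second arrives in about a second or less on an LPU.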

What's Fueling Groq's Growth?

Groq has raised $640 million in funding, which will enable it to accelerate development of the next two generations of LPUs and deploy more than 100,000 additional LPUs into its cloud infrastructure.

The company has also brought on Yann LeCun, Meta's chief AI scientist, as an adviser, which is a big deal. And rather than relying on TSMC, the foundry Nvidia uses and one that has been plagued by supply chain issues, Groq is using GlobalFoundries, which has a reputation for being more reliable.

Groq's technology is designed for natural language processing, computer vision, high-performance computing, and generative AI applications. Its real-time inference is particularly useful for applications that need instant responses, such as interactive gaming, real-time analytics, and live streaming services.

How Might This Impact the Market?

Groq's emergence is shaking up the AI chip market, and it could reduce demand for AI inference solutions from Nvidia, AMD, and Intel. That could have significant financial implications for those companies, which rely heavily on the AI chip market.

Groq's LPU is priced at $20,000 per unit, significantly lower than Nvidia's Blackwell chips, which are priced between $30,000 and $40,000. This cost efficiency, combined with superior performance in specific tasks, makes Groq an attractive option for businesses looking to optimize their AI infrastructure.
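The size of that price gap is easy to work out from the quoted figures. The sketch below computes the discount relative to each end of the Blackwell range; the function name is hypothetical, and the calculation compares per-unit list prices only.

```python
def percent_cheaper(price: float, reference_price: float) -> float:
    """Discount of price relative to reference_price, as a percentage."""
    if reference_price <= 0:
        raise ValueError("reference_price must be positive")
    return (reference_price - price) / reference_price * 100


# Per-unit prices quoted in the article, in US dollars.
GROQ_PRICE = 20_000
BLACKWELL_LOW = 30_000
BLACKWELL_HIGH = 40_000

if __name__ == "__main__":
    low = percent_cheaper(GROQ_PRICE, BLACKWELL_LOW)
    high = percent_cheaper(GROQ_PRICE, BLACKWELL_HIGH)
    print(f"Groq unit price is {low:.0f}% to {high:.0f}% lower than Blackwell")
```

Unit price is only part of the picture. Because on-chip SRAM has lower capacity than HBM, a deployment may need more units to serve a given model, so total cost would depend on how many chips a workload requires.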

What's the Outlook for Groq?

Groq is expected to capture a significant share of the AI chip market, which is projected to reach $1.1 trillion by 2027. The company plans to expand its product offerings and enter new markets, positioning itself as a formidable competitor in the AI landscape.

The future is bright for Groq, and it's going to be interesting to see how the company will continue to evolve and grow in the coming years.

References

- Freund, K. (2024, August 9). Can Groq Really Take On Nvidia? Forbes. https://www.forbes.com/sites/karlfreund/2024/08/09/can-groq-really-take-on-nvidia/

- AI chip startup Groq gets $640 million in funding. Yahoo Finance. https://finance.yahoo.com/news/ai-chip-startup-groq-gets-110015470.html

- AI chip race: Groq CEO takes on Nvidia. VentureBeat. https://venturebeat.com/ai/ai-chip-race-groq-ceo-takes-on-nvidia-claims-most-startups-will-use-speedy-lpus-by-end-of-2024/