From AI Search to Fact-Based Decisions: Our Strategy Revealed

Navigating the maze of AI search tools like Google AI Search, GPT Search, and Perplexity can be daunting when seeking reliable information for business decisions. This post outlines a strategic approach: first, brainstorm potential options using AI, then employ rigorous fact-checking to verify so...

If you have been following the news, you may have read about Google AI Search and more recently GPT Search. Of course, there is also Perplexity and last but not least, plain old Google.

While these products may have different product strategies and underlying search technologies (AI models, indexing, etc.), they all more or less fulfil one goal for the user: finding the needle in the haystack.

A difficult task - not only because of the amount of information that is literally exploding every second, but also because of the difficulty of selecting reliable and relevant information.

Although this may sound like a subjective thing for some search terms (e.g. what is the best restaurant in town), in a business setting it is not.

If you ask one of these AI search tools for, say, the best cloud providers according to a given set of criteria, the result will rarely be the same twice, and even if it is, it may lack precision (how many of the returned results are actually relevant and correct) or recall (how complete the information is).
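To make the two terms concrete, here is a minimal sketch of how precision and recall could be computed for a single AI search answer. The provider names and the "relevant" list are made-up placeholders; in practice you only know the relevant set after you have done the deeper research described later in this post.

```python
def precision_recall(returned, relevant):
    """Return (precision, recall) for one search result.

    precision: share of returned items that are actually relevant
    recall:    share of relevant items that were actually returned
    """
    returned_set = set(returned)
    relevant_set = set(relevant)
    hits = returned_set & relevant_set
    precision = len(hits) / len(returned_set) if returned_set else 0.0
    recall = len(hits) / len(relevant_set) if relevant_set else 0.0
    return precision, recall


# Hypothetical data: what the tool returned vs. what a careful review found.
returned = ["Provider A", "Provider B", "Provider C", "Provider X"]
relevant = ["Provider A", "Provider B", "Provider C", "Provider D", "Provider E"]

precision, recall = precision_recall(returned, relevant)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

In this toy case one returned provider is not a good fit (precision 0.75) and two good candidates are missing (recall 0.60), which is exactly the niche-player problem described next.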

Say you are looking for the best CRM that does A, B, and C. It may suggest Salesforce, SAP, etc., but there may be other niche players that are much better for your very specific use case. And again, Perplexity, for example, might mention them (because it uses the Internet like others), but it might also just skip that information.

Incorrect or missing information is a poison for a business: it may not kill you immediately, but it will soon.

In my day-to-day work as an architect, I have to make decisions on behalf of my clients, evaluating and recommending hundreds of products across the technology zoo, from hosting providers to GPU providers for machine learning. And if I make the wrong decision early on, it can be expensive to change it after the product is deployed.

So the quality of information is just as important as the speed and cost of getting it (deep research can be expensive), and all three need to be well balanced.

In this blog post, I want to share my strategy of using AI to brainstorm and gather ideas for research, but then using non-search AI and other tools to increase reliability and have a solid basis for a decision for or against products and vendors.

So here's how we do it in a nutshell - let's say we're trying to find the best hosting provider for our Next.js application.

Brainstorming with AI search

The first step is to brainstorm with Perplexity. This will give us some popular hosting providers. In this example, the list is actually pretty good, although I miss a few, like Cloudflare. Again, maybe Vercel is best for our case, maybe not. It depends on the factors that really matter, like response time, cost, geographic distribution, integration with other products, etc.

In GPT Search we get similar results to Perplexity if we choose the smart model.

Good for a start, but not enough.

In this case, the results actually look correct and even familiar if you already have a clue. But if you look more closely at where the information actually comes from, especially the description, the sources are not necessarily reliable.

Don't get me wrong, Dev.to is great, but there are several problems with using it as a source of current and correct information: the information may simply be wrong, it may be biased, or even worse, it may be outdated - the vendor may have changed something, but the article has not been updated.

Sounds too bad?

It is not. If you know how to do this correctly and at what stage to use which source, you can do proper product review research.

So, as I said, the AI search is great for a start, but we need to do some work here to get to a reliable source of information for a reliable and sustainable fact-based decision.

Fact checking after the search

So here is what we can do.

1. Check the domain authority of the seller

First, check the domain authority of the seller. Here we use Moz or Ahrefs (or Semrush). There are certainly others (free and premium).

What matters is the ability to see the reach of the company and the product. New start-ups may be great, but if they haven't reached a certain scale, they may not be suitable for a company that relies heavily on the stability of enterprise products. More on this in the compliance section.

In many products you can also see other competitors in the result overview, not always complete, but it gives you an idea of what's happening in the industry you're navigating.

Anyway, if you do more proper research on the keywords and competitors, you will soon end up with a more or less complete list of competitors to consider.

2. Define the criteria that (really) matter to you

Life is about compromises, and so is business. Knowing what really matters to you and prioritizing those criteria is key to choosing the right product and vendor for your use cases.

For example, let's say we're building a new SaaS and want to launch a new product (in this example, let's keep it a Next.js AI application). If you do the research, you will find that there are dozens of parameters that can be critical in selecting the best vendors. Here are a few suggested by Claude:

This is good news and bad news. The good news is that we know what to look for, the bad news is that it's just overwhelming and we don't know where to start.

The catch here:

The search starts offline.

Defining the criteria that matter to you is key.

There is tons of material and resources on how to do this and how to do proper requirements engineering (this is not my job, I take this for granted and I can only suggest to my client what to decide, but I never take the decision away from them).

In our example, the most weight (the criteria are usually weighted according to importance) is given to speed of development, performance, security and cost.

For other startups, they may be completely different.
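As a rough illustration of what weighting criteria means in practice, here is a small sketch of a weighted scorecard. The weights, vendor names and scores (on a 1 to 5 scale) are invented for the example and are not results from our research.

```python
def weighted_score(scores, weights):
    """Weighted average of per-criterion scores, normalised by total weight."""
    total_weight = sum(weights.values())
    if total_weight == 0:
        raise ValueError("at least one criterion needs a non-zero weight")
    return sum(scores[criterion] * weight
               for criterion, weight in weights.items()) / total_weight


# Hypothetical weights: higher means more important to this particular project.
weights = {"development speed": 3, "performance": 3, "security": 2, "cost": 2}

# Hypothetical scores per vendor, 1 (poor) to 5 (excellent).
vendors = {
    "Vendor A": {"development speed": 5, "performance": 4, "security": 3, "cost": 2},
    "Vendor B": {"development speed": 3, "performance": 4, "security": 5, "cost": 4},
}

ranking = sorted(vendors,
                 key=lambda name: weighted_score(vendors[name], weights),
                 reverse=True)
for name in ranking:
    print(name, round(weighted_score(vendors[name], weights), 2))
```

Changing the weights changes the ranking, which is why the criteria and their priorities have to come from the client and not from the tool.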

Now I have the criteria, what next?

Having the criteria is the starting point for in-depth research against those criteria. To do this properly, you can start again with coarse-grained research using Claude, GPT, Perplexity, etc., and check the sources they cite.

But even better is to look for trusted and factual information about the product, such as datasheets, developer documentation or user documentation from the vendor, not necessarily from a second source.

The latter is not because of a lack of trust, but because of a lack of accountability if the information is wrong (I can't blame a developer who wrote a nice tutorial a few months ago that is now obsolete).

Depending on the product (open source, closed source, enterprise, community edition, etc.), the completeness and distribution of these documents may vary. For example, some open source products are amazing, but have weak documentation, so the source code becomes your only single source of truth. With commercial closed-source products, you can only rely on the documentation provided by the vendor.

In many cases, we rely heavily on the product documentation online if it is maintained and well documented. This involves crawling the website or downloading the repo (if it is open source) and using RAG to look for detailed, reliable answers to our questions. The latter has proven to be incredibly powerful. If you don't know how to crawl an entire website, see my previous tutorial on how to do it reliably in a few clicks.
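The retrieval half of RAG can be sketched in a few lines. This is a deliberately simplified stand-in that ranks documentation chunks by keyword overlap with the question; a real setup would typically use embeddings and then pass the retrieved chunks to a language model. The chunk texts below are made-up placeholders, not quotes from any vendor's documentation.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def top_chunks(question, chunks, k=2):
    """Return up to k chunks that share the most terms with the question."""
    question_terms = set(tokenize(question))
    scored = []
    for chunk in chunks:
        counts = Counter(tokenize(chunk))
        score = sum(counts[term] for term in question_terms)
        scored.append((score, chunk))
    # sort() is stable, so ties keep their original document order
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for score, chunk in scored[:k] if score > 0]


# Hypothetical chunks, as they might come out of a crawled docs site.
chunks = [
    "Functions have a maximum execution duration that depends on the plan.",
    "Custom domains can be added in the project settings.",
    "Environment variables are configured per deployment environment.",
]

question = "What is the maximum execution duration of functions?"
context = top_chunks(question, chunks, k=1)
print(context)
```

The point of the design is that the answer is grounded in the vendor's own text: whatever the model says afterwards can be traced back to the retrieved chunk.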

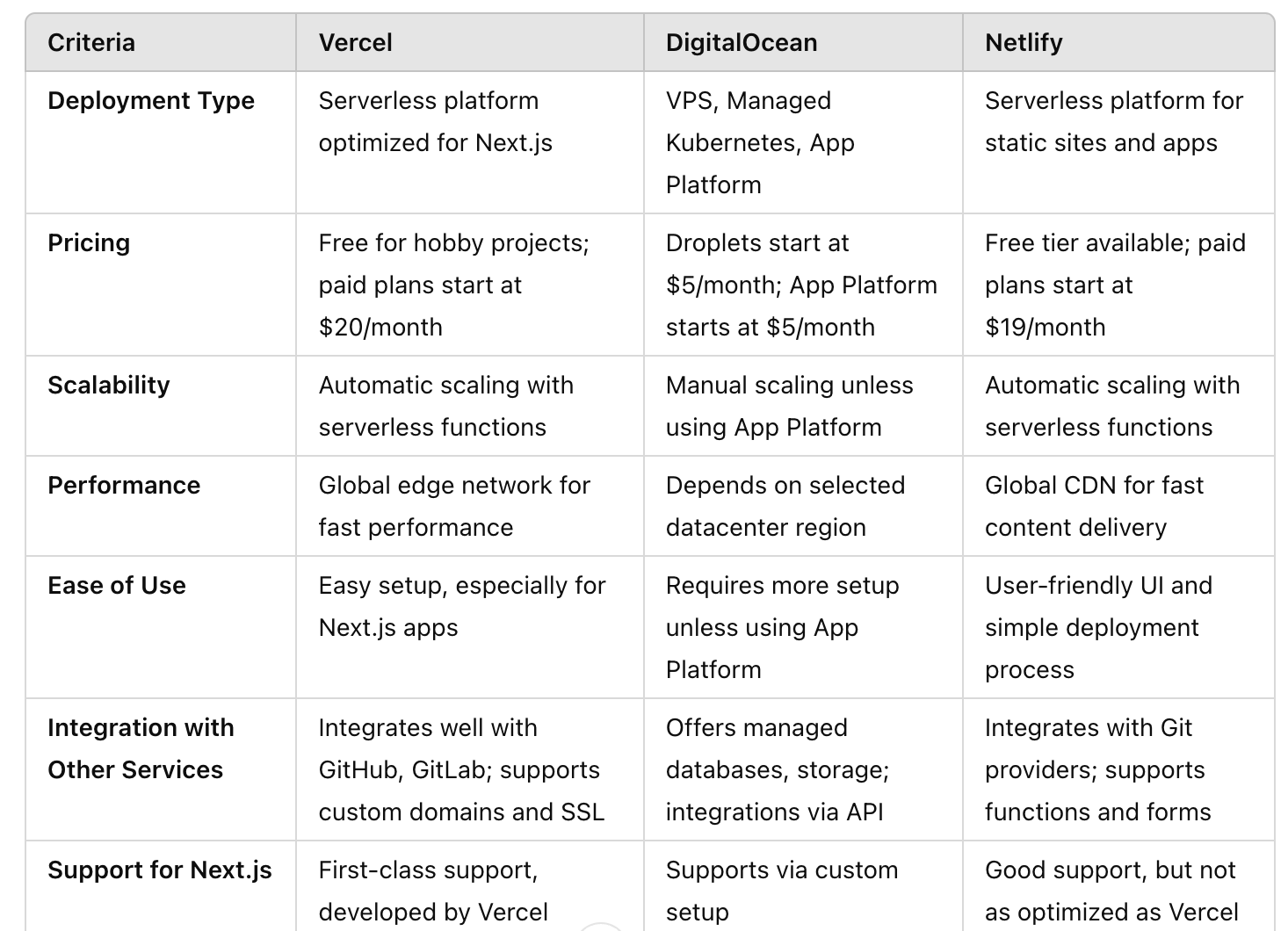

Applying this to our case: We take the documentation from the open source repos of the top 3 SaaS vendors (after checking the prices), go through the criteria and do a comparison.

We might end up with a scorecard like this:

And a few more ...

3. Benchmarks

Having a list of trendy suppliers and a set of criteria to compare is a good start, but it is not enough.

Why?

Because the vendor isn't necessarily telling you the truth about how the product really performs in the field. In our example, performance could be related to scaling, response time, how well it integrates with other products, etc.

To gain more confidence, you can

- Test the product yourself (more on this in the next step)

- Look for industry trusted benchmarks.

I deliberately highlight "industry trusted" because there is a lot of cheating going on, and, more importantly, the test cases used for benchmarking are not necessarily the same as your use cases.

Take for example the benchmarks in the LLM Space:

They are so versatile, but in many cases should not be taken as a single source of truth. For example, the LLM leaders in maths won't necessarily help you much in writing (remember the halo effect 😄).

To sum up:

Look for good benchmarks for the criteria that matter to you.

4. What users say (The real review)

Reviews are the holy grail of almost every transaction we make on the internet. Those businesses that manage to get a positive review (fake or real) get the user's attention and make the sale (at least the first purchase).

It's not much different in the business world. People talk about everything online, not just the best lipstick or note-taking app, but also enterprise products and every piece of technology you can think of. And usually the more popular the product, the more people talk about it, good and bad. That makes it easier to get some feedback, or at least a glimpse of areas you might want to double-check. Again, don't trust any one source, but opening your eyes can be incredibly helpful.

Example:

Say you want to deploy an application that does fancy stuff with AI in a long-running operation and you are looking for a hosting provider. At first glance, Vercel might be the most attractive platform out there.

But have you considered the timeouts allowed?

If not, some user comments on Reddit will mention it.

Maybe the 10 seconds is fine, but if not, you may need to dig deeper (and there are solutions, but you need to be aware of them early).

How we do it: We also use AI to analyse masses of comments (not just stars in Google!!!) to understand what people are really saying about the product and use that information as a starting point for deeper research.
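As a very rough stand-in for that analysis, the sketch below tallies recurring themes across comments with plain keyword matching. It is not the AI-based analysis we actually run; the theme keywords and the comments are invented for illustration, and a language model would catch far more nuance.

```python
from collections import Counter

# Hypothetical themes and the keywords that signal them.
THEMES = {
    "timeout": ["timeout", "timed out", "time limit"],
    "pricing": ["price", "pricing", "expensive", "bill"],
    "support": ["support", "ticket"],
}


def tally_themes(comments, themes):
    """Count how many comments mention each theme at least once."""
    counts = Counter()
    for comment in comments:
        text = comment.lower()
        for theme, keywords in themes.items():
            if any(keyword in text for keyword in keywords):
                counts[theme] += 1
    return counts


# Made-up comments, standing in for scraped forum or review posts.
comments = [
    "Our function timed out after a few seconds on the free plan.",
    "Pricing got expensive once traffic grew.",
    "Hit the timeout again, had to move the job to a queue.",
    "Great developer experience overall.",
]

counts = tally_themes(comments, THEMES)
print(counts.most_common())
```

A theme that keeps showing up, like timeouts here, is not proof of a problem, but it tells you what to verify in the vendor documentation and in your own tests.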

5. Personal experience is key

Doing great research is all well and good, but at the end of the day your colleague or client is going to be using this tool or framework often and (hopefully) for a long time. So experiencing the product from a usability, integration and comfort perspective is key to convincing decision makers and key users.

Having a personal experience with a product is a whole new level compared to a few PowerPoint slides and comparison matrices.

What helps us the most here is to define a task to accomplish with a test/trial version without explaining too much about how to accomplish it, and let the user explore the product and share their experiences using everyday business scenarios. We can then take that feedback and compare the products against each other.

And again, I am not the one who defines these scenarios, that is the job of the product owner or the requirements engineer, what matters is to have a clear vision of what the product should do and how it should help the business.

6. Compliance

I admit this is not my best subject, especially for large companies with a lot of bureaucracy, especially in Europe, but it is a necessary evil.

What I mean by compliance are all the non-functional regulatory requirements, such as the financial solidity, size, legal entity and reputation of the business. Many of them relate more to the vendor (who probably has multiple products) than to the specific product you want to buy.

There is a lot of information to consider here.

Take for example security aspects such as certification, etc. Some companies don't just rely on (static) information, but actually do technical due diligence. For open source products this becomes very handy because you can actually look at the source code (even if you have to pay licence fees).

There are also tools that can make this process very technical and reliable, based on numbers and not just (sometimes outdated or misleading) documents:

https://github.com/ossf/scorecard
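To show how such numbers could feed into a review, here is a small sketch that reads a Scorecard-style JSON report and lists the checks that score below a threshold. It assumes the report has a top-level `checks` list whose entries carry `name` and `score` fields, and it treats negative scores as inconclusive; verify both assumptions against the tool's current output format. The sample report is made up and is not a real result for any repository.

```python
import json


def weak_checks(report_json, threshold=5):
    """Return (name, score) pairs for checks scoring below the threshold.

    Negative scores are skipped, on the assumption that they mean
    the check was inconclusive rather than failed.
    """
    report = json.loads(report_json)
    return sorted(
        (check["name"], check["score"])
        for check in report.get("checks", [])
        if 0 <= check["score"] < threshold
    )


# Hypothetical report, shaped like the assumed JSON output.
sample = json.dumps({
    "score": 6.1,
    "checks": [
        {"name": "Maintained", "score": 10},
        {"name": "Branch-Protection", "score": 3},
        {"name": "Fuzzing", "score": 0},
        {"name": "CII-Best-Practices", "score": -1},
    ],
})

flagged = weak_checks(sample)
print(flagged)
```

A low score on one check is a prompt for a closer look, not an automatic rejection; how much weight it gets depends on your compliance requirements.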

Depending on compliance requirements, you may spend a lot of time fact checking a lot of these things before you actually launch the product. For startups that are still in the early days, these aspects don't (and shouldn't) get much attention, except for proper security of their products. In this case, speed and cost dominate most business decisions.

Conclusion

With this framework, we use AI Search as a starting point to explore viable options for business decisions. With a comprehensive framework, we then do 360-degree research on the product, taking into account information from the vendor, the public internet, benchmarks, the customers and the key users who will ultimately use the product, to get a complete picture of viable products that solve our problem.

With proper, deeper research after the search, also using AI, we can fact-check and, with each step we take, gain more confidence and make decisions based on facts, not gut feeling.

We have developed a framework to streamline this process. If you are interested, just PM me.