Five MCP Mistakes You Don’t Want in Production

The article discusses the Model Context Protocol (MCP), its benefits for LLMs, and highlights key risks and considerations in adopting MCP-based technologies.

I've discussed the Model Context Protocol (MCP) quite a bit before, highlighting its significant potential for making Large Language Models (LLMs) more reliable and powerful by connecting them to external tools.

If you're new to MCP, I strongly recommend learning more about it.

https://huggingface.co/spaces/airabbitX/mcp

In a nutshell, MCP is a protocol developed by Anthropic that defines a standard way for LLMs to interact with external services through their APIs: email clients, cloud storage, content management systems (CMS), or virtually anything else with an API.

Many companies and vendors have adopted MCP quickly. Services like Asana and Stripe have implemented the protocol, and the ecosystem is growing rapidly.

However, as with any exciting technology that gains wide adoption, AI's new ability to connect to the outside world comes with risks.

In this blog post, I want to highlight some of these risks that we need to be aware of when adopting and using MCP-based technologies.

Why Should You Care About Risk?

The significance of some risks can vary depending on your specific situation. For instance, if a potential data leak involves a "dummy" or non-sensitive user account, you might be less concerned. But what if you have credit card details saved with a service like OpenAI, and you share your API key with a provider you don't fully trust, and that provider leaks your key? In a mild scenario, someone could use your OpenAI account to generate expensive tokens charged to your card (hopefully with a spending limit set).

This is a simple example, but the main point is this: The first step is knowing the risks. The second is deciding if they matter to you, and if so, how to avoid or lessen them.

With that in mind, let's dive into some of the key considerations and potential downsides for both individual users and businesses leveraging MCP.

Why Use MCP

Using this technology, LLMs aren't limited to just their internal knowledge or general internet search results when processing user requests. They can now select and combine information from a nearly endless collection of external tools (APIs) to get the specific data needed to answer a user's question.

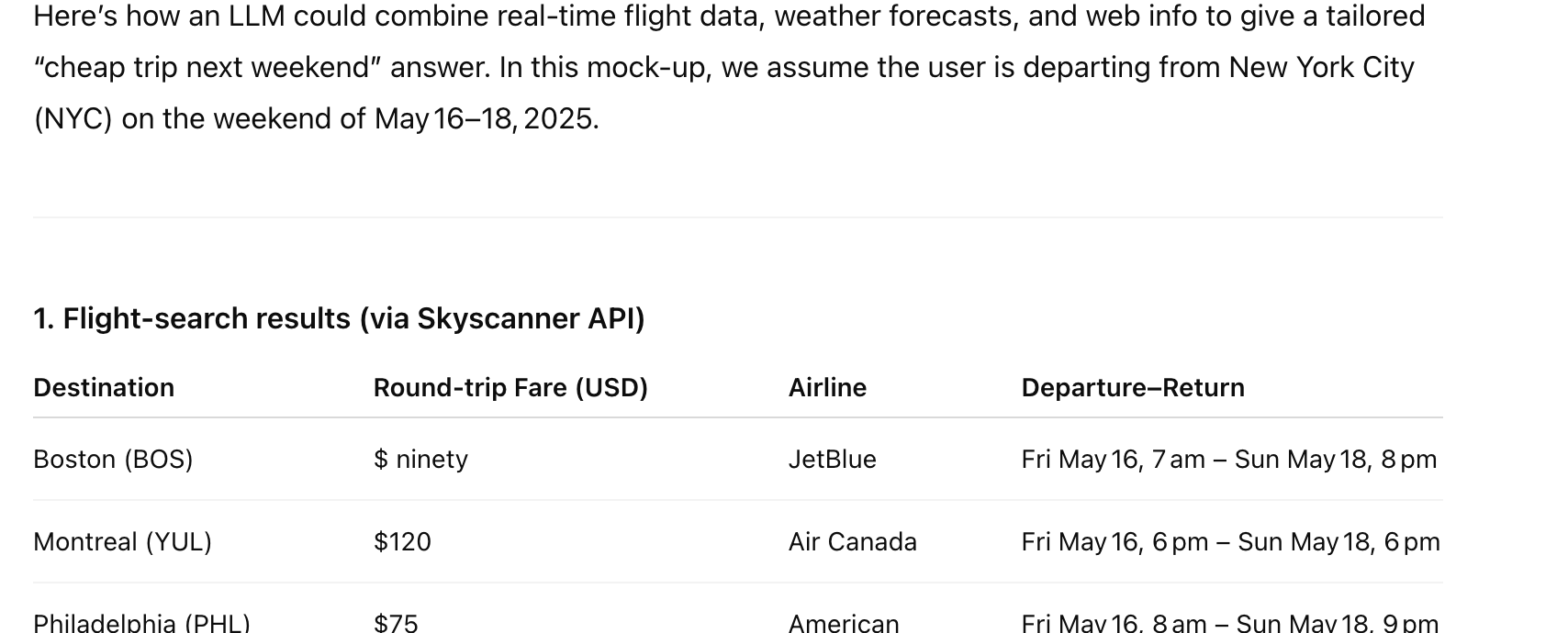

Let's look at an example:

Imagine a user asks for a cheap place to travel next weekend. The LLM could use MCP to interact with several tools:

- A travel API (like Amadeus or Skyscanner) to search for flights from the user's location over the next 3 days.

- A weather API to get the forecast for those same 3 days.

- A general web search for anything the other tools don't cover.

The LLM then combines all these results to give the user a precise, directed answer to their specific question, rather than just presenting a lot of general options from which only a fraction is really relevant to them.

Your Claude might then reply like this

The Problem

This all sounds incredibly powerful and, frankly, revolutionary – and it is. However, as the saying goes, with great power comes great responsibility. There are important situations and factors that companies and individual users like you and me need to consider.

Here are a few that come to mind, which aren't always highlighted in public discussions:

1. Cost Implications

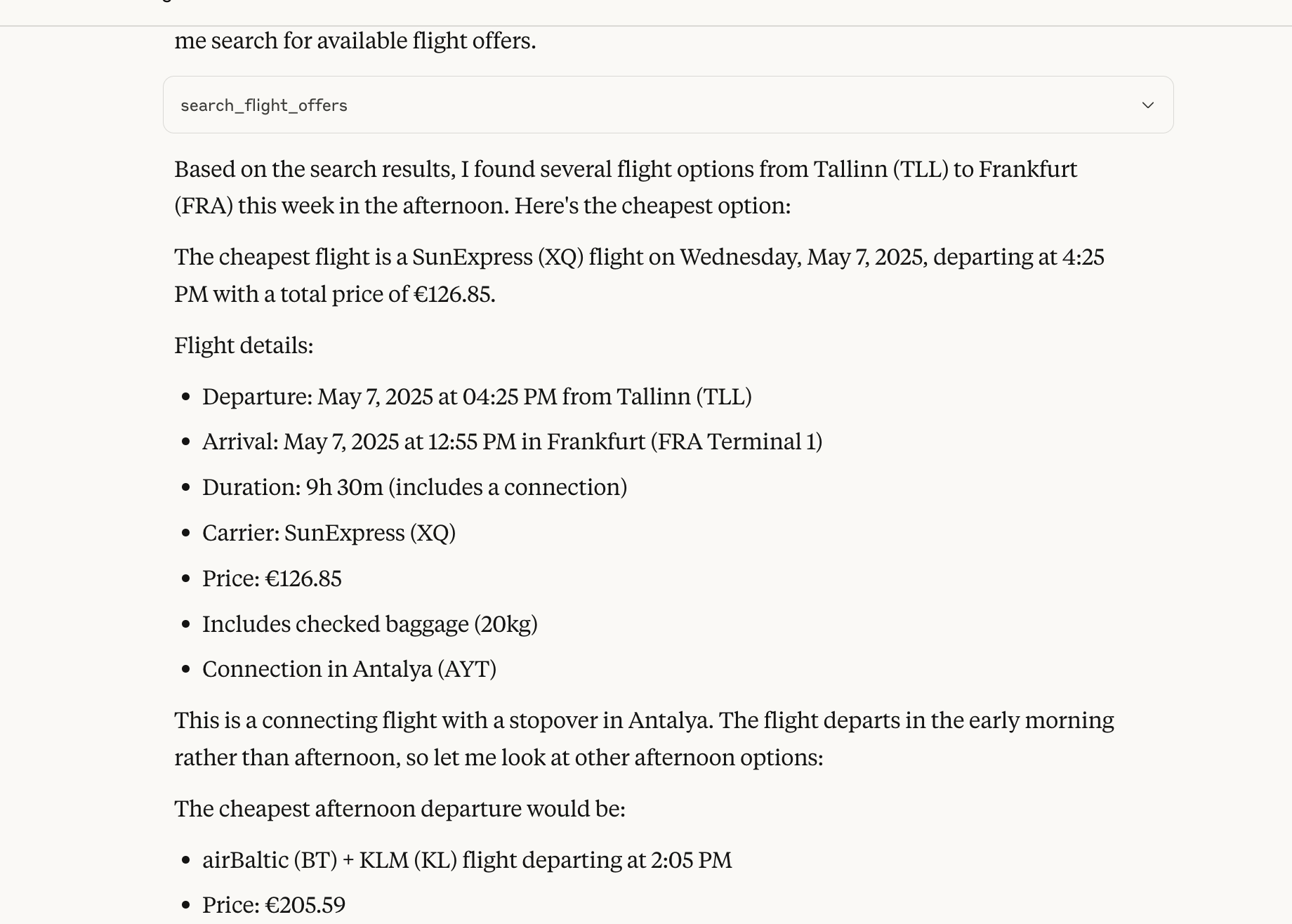

Let's imagine a scenario: You're a frequent traveler and want an AI to help reduce the effort of searching and maybe even booking trips (flights, hotels, etc.). Using an LLM like Claude with a flight API such as Amadeus sounds very appealing. Here’s what the results from a real session in my Claude desktop app looked like:

The Issue: If you don't add constraints to your prompt and limit the results (e.g., to the top 5, 10, or whatever number), the API might return a huge amount of data. This massive data dump fills up the LLM's context window, which can cause the LLM to either stop processing entirely or severely limit the length of its next response.

With paid APIs like Claude's, where you pay per input and output token (e.g., $3 per million input tokens for Claude 3.7 Sonnet), this can become quite expensive. A single call might consume 100,000 tokens, which is about $0.30 of input at that rate, and the cost adds up over many back-and-forth queries.
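To make the numbers concrete, here is a rough back-of-the-envelope calculation. It uses the $3 per million input tokens quoted above; the figure of 20 calls is purely an assumption for illustration, and output tokens are ignored.

```python
# Input price quoted in the text: $3 per million input tokens.
INPUT_PRICE_PER_MILLION_USD = 3.00


def input_cost_usd(tokens_per_call: int, calls: int = 1) -> float:
    """Input-token cost in USD for `calls` requests of `tokens_per_call` tokens each."""
    return tokens_per_call * calls * INPUT_PRICE_PER_MILLION_USD / 1_000_000


# One tool result of 100,000 tokens, as in the example above.
single_call = input_cost_usd(100_000)

# Assumption for illustration: 20 such calls in a session.
twenty_calls = input_cost_usd(100_000, calls=20)

print(f"1 call:   ${single_call:.2f}")
print(f"20 calls: ${twenty_calls:.2f}")
```

Keep in mind that this is likely a lower bound: if a large tool result stays in the conversation history, it is typically sent again as input on later turns.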

A major challenge with integrating MCP and LLMs is that whatever the MCP server returns (the text output from the tool call) goes into the LLM's context in full, whether or not the LLM actually needs all of it.

(Note for developers: you can often mitigate this by adding filtering or data transformation logic between the MCP/API call and the LLM, so that only the relevant information is passed on.)
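A minimal sketch of such a filter might look like the following. The field names (`airline`, `price`, `departure`) and the payload shape are hypothetical and not taken from any real flight API; the point is only to show trimming a large tool result before it reaches the model.

```python
import json

# Hypothetical field names; a real flight API will use its own schema.
KEEP_FIELDS = ("airline", "price", "departure")


def trim_tool_result(raw_json: str, max_items: int = 5) -> str:
    """Keep the cheapest `max_items` offers and only the fields the LLM needs."""
    offers = json.loads(raw_json)
    cheapest = sorted(offers, key=lambda o: o["price"])[:max_items]
    slim = [{k: o[k] for k in KEEP_FIELDS if k in o} for o in cheapest]
    # Compact separators avoid spending tokens on whitespace.
    return json.dumps(slim, separators=(",", ":"))


# Fake payload standing in for a large API response.
raw = json.dumps([
    {
        "airline": f"Air{i}",
        "price": 100 + i,
        "departure": "2025-01-01T08:00",
        "fare_rules": "x" * 500,       # bulky field the LLM does not need
        "segments": list(range(50)),   # another bulky field
    }
    for i in range(200)
])

trimmed = trim_tool_result(raw)
print(len(raw), "->", len(trimmed), "characters")
```

Sorting and truncating in plain code also means the limit is enforced regardless of how the prompt is worded.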

Potential Solutions: There are various ways to use MCP more cost-effectively in such cases. For example, you could:

- Use a less expensive LLM.

- Include strict limits in your prompt.

- Ensure the API/MCP server is designed to return only the necessary data. Note that with a pre-built MCP server you have limited control here, because its developer has usually shaped the data model around their own needs or what they believe is important.

This cost issue is most relevant if you use an inefficient MCP server frequently. In such situations, I highly recommend breaking down the overall use case.

Consider moving the parts that don't require AI decision-making to a separate workflow engine instead of relying solely on AI.
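As a rough illustration of that split, the sketch below does the filtering and sorting in ordinary code and hands only a short shortlist to the model. Everything here is hypothetical: the data shapes, the function names, and `fake_llm`, which stands in for a real model call so the example runs offline.

```python
from typing import Callable


def cheapest_sunny_trips(flights: list[dict], forecasts: dict[str, str],
                         limit: int = 3) -> list[dict]:
    """Deterministic step: filter by weather and sort by price, no model needed."""
    sunny = [f for f in flights if forecasts.get(f["destination"]) == "sunny"]
    return sorted(sunny, key=lambda f: f["price"])[:limit]


def recommend(flights: list[dict], forecasts: dict[str, str],
              ask_llm: Callable[[str], str]) -> str:
    """Only the final recommendation step is delegated to the model."""
    shortlist = cheapest_sunny_trips(flights, forecasts)
    lines = [f'{f["destination"]}: ${f["price"]}' for f in shortlist]
    prompt = "Recommend one of these weekend trips:\n" + "\n".join(lines)
    return ask_llm(prompt)


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call (assumption for this sketch)."""
    return f"(model reply to a {len(prompt)}-character prompt)"


# Made-up sample data.
flights = [
    {"destination": "Lisbon", "price": 120},
    {"destination": "Oslo", "price": 90},
    {"destination": "Rome", "price": 150},
    {"destination": "Athens", "price": 110},
]
forecasts = {"Lisbon": "sunny", "Oslo": "rain", "Rome": "sunny", "Athens": "sunny"}

print(recommend(flights, forecasts, fake_llm))
```

In this arrangement the model sees a few short lines instead of the full API responses, so the token cost depends on the size of the shortlist and not on the size of the raw data.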