The Power of 1 Million Tokens: Part I – Spotting Trends

The global data explosion, from just 2 zettabytes in 2010 to an expected 394 zettabytes by 2028, presents analysis challenges. AI, particularly modern large language models (LLMs) like those from OpenAI and Google, can analyze vast information quickly, revealing patterns in datasets, such as blog...

The amount of data in the world is growing at an incredible rate. According to Statista, total global data is expected to reach 394 zettabytes by 2028, a huge jump from just two zettabytes in 2010. The truth is that humans are finding it increasingly difficult to understand and analyse all this data. This is true even for relatively small amounts, such as social media posts or comments on a single online thread.

Fortunately, AI can help. The amount of data analysed by AI is likely to continue to grow. Modern AI models, such as those from OpenAI and others, can now process huge amounts of information in a single pass, often without the need for special tuning or complex multi-step instructions.

For instance, new models from OpenAI can handle up to 1 million tokens at once: https://openai.com/index/gpt-4-1/. And Gemini can process even more, up to 2 million tokens: [https://ai.google.dev/gemini-api/docs/long-context?ref=airabbit.blog).

This huge increase in context length opens up a world of possibilities beyond our imagination. The simplest is to fill the context of the LLM with huge documents and ask questions.

You might say that this was already possible in ChatGPT and other chat applications a long time ago. While this may sound the same, the quality of the output and the depth of analysis and understanding of your large documents is in a completely different league. I suggest you just try it, for example in the OpenAI playground or the Gemini AI Studio.

In this blog post, we'll go one step further. We'll explore if we can use this huge context window to let AI look for patterns, which could potentially help predict the future (or at least identify likely continuations of existing trends).

Why would you do that?

The typical way to make predictions in AI has been, and will continue to be, to use neural networks and large amounts of data to train the AI brain. While this is still the way to go in industrial and enterprise applications, small businesses and the average consumer can hardly afford it, partly due to a lack of resources and knowledge. Many tools such as DB-GPT, Orange and many others in the AI space have made such in-depth analysis more accessible, but they still require significant technical expertise.

Imagine being able to analyse massive amounts of data, find patterns and act on historical data more easily? You would be able to see patterns no human can, at least not at first glance or without heavy investments in tooling and manpower.

Sounds abstract? Let’s dive in.

A Practical Example: Analyzing Comments

Here's a simple use case: Let's say you get dozens of comments on your blog or social media account every day, hour or minute. And you want to understand what's really going on. You might want to know things like

- What are the general sentiments or feelings being expressed?

- When is your audience most active and consuming your content?

- Are there any recurring patterns you should be aware of? For example, if your audience is very active on certain days or seasons, you might want to post more then, or adapt in some other way.

It doesn't take much to answer these questions:

- The dataset containing the comments you want to analyse.

- An LLM with a large context window size, like Gemini with up to 2 million tokens, OpenAI with up to 1 million tokens, and Claude, as of today, with up to 200k tokens.

For simplicity's sake, I'll have Claude AI generate a data set with a specific pattern hidden in it (like an "Easter egg"). Then I'll feed this data to another model (in this case Gemini, for the first test) and ask it to look for recurring patterns.

Step 1: Generating the Test Data

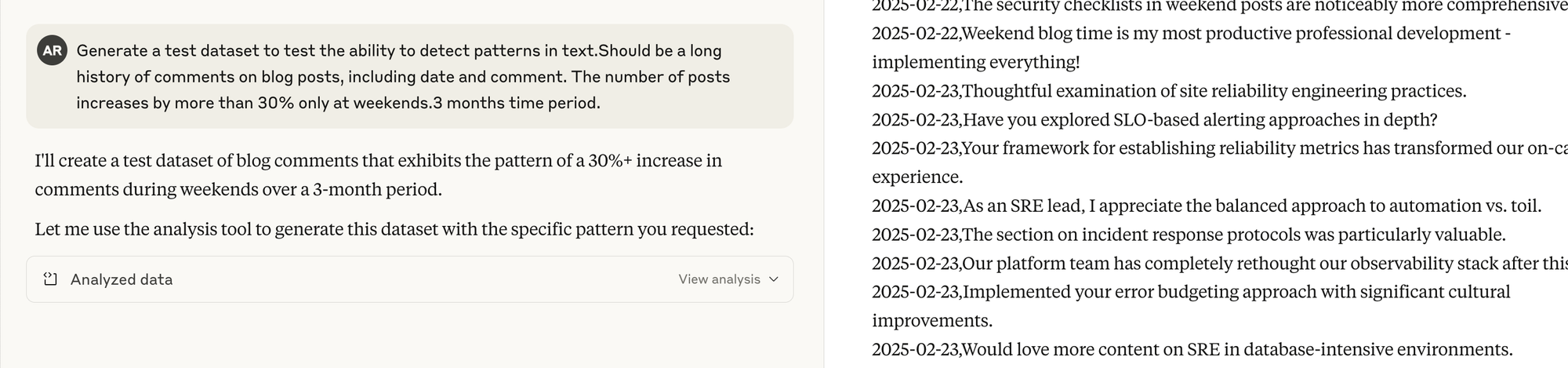

Here is the prompt used:

`Generate a test dataset to test the ability to detect patterns in text.Should be a long history of comments on blog posts, including date and comment. The number of posts increases by more than 30% only at weekends.3 months time period.

And Claude generates a whole lot of data, though the overall token limit is still a fraction of what it can produce and what the LLM in the next steps can process (up to 1 million).

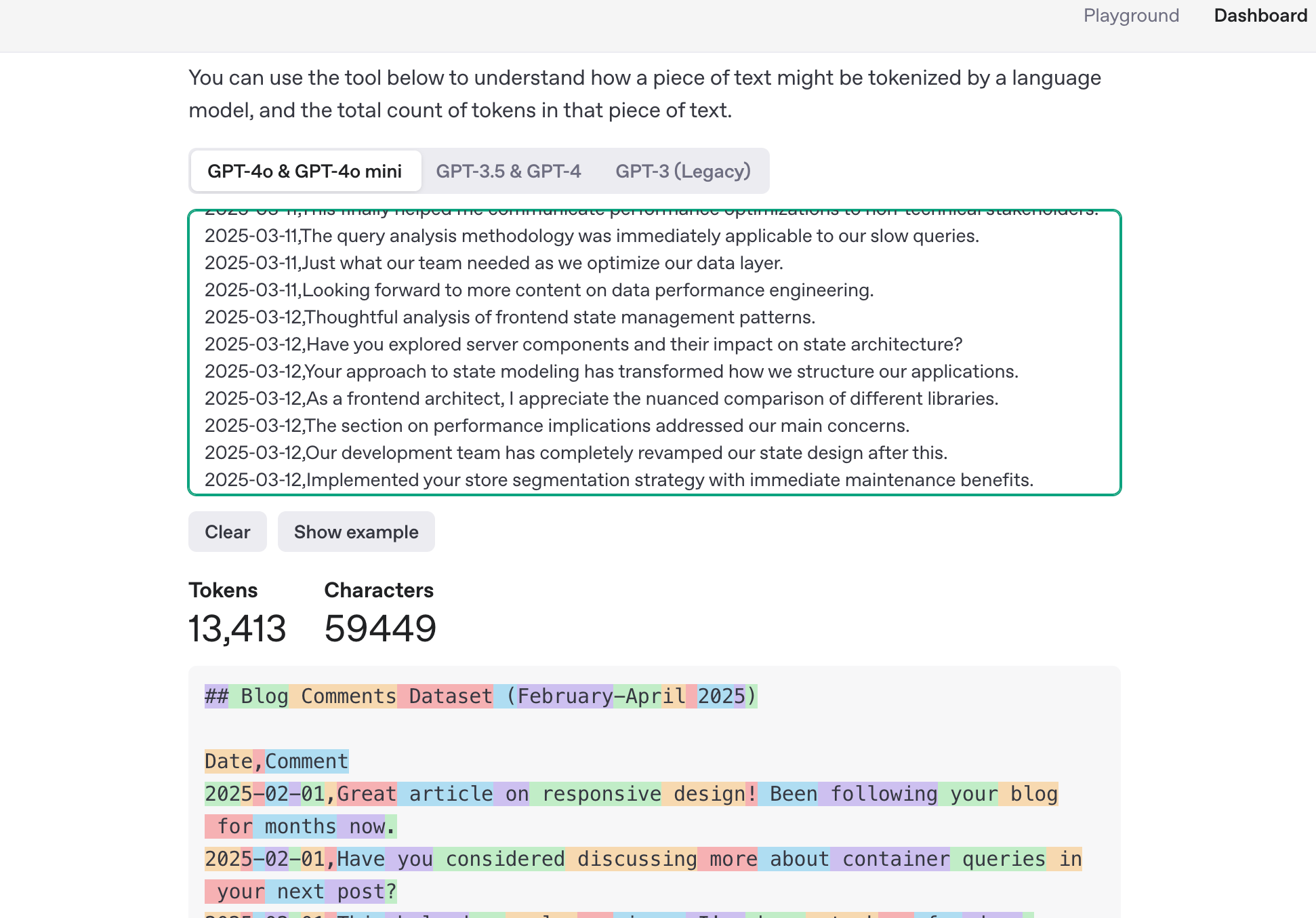

You can check the total amount of tokens in text, among other ways, using the free token counter from OpenAI:

https://platform.openai.com/tokenizer

The complete dataset used in this experiment can be found here: