Compare LLM performance at scale with Promptfoo

Explore effective alternatives to OpenAI with Promptfoo, an open-source tool for comparing large language models (LLMs). Learn how to evaluate models like Together AI's LLAMA-3 against OpenAI's GPT-4o, discovering insights on speed, cost, and performance. Make informed decisions for your applicat...

I have previously discussed why it is worth considering alternatives to OpenAI for production, real-world applications. There are dozens of such alternatives, and many of the open-source options are particularly intriguing.

By comparing these solutions, you can potentially save money while also achieving better quality and speed.

For example, in a previous blog post I talked about the noticeable speed differences between OpenAI's GPT-4o (its fastest model) and Llama 3 hosted on Together AI and Fireworks AI. The hosted Llama 3 models can deliver up to 3x the speed at a fraction of the cost.

How to compare LLMs

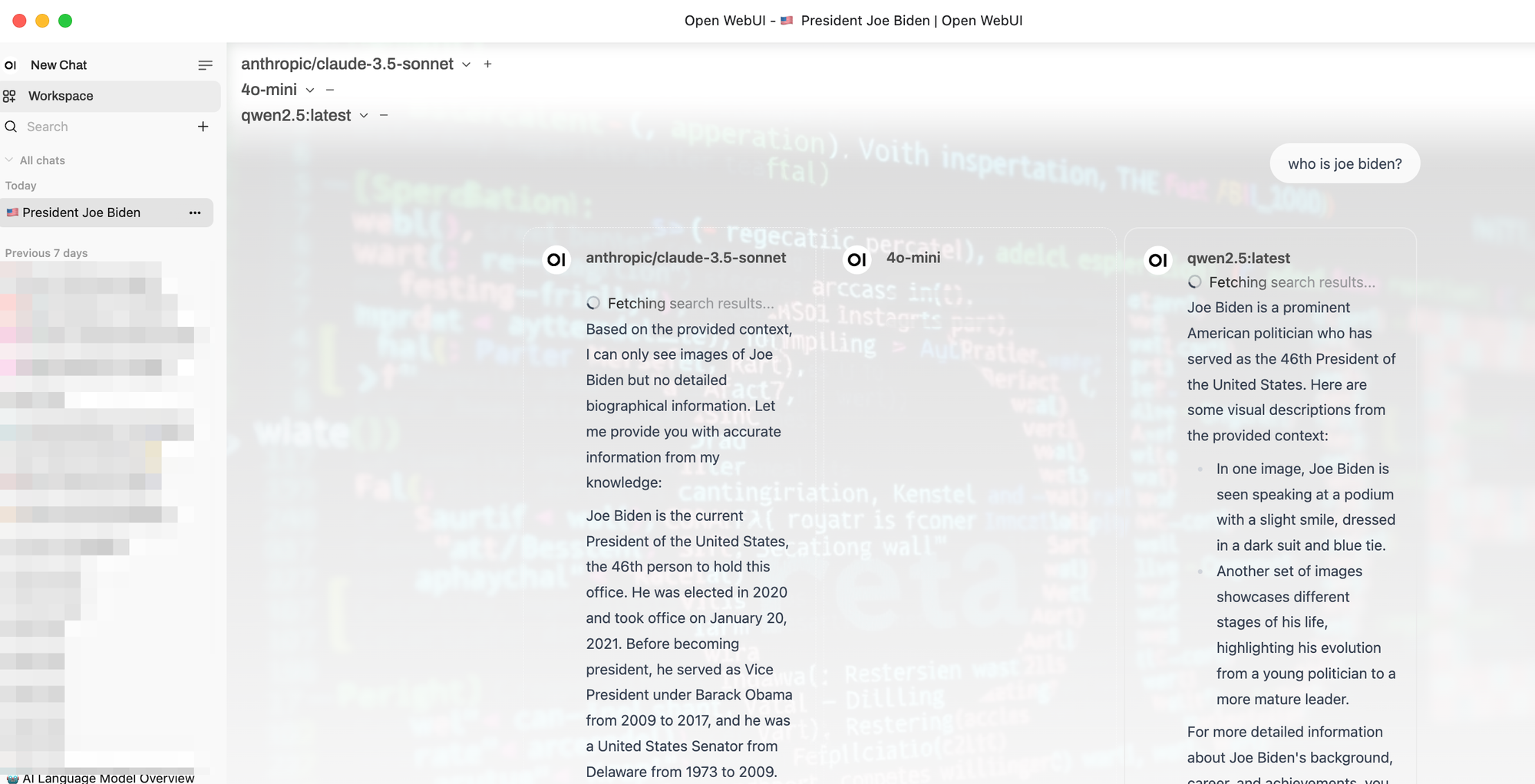

There are various ways to compare models. If you only compare occasionally, or have just a few models to test, a tool like Open WebUI is enough. There are also many commercial model comparison tools you could use.

In a business setting, where you have dozens of prompts and use cases across many models, you may want to automate this evaluation. Having a tool that supports such tasks can be very useful. One tool I frequently use is Promptfoo.

In this blog post, we will explore how to use Promptfoo to compare the performance and response quality of an alternative to OpenAI's models, in this case a Llama-based model from Together AI. Of course, you can adapt this approach to any other model or scenario you like.

Let’s get started.

---

1. Introduction to Promptfoo

Promptfoo is an open-source tool designed to help evaluate and compare large language models (LLMs). It enables developers to systematically test prompts across multiple LLM providers, evaluate outputs using various assertion types, and calculate metrics like accuracy, safety, and performance. Promptfoo is particularly helpful for those building business applications who need a straightforward, flexible, and extensible API for LLM evaluation.

2. Overview of Together AI

Together AI is a platform that provides access to various open-source language models and is known for offering alternatives to OpenAI’s GPT models. It aims to deliver more cost-effective and customizable options for developers working with LLMs.

---

3. Setting up Promptfoo

To get started with Promptfoo, follow these steps:

- Ensure you have Node.js 18 or newer installed.

- Install Promptfoo globally using npm:

npm install -g promptfoo

- Verify the installation:

promptfoo --version

- Initialize Promptfoo in your project directory:

promptfoo init

This will create a promptfooconfig.yaml file in your current directory.
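
If you want to check the Node.js requirement before installing, a small preflight script along these lines may help. This is only a sketch: the function name node_major_ok is made up for illustration and is not part of Promptfoo, and the script only reads the output of node --version without installing anything.

```shell
#!/usr/bin/env bash
# Preflight sketch: check that Node.js is version 18 or newer.
# node_major_ok is a hypothetical helper, not part of Promptfoo.

# Succeeds if a version string such as "v18.17.0" has a major version >= 18.
node_major_ok() {
  local version="${1#v}"        # drop a leading "v" if present
  local major="${version%%.*}"  # keep everything before the first dot
  case "$major" in
    ''|*[!0-9]*) return 1 ;;    # reject empty or non-numeric input
  esac
  [ "$major" -ge 18 ]
}

if command -v node >/dev/null 2>&1; then
  if node_major_ok "$(node --version)"; then
    echo "Node.js $(node --version) is new enough."
    echo "Next: npm install -g promptfoo"
  else
    echo "Node.js $(node --version) is too old; version 18 or newer is required."
  fi
else
  echo "Node.js was not found on PATH; install version 18 or newer first."
fi
```

Once the check passes, continue with the install, version, and init commands listed above.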