Chatting with Your Private LLM Model Using Ollama and Open Web UI

Discover how to interact with your private LLM model hosted on Hugging Face! This guide walks you through chatting with a fine-tuned Llama3 model using Ollama and Open Web UI. Learn to download your model, create an Ollama model file, import it, and start chatting in just a few simple steps!

If you have a private LLM model (e.g. fine-tuned from Llama3 or Mistral) hosted on Hugging Face, there are a few ways you can interact with it. You can use Hugging Face Spaces, deploy it on serverless platforms like Runpod or Vast.ai, or run it locally with Ollama.

We've already gone over the first two options in previous posts. In this post, we'll look at how to chat with your private model using Ollama and Open Web UI.

Steps Overview:

- Download the model

- Create the Ollama model file and import it into Ollama

- Update Open Web UI to reflect the new model

- Start using it!

In this tutorial, we'll be chatting with a fine-tuned version of Llama3 called awesomeLLM, hosted in the Hugging Face repository airabbitX/awesomeLLM. Replace the model and repository names with your own as needed.

1. Download Your Model Locally

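To download your model locally, use the Hugging Face CLI. Because the repository is private, first authenticate with huggingface-cli login, using an access token that has read access to the repository. Then run: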

```
huggingface-cli download airabbitX/awesomeLLM
```

After downloading, the model will be saved in the Hugging Face cache, usually located at ~/.cache/huggingface/hub.
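You can also script the lookup of the exact snapshot directory instead of browsing the cache by hand. Below is a minimal Python sketch. It assumes the default cache layout: models--<org>--<name>/refs/main holds a commit hash, and snapshots/<hash>/ holds the files. It only checks the HF_HUB_CACHE and HF_HOME environment variables. huggingface_hub honors a few other settings that this sketch ignores, and the function names are hypothetical.

```python
import os
from pathlib import Path
from typing import Optional


def hf_hub_cache() -> Path:
    """Simplified lookup: $HF_HUB_CACHE, else $HF_HOME/hub, else ~/.cache/huggingface/hub."""
    if os.environ.get("HF_HUB_CACHE"):
        return Path(os.environ["HF_HUB_CACHE"])
    hf_home = os.environ.get("HF_HOME") or os.path.join(
        os.path.expanduser("~"), ".cache", "huggingface"
    )
    return Path(hf_home) / "hub"


def repo_folder(repo_id: str) -> str:
    """Cache folder for a model repo: 'airabbitX/awesomeLLM' -> 'models--airabbitX--awesomeLLM'."""
    return "models--" + repo_id.replace("/", "--")


def snapshot_path(repo_id: str, cache: Optional[Path] = None, revision: str = "main") -> Path:
    """Read refs/<revision> (a commit hash) and return the matching snapshots/<hash> directory."""
    root = (cache if cache is not None else hf_hub_cache()) / repo_folder(repo_id)
    commit = (root / "refs" / revision).read_text().strip()
    return root / "snapshots" / commit
```

For example, snapshot_path("airabbitX/awesomeLLM") returns the directory to put on the FROM line of the model file. If you already use the huggingface_hub Python package, its snapshot_download() function also returns this path. The sketch only avoids the dependency.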

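To view the downloaded models on macOS or Linux, run:

```
ls ~/.cache/huggingface/hub
```

On Windows, the default cache is under %USERPROFILE%\.cache\huggingface\hub. On any platform, the HF_HOME or HF_HUB_CACHE environment variables change the location. Your model appears as a models--airabbitX--awesomeLLM folder, and its files sit in a snapshot directory like this: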

```
~/.cache/huggingface/hub/models--airabbitX--awesomeLLM/snapshots/68d90b9b1dc38c71f08b5b82d9875539a9b8e6be/
```

2. Create the Ollama Model File

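Create a new model file (Ollama calls it a Modelfile). Its FROM line should point to the snapshot path from above; the commit hash in your path will differ. Then add the prompt template that matches your base model. The templates can be found here: https://ollama.com/hub/hub.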
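If you prefer to generate the model file rather than write it by hand, a short script can assemble it. The following is a hypothetical Python helper, not an Ollama tool. It writes a FROM line with the weights directory, followed by the Llama 3 TEMPLATE and stop parameters used in this post's example. Another base model family would need its own template and stop tokens.

```python
from pathlib import Path
from typing import Sequence

# Llama 3 prompt template, copied from the example model file in this post.
LLAMA3_TEMPLATE = (
    "{{ if .System }}<|start_header_id|>system<|end_header_id|>\n\n"
    "{{ .System }}<|eot_id|>{{ end }}{{ if .Prompt }}<|start_header_id|>user<|end_header_id|>\n\n"
    "{{ .Prompt }}<|eot_id|>{{ end }}<|start_header_id|>assistant<|end_header_id|>\n\n"
    "{{ .Response }}<|eot_id|>"
)
LLAMA3_STOPS = ("<|start_header_id|>", "<|end_header_id|>", "<|eot_id|>", "<|reserved_special_token")


def build_modelfile(weights_dir: str, template: str, stops: Sequence[str]) -> str:
    """Assemble the FROM, TEMPLATE and PARAMETER stop lines into model file text."""
    lines = [f"FROM {weights_dir}", "", f'TEMPLATE """{template}"""', ""]
    lines += [f'PARAMETER stop "{stop}"' for stop in stops]
    return "\n".join(lines) + "\n"


def write_modelfile(path: str, weights_dir: str,
                    template: str = LLAMA3_TEMPLATE,
                    stops: Sequence[str] = LLAMA3_STOPS) -> Path:
    """Write the model file to disk (hypothetical helper) and return its path."""
    out = Path(path)
    out.write_text(build_modelfile(weights_dir, template, stops))
    return out
```

Combined with the snapshot lookup from step 1, write_modelfile("Modelfile", "<your snapshot path>") would produce a file equivalent to the hand-written one.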

Here’s an example model file for a fine-tuned Llama3 model:

```
FROM /Users/airabbitx/.cache/huggingface/hub/models--airabbitX--awesomeLLM/snapshots/aa2d69d350a792e88037085a8976c1900437d3d8

TEMPLATE """{{ if .System }}<|start_header_id|>system<|end_header_id|>

{{ .System }}<|eot_id|>{{ end }}{{ if .Prompt }}<|start_header_id|>user<|end_header_id|>

{{ .Prompt }}<|eot_id|>{{ end }}<|start_header_id|>assistant<|end_header_id|>

{{ .Response }}<|eot_id|>"""

PARAMETER stop "<|start_header_id|>"
PARAMETER stop "<|end_header_id|>"
PARAMETER stop "<|eot_id|>"
PARAMETER stop "<|reserved_special_token"
```

3. Import the Model in Ollama

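Save the file (for example, as Modelfile in your working directory). Then import the model with the following command, adjusting the path to point to your model file:

```
ollama create awesomeLLM -f ./Modelfile
```

Once the import succeeds, check the model with this command: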

```
ollama show awesomeLLM
```

You’ll see output similar to this:

```
Model
  parameters          8.0B
  quantization        F16
  arch                llama
  context length      8192
  embedding length    4096

Parameters
  stop    "<|start_header_id|>"
  stop    "<|end_header_id|>"
  stop    "<|eot_id|>"
  stop    "<|reserved_special_token"
```

4. Testing the Model

You can now run the model and test it from the command line:

```
ollama run awesomeLLM
```

If the output doesn’t look right, you are probably using the wrong prompt template. Make sure the TEMPLATE and stop parameters in your model file match your base model (Llama, Mistral, etc.).
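To see why the template matters, it helps to look at the text the model actually receives. The sketch below is a simplified Python re-implementation of the Llama 3 TEMPLATE from the model file above. It is not Ollama's own Go template engine. It renders a single turn up to the point where {{ .Response }} begins, and the function name is hypothetical.

```python
def render_llama3(prompt: str, system: str = "") -> str:
    """Mirror the example TEMPLATE up to {{ .Response }}: the text the model continues from."""
    text = ""
    if system:  # {{ if .System }} ... {{ end }}
        text += f"<|start_header_id|>system<|end_header_id|>\n\n{system}<|eot_id|>"
    if prompt:  # {{ if .Prompt }} ... {{ end }}
        text += f"<|start_header_id|>user<|end_header_id|>\n\n{prompt}<|eot_id|>"
    # The assistant header is always emitted. The reply is generated after it
    # and should end with <|eot_id|>, which is one of the stop parameters.
    text += "<|start_header_id|>assistant<|end_header_id|>\n\n"
    return text
```

A model fine-tuned from Llama 3 typically expects exactly these header tokens. Mistral-style models, for example, wrap turns in [INST] ... [/INST] instead. Pairing a model with another family's template therefore hands it a prompt format it was not trained on.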

Model Storage Location

Ollama copies the model to its own directory (e.g., ~/.ollama/models/). The weights are large: about 16 GB for this 8B model in F16. Once the import has succeeded, you may want to delete the copy in the Hugging Face cache so the model doesn't take up disk space twice.
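Before deleting anything, it's worth checking how much space the Hugging Face copy actually uses. The following is a minimal Python sketch. It assumes the default cache layout and that ollama show awesomeLLM already works. The helpers dir_size_bytes and remove_hf_copy are hypothetical, and remove_hf_copy defaults to a dry run.

```python
import os
import shutil
from pathlib import Path


def dir_size_bytes(path: Path) -> int:
    """Total size of a directory tree, using lstat so symlinks are not followed.

    Where the cache uses symlinks, snapshots/ points into blobs/, so each
    weight file is counted once (via blobs/) rather than twice.
    """
    total = 0
    for dirpath, _dirnames, filenames in os.walk(path):
        for name in filenames:
            total += os.lstat(os.path.join(dirpath, name)).st_size
    return total


def remove_hf_copy(repo_dir: Path, dry_run: bool = True) -> int:
    """Report (and, unless dry_run, delete) one model's folder in the HF cache; returns bytes."""
    size = dir_size_bytes(repo_dir)
    if not dry_run:
        shutil.rmtree(repo_dir)
    return size
```

Call it first with the default dry_run=True on the models--airabbitX--awesomeLLM folder, then again with dry_run=False once you are sure. The Hugging Face CLI also offers an interactive alternative, huggingface-cli delete-cache.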

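To check where the model is stored, print the model file Ollama generated for it. The FROM line in the output points to a blob inside Ollama's models directory:

```
ollama show --modelfile awesomeLLM
```

5. Using the Model in Open Web UI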

Now that the model is imported into Ollama, you can use it in Open Web UI.

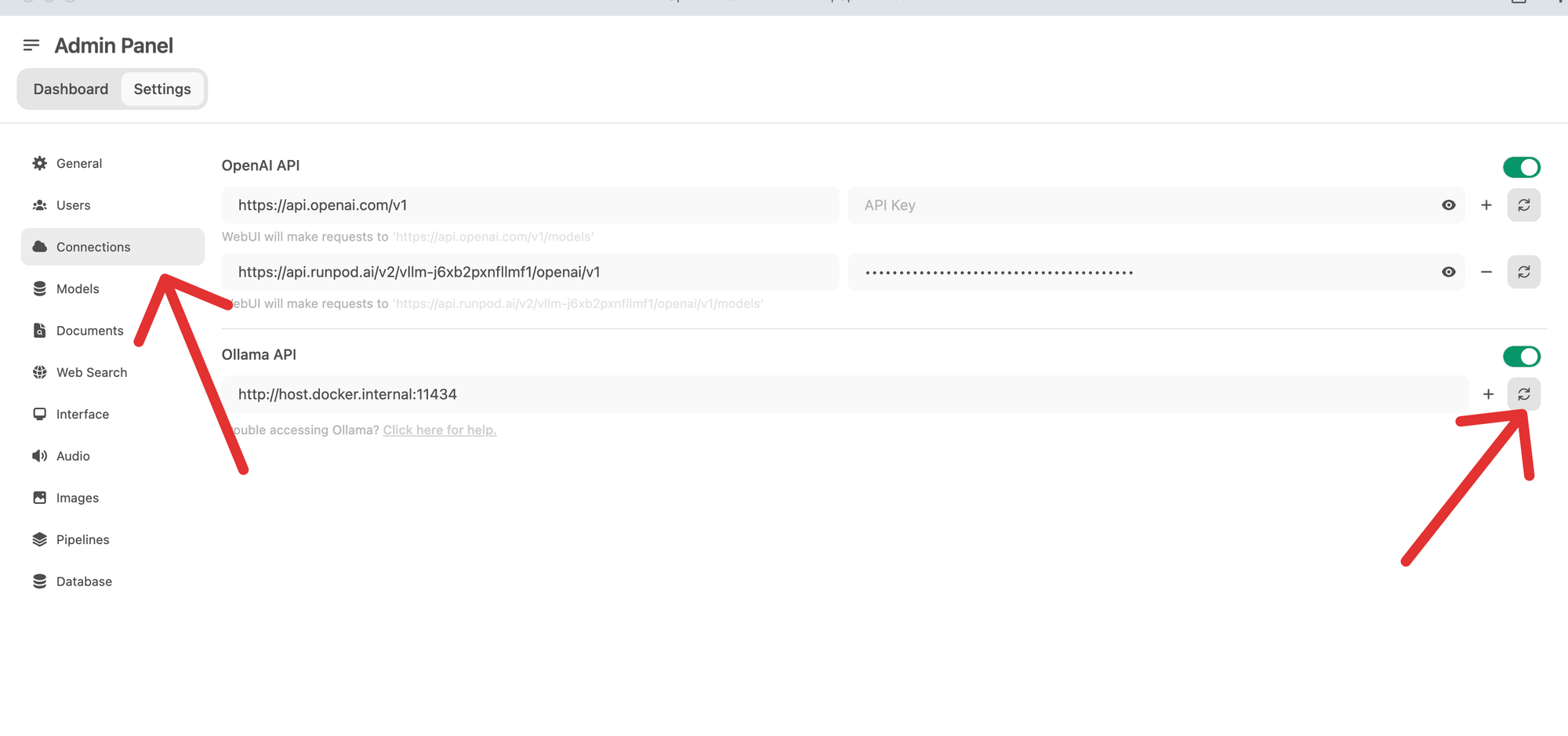

- Go to Connections -> Ollama API and click the Update button to refresh the list of available models.

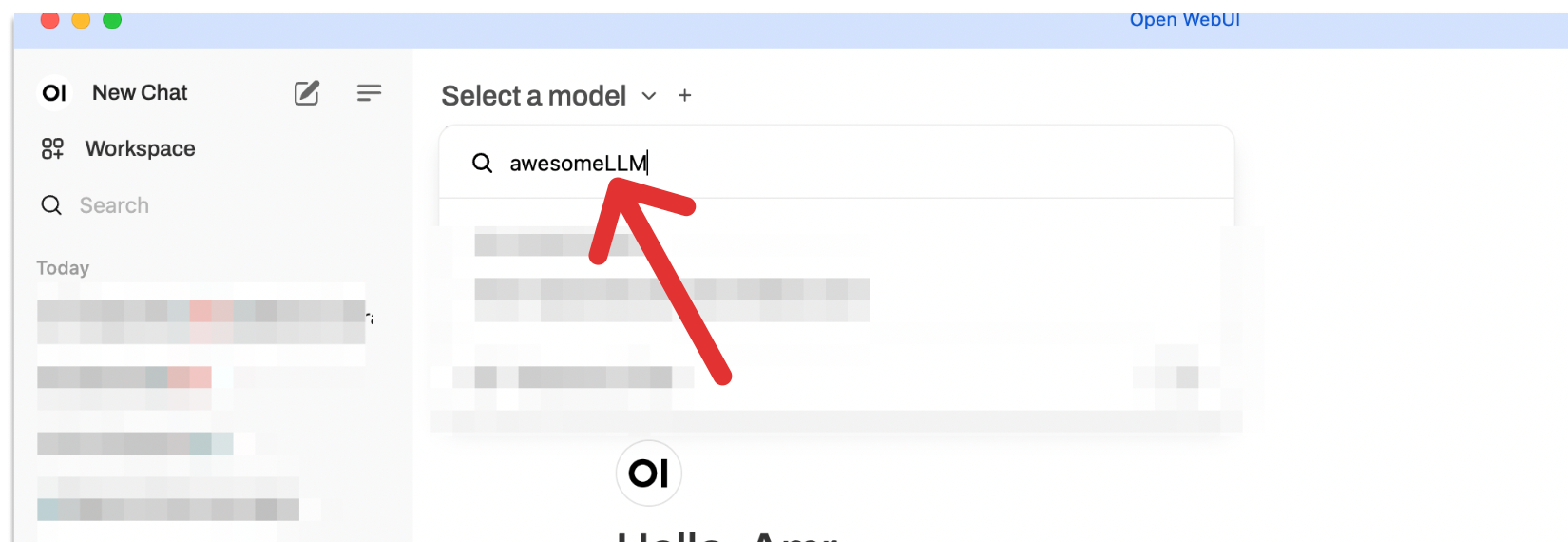

- Navigate to the main window and start a new chat by selecting the model imported into Ollama from the list.
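Open Web UI gets its model list and chat replies from Ollama's HTTP API, which listens on http://localhost:11434 by default. If the model is missing from the list, it can help to query that API directly. The endpoints /api/tags (installed models) and /api/chat (chat completion) are part of Ollama's REST API. The helper names, the timeout, and the assumption that Ollama runs at the default address are illustrative.

```python
import json
import urllib.request
from typing import List, Optional

# Ollama's default local address; change it if Open Web UI points elsewhere.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_payload: dict) -> List[str]:
    """Model names from a GET /api/tags response (e.g. 'awesomeLLM:latest')."""
    return [model["name"] for model in tags_payload.get("models", [])]


def has_model(tags_payload: dict, name: str) -> bool:
    """True if `name` is listed, accepting the implicit ':latest' tag."""
    names = model_names(tags_payload)
    return name in names or f"{name}:latest" in names


def ollama_request(path: str, body: Optional[dict] = None) -> dict:
    """GET when body is None, otherwise POST the body as JSON; returns the decoded reply."""
    data = json.dumps(body).encode("utf-8") if body is not None else None
    req = urllib.request.Request(
        OLLAMA_URL + path, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.load(resp)


def chat_once(model: str, prompt: str) -> str:
    """Send one user message to /api/chat without streaming and return the reply text."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}], "stream": False}
    return ollama_request("/api/chat", body)["message"]["content"]
```

With Ollama running, has_model(ollama_request("/api/tags"), "awesomeLLM") should return True once the import has succeeded. chat_once("awesomeLLM", "Hello!") sends the same kind of request a chat window would.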

If the model doesn't appear, ensure Ollama is running and check the list of installed models using:

```
ollama list
```

You should see something like this:

```
NAME          ID              SIZE     MODIFIED
awesomeLLM    e108d67e3840    16 GB    47 hours ago
```

Now you’re all set to chat with your private model using Ollama and Open Web UI!