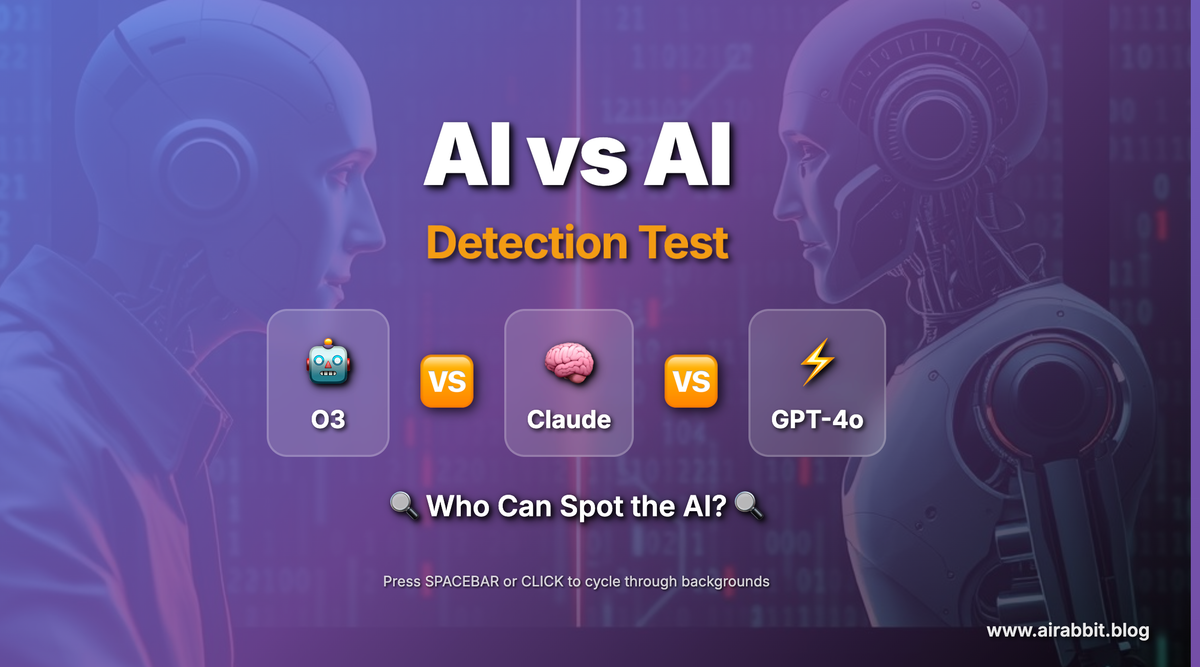

Can AI Outsmart AI? Testing AI Agents’ Ability to Identify Humans and Bots

AI agents are a hot topic right now, and many believe they will become a dominant force across industries worldwide. Salesforce, for example, predicts that a billion agents will be in use by the end of this year, a truly astonishing number. With so many AI agents emerging, one significant challenge is telling them apart from human beings, especially as established defenses like CAPTCHA come under heavy pressure from increasingly capable AI.

But what about the opposite scenario? Can an AI tell humans apart from other AIs, whether or not those AIs come from the same developer (such as OpenAI or Anthropic)?

To explore this, we ran an experiment using AutoGen in which one model was tasked with determining whether its conversation partner was an AI or a human. We repeated the experiment multiple times and with different models.

In this post, I'll share a real test we recently performed to see how well leading AI models like O3, O4, and Claude Sonnet can detect an AI within a human-like conversation.

While I'm not aware of a formal scientific term for it, our setup is somewhat similar to what's known as a 'reverse Turing test'. Unlike many studies or tools that simply ask 'was this text written by AI?', our test involved a dynamic question-and-answer exchange between two LLMs.
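
To make the setup concrete, here is a minimal sketch of what such a test loop could look like. It is not our AutoGen code: plain Python functions stand in for the model calls, and every name in it (`run_trial`, `accuracy`, the `stub_*` functions) is hypothetical and used only for illustration. In a real run, each stub would be replaced by a call to an actual model or by a human typing answers.

```python
from typing import Callable, List, Tuple

# A transcript is a list of (role, text) pairs.
Transcript = List[Tuple[str, str]]
# A speaker looks at the conversation so far and returns its next message.
Speaker = Callable[[Transcript], str]


def run_trial(ask: Speaker, respond: Speaker,
              judge: Callable[[Transcript], str], turns: int = 3) -> str:
    """Run one Q/A conversation and return the interrogator's verdict."""
    transcript: Transcript = []
    for _ in range(turns):
        transcript.append(("interrogator", ask(transcript)))
        transcript.append(("partner", respond(transcript)))
    return judge(transcript)  # expected to be "ai" or "human"


def accuracy(ask: Speaker, judge: Callable[[Transcript], str],
             partners: List[Tuple[str, Speaker]], repetitions: int = 10) -> float:
    """Repeat the trial for every (true_label, partner) pair and score the verdicts."""
    correct = 0
    total = 0
    for _ in range(repetitions):
        for true_label, respond in partners:
            if run_trial(ask, respond, judge) == true_label:
                correct += 1
            total += 1
    return correct / total


# --- Hypothetical stand-ins for real model calls (assumptions, not real APIs) ---
def stub_ask(transcript: Transcript) -> str:
    return f"Question {len(transcript) // 2 + 1}: what did you have for breakfast?"


def stub_ai(transcript: Transcript) -> str:
    return "As an AI language model, I do not eat breakfast."


def stub_human(transcript: Transcript) -> str:
    return "uh, toast i think? was running late"


def stub_judge(transcript: Transcript) -> str:
    # Toy heuristic: look for a telltale phrase in the partner's replies.
    partner_text = " ".join(text for role, text in transcript if role == "partner")
    return "ai" if "as an ai" in partner_text.lower() else "human"


if __name__ == "__main__":
    partners = [("ai", stub_ai), ("human", stub_human)]
    print(accuracy(stub_ask, stub_judge, partners, repetitions=5))
```

The point of the sketch is the structure: the interrogator asks, the partner answers, and only after several turns does the interrogator commit to a verdict, which is then compared against the partner's true identity over many repetitions.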