Beyond Simple Prompts: How MCP Unlocks Claude Sonnet Agentic Power

The article discusses using Claude Sonnet and MCP to automate the creation and refinement of book cover images, ensuring text accuracy through AI feedback loops.

I recently wrote about the impressive capability of Claude 3.7 Sonnet to generate a huge amount of content, enough to almost fill an entire book. You could use such a book for your own purposes (self-study, for example) or publish it.

In the latter case, you would probably need an appealing book cover. You could use any AI image generator for this purpose.

However, as you might know, some image generators struggle with text and might produce spelling mistakes, so you would have to iterate several times until all text is correct.

Alternatively, you can use another remarkable feature of Claude, the Model Context Protocol (MCP), to let Claude handle the review and iteration completely by itself. In this blog post I will show you how to leverage the power of Claude Sonnet and MCP to do just that.

And here is how:

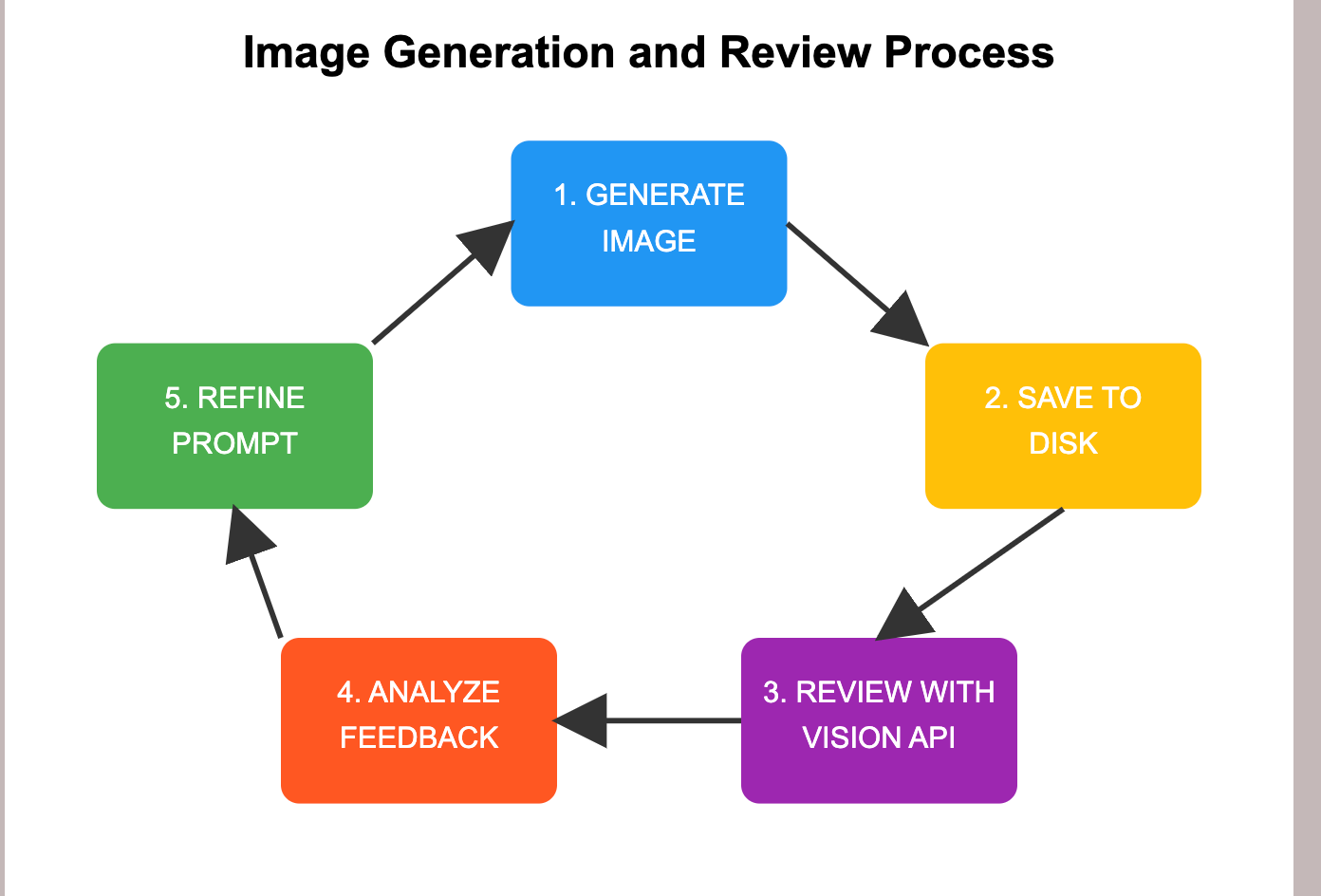

- Claude connects to the Flux Image generator on together.ai and describes the target image of the book cover in detail.

- The image is saved on disk.

- Claude then sends this image to OpenAI Vision and checks whether certain aspects match the intended idea.

- The response is fed back to refine the prompt.
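The four steps above form a simple generate, review, refine loop. In the setup described here Claude drives that loop itself through MCP tool calls, so the following Python is only a minimal sketch of the control flow, not the actual implementation. The names `Review`, `refine_until_ok`, `fake_generate`, and `fake_review` are hypothetical, and the two fakes stand in for the image generator and the vision model.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Review:
    ok: bool            # True when the reviewer found no problems
    issues: List[str]   # human-readable problems, e.g. misspelled words


def refine_until_ok(
    prompt: str,
    generate: Callable[[str], str],    # prompt -> path of the saved image
    review: Callable[[str], Review],   # image path -> review result
    max_rounds: int = 5,
) -> Tuple[str, int]:
    """Generate, review, and re-prompt until the review passes or rounds run out."""
    path = ""
    for round_no in range(1, max_rounds + 1):
        path = generate(prompt)
        result = review(path)
        if result.ok:
            return path, round_no
        # Feed the reviewer's findings back into the next prompt.
        prompt = f"{prompt}\nFix these problems: " + "; ".join(result.issues)
    return path, max_rounds


# Hypothetical stand-ins for the real image generator and vision model.
def fake_generate(prompt: str) -> str:
    # Pretend the generator only gets the text right after receiving feedback.
    return "cover_fixed.png" if "Fix these problems" in prompt else "cover_typo.png"


def fake_review(path: str) -> Review:
    if path == "cover_typo.png":
        return Review(ok=False, issues=["title is misspelled"])
    return Review(ok=True, issues=[])


final_path, rounds = refine_until_ok("Book cover with a title", fake_generate, fake_review)
print(final_path, rounds)
```

The `max_rounds` cap is a design choice of this sketch: it keeps the loop from running forever if the generator never produces correct text.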

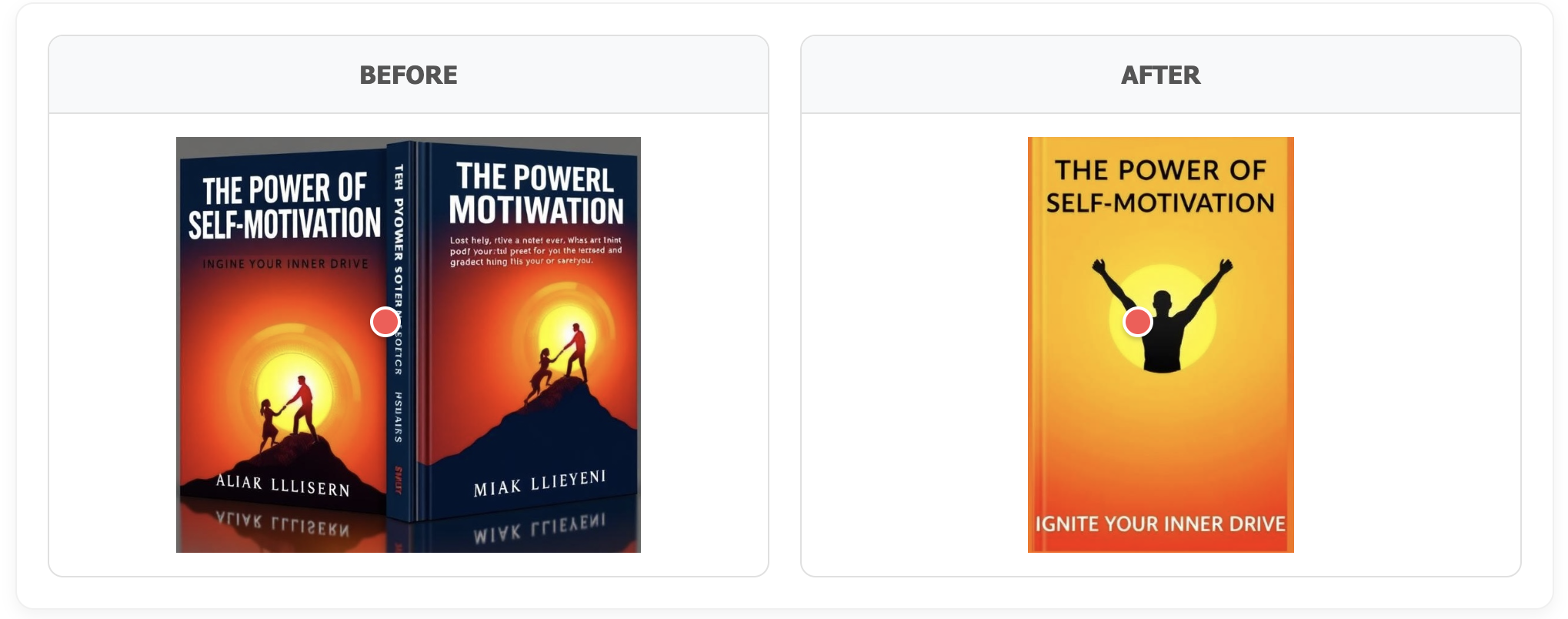

After applying this self-feedback loop a couple of times without any intervention, we get something like this:

As you can see, the initial image has many spelling mistakes, but they are corrected step by step based on feedback from the vision model (we use OpenAI here, but you can use any other model with vision capability, including Claude Sonnet itself).

After 3 iterations, the final image no longer has any spelling mistakes:

---

Prerequisites

For this to work, we need two MCP servers. If you do not know what MCP is, please check my previous blog posts.

- Together AI MCP, which can use basically any model. Here we use Flux Schnell to generate the images: https://github.com/sarthakkimtani/mcp-image-gen

- OpenAI Vision MCP to analyze the content of the image: https://github.com/pierrebrunelle/mcp-server-openai

To reuse the generated images, they first have to be saved to disk, so I had to modify the image-gen server to save the files. The saved file is then passed to OpenAI. You also have to adjust the OpenAI MCP server to use the Vision API, because it currently supports only text.
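The two modifications could look roughly like the following sketch. It is not the code from either repository: `save_image` and `build_vision_messages` are hypothetical helper names, and the sketch assumes that the image generator returns the image as a base64 string and that the image is a PNG. The message layout follows the OpenAI chat format for image input, where a local file is attached as a base64 data URL.

```python
import base64
from pathlib import Path


def save_image(b64_data: str, out_path: str) -> Path:
    """Decode a base64-encoded image and write it to disk."""
    path = Path(out_path)
    path.parent.mkdir(parents=True, exist_ok=True)  # create the target folder if needed
    path.write_bytes(base64.b64decode(b64_data))
    return path


def build_vision_messages(image_path: str, question: str) -> list:
    """Build chat messages that attach a local image as a base64 data URL."""
    encoded = base64.b64encode(Path(image_path).read_bytes()).decode("ascii")
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {
                    "type": "image_url",
                    # Assumption: the saved file is a PNG.
                    "image_url": {"url": f"data:image/png;base64,{encoded}"},
                },
            ],
        }
    ]
```

The resulting list can then be passed as the `messages` argument of a chat completion request to a vision-capable model such as gpt-4o.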

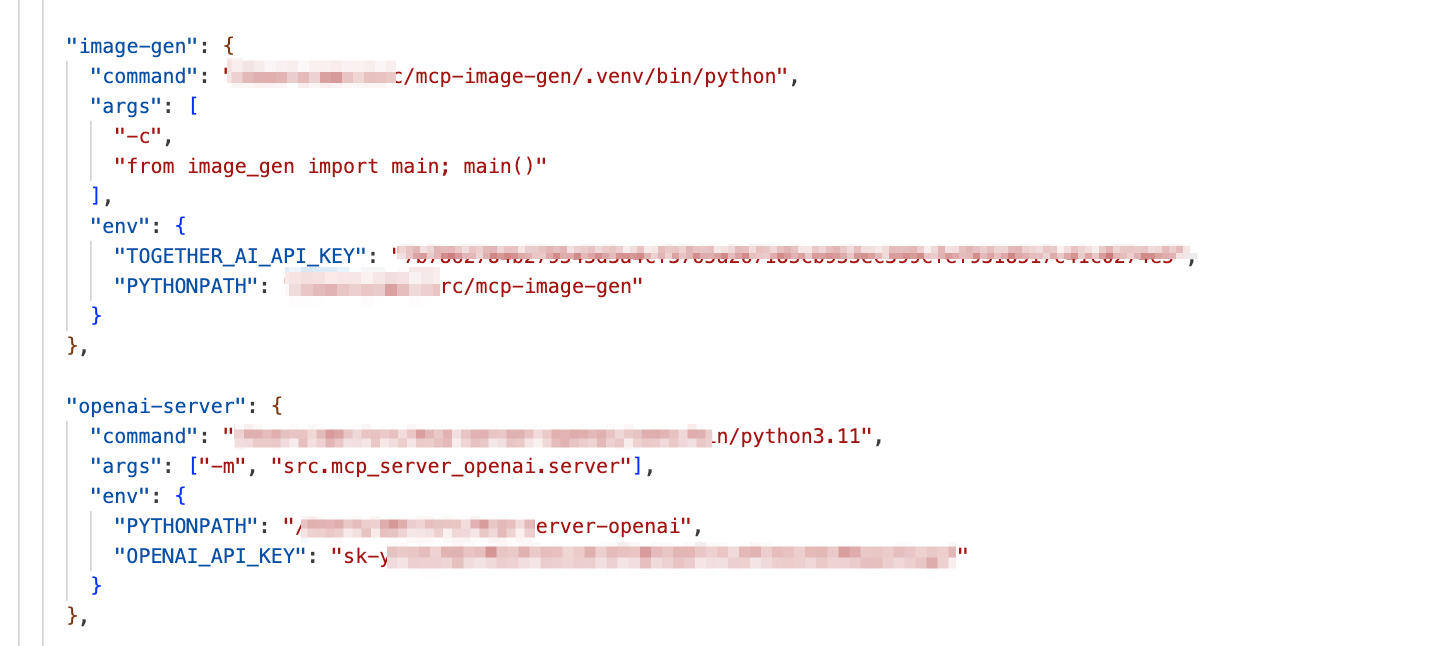

Here is an example of the MCP settings after setting up the MCP servers.
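For readers who cannot see the screenshot, here is a rough text version, written as a small Python script that prints the JSON. Only the overall shape (`mcpServers` with `command`, `args`, and `env` per server) follows the Claude Desktop configuration format. Everything else is an assumption for illustration: the server names, the directory paths, the entry-point placeholders, the use of `uv` to launch the servers, and the environment variable names may all differ in your setup, so check each repository's README.

```python
import json


def server_entry(directory: str, entry_point: str, env: dict) -> dict:
    """One MCP server entry; assumes the server is started with `uv run`."""
    return {
        "command": "uv",
        "args": ["--directory", directory, "run", entry_point],
        "env": env,
    }


# All names, paths, and variable names below are placeholders, not verified values.
config = {
    "mcpServers": {
        "image-gen": server_entry(
            "/path/to/mcp-image-gen",
            "<image-gen-entry-point>",
            {"TOGETHER_AI_API_KEY": "<your Together AI key>"},
        ),
        "openai-vision": server_entry(
            "/path/to/mcp-server-openai",
            "<openai-entry-point>",
            {"OPENAI_API_KEY": "<your OpenAI key>"},
        ),
    }
}

print(json.dumps(config, indent=2))
```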

---

Step by Step

Step 1: The Prompt

Create a cover image for this book (flux-schnell model). Use OpenAI Vision (model gpt-4o) to ensure that the image matches the intended content and that all text is readable and visible.

Editing the content yourself is considered cheating.

Title: The Power of Self-Motivation: Ignite Your Inner Drive

Genre: Self-Help / Personal Development

Synopsis: The Power of Self-Motivation is a powerful guide that reveals how anyone can cultivate the internal drive necessary to achieve their goals, overcome obstacles, and unlock their full potential. In this book, you’ll discover how self-motivation is not just a fleeting feeling, but a skill that can be nurtured and mastered to create lasting success in every area of your life.

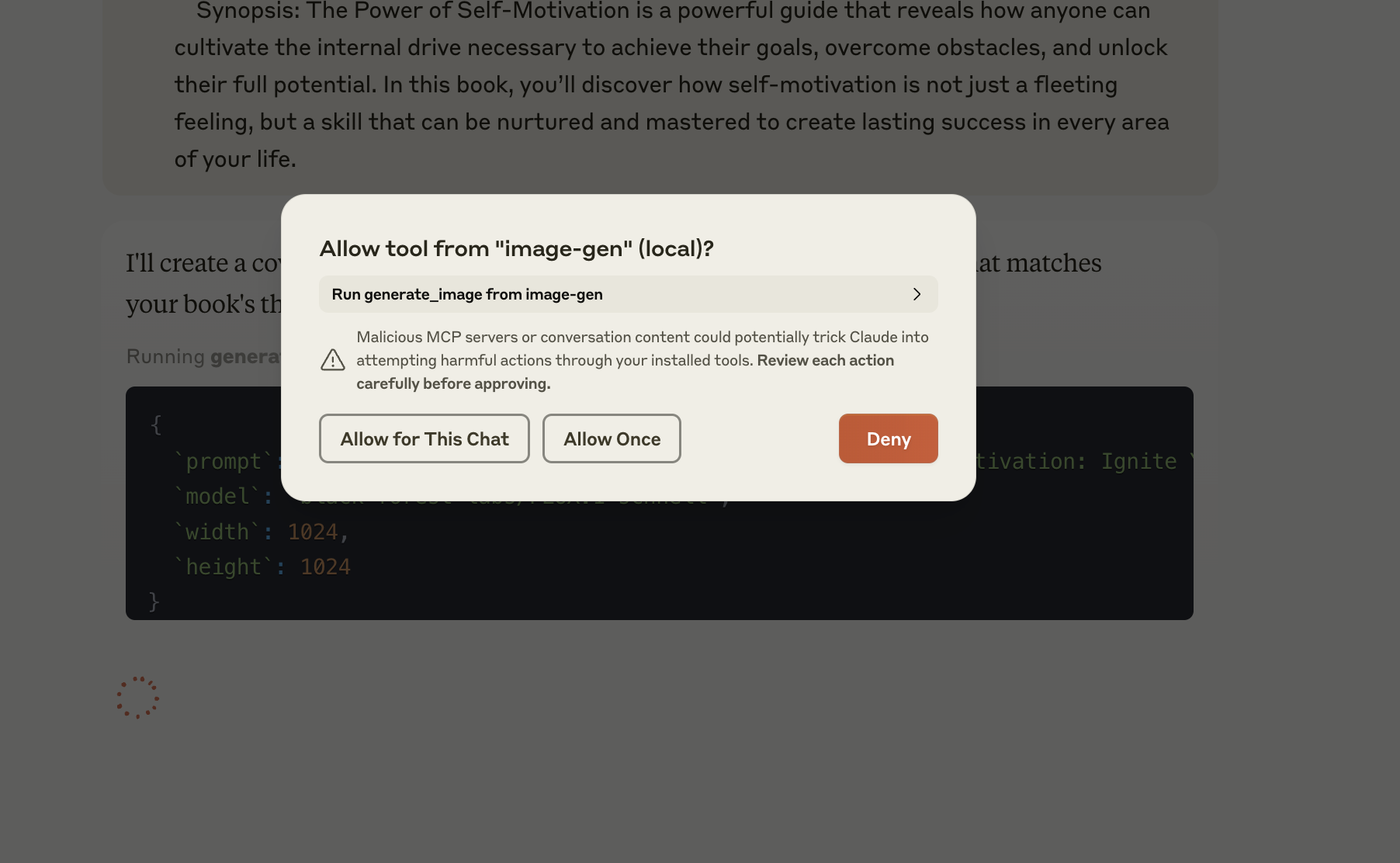

When prompted for permissions, choose to allow access for the entire chat for the image generator:

Then also allow access for OpenAI: