5 Reasons Why AI-generated Code Smells

LLMs excel at code generation, enhancing efficiency and quality. However, unguided use can lead to pitfalls like poor architecture, duplicated code, and security flaws. By avoiding common mistakes and establishing governance frameworks, developers can leverage AI effectively. This guide outlines ...

One of the most impressive abilities of LLMs is code generation. It can boost the efficiency and speed of coding, help bring ideas to life quickly, and improve overall code quality far more easily than before.

However, many have pointed out—and I’ve personally experienced—that unguided code generation might look appealing initially, but over time, the real cost could actually become even higher than without AI. Common issues include:

- Bad software architecture

- Monolithic structures that are hard to maintain

- Code duplication across the project

- Code that’s hard to read or understand (sometimes overcomplicated)

- No clear architecture (more patchwork than well-structured software)

- Lack of input validation and other vulnerabilities

Even more risks may arise with code generation. However, many can be avoided by steering clear of common mistakes and building a governance framework, rather than relying solely on the AI code assistant and the base foundation model (fine-tuned models don’t necessarily produce perfect code, either).

Below are some of the most common mistakes made in AI-powered software development (in no particular order).

Note that your specific enterprise policies and industry best practices will differ, so here we’ll focus on the general problems many face with AI and code generation.

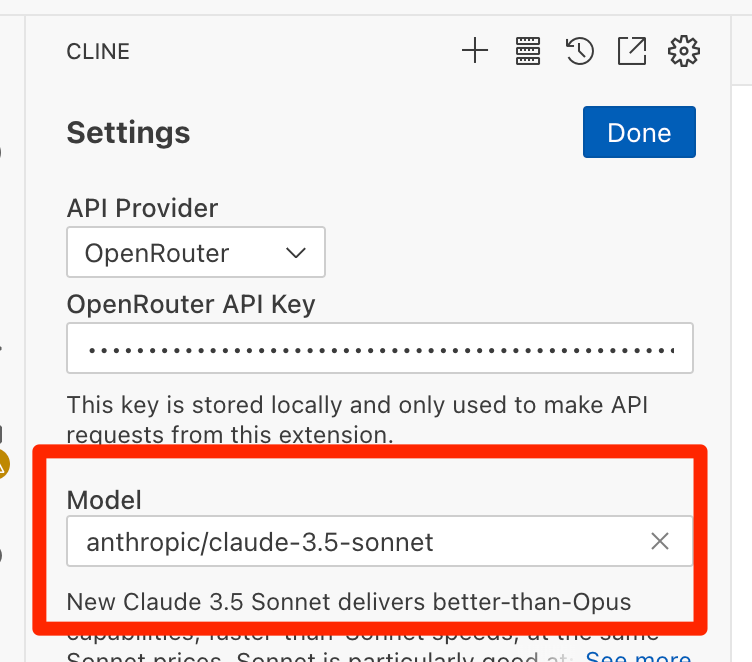

I’ve used many code generation tools, but I mostly got stuck with Cline (previously Claude-Dev). It’s a real alternative to commercial products like Copilot and Cursor, and in my opinion, it’s even better because of its agent-like capabilities.

---

Problem #1: Using an Outdated Model

Sometimes you set the model in the configuration and then forget about it... which leads to working with outdated models that do not include the latest changes in programming languages and frameworks, which can lead to producing code that is as outdated as the model itself.

Always ensure you’re using the latest model.

Here’s an example of the latest OpenRouter configuration:

anthropic/claude-3.5-sonnet

A list of models can be found here (if you use Claude):

https://docs.anthropic.com/en/docs/about-claude/models

And for OpenRouter (my preference because it has fewer daily limits):

https://openrouter.ai/anthropic/claude-3.5-sonnet

---

Problem #2: Too Little Context

Imagine you start developing a piece of software, build one component, then another, and days later you need to restart the session. Your model basically starts from scratch (models forget everything when you reset the session in your code assistant).

How do you get the model up to speed?

You could ask a question and hope it reads all the relevant files on its own, but that’s risky because it might:

- Produce duplicated code (already existing in the project but not recognized by the model)

- Ignore edge cases or important constraints in files it hasn’t read

- Generate code that’s inconsistent with the rest of the codebase